The Boston Consulting Group and CAST, a leader in software analysis and measurement, have established a partnership to help companies address functional, data, and technical complexity in their IT landscape and operations. This article describes the problem and its solutions.

Complexity can be crippling in IT. Functional, data, and technical complexity can be a big barrier to digital transformation—especially for companies that have expanded internationally or by M&A, or evolved over generations of hardware and software advances. The difficulty of addressing this complexity helps explain why, in sectors such as financial services, venture capital–backed start-ups are circling traditional companies sharklike, sensing the blood of inertia in the water. Digital banking start-ups have received more than $65 billion in equity funding since 2005, including more than $3 billion during the first quarter of 2016.

A digital transformation is a huge undertaking, even under favorable circumstances; complexity magnifies the challenge. BCG research has identified four root causes of complexity, ranging from rapid business growth to growth by acquisition to lack of clarity regarding sourcing models. (See “Simplifying IT to Accelerate Digital Transformation,” BCG article, April 2016.) Companies seeking to transform existing infrastructure and applications (as opposed to building greenfield functionality) that do not first tackle their functional, data, and technical complexity issues are setting themselves up for failure.

One approach to attacking complexity is to use the application and data simplification lever of BCG’s Simplify IT framework, which focuses on simplifying the data landscape and consolidating and decommissioning applications, and on clearly defining interfaces—or replacing them with less complex alternatives. Our experience with some 500 companies that have used the Simplify IT approach shows that those that do attack functional, data, and technical complexity achieve cost savings of 15% to 20% of their total IT budgets while increasing their agility and reducing risk. The effort also opens the door to new capabilities, such as omnichannel customer interactions, and helps businesses prepare themselves for more-comprehensive transformation. Yet many companies ignore or overlook this essential task.

Reducing functional, data, and technical complexity involves three key actions:

- Identifying where redundancies reside

- Assessing and improving key software characteristics (especially changeability) and risk factors

- Measuring the impact of IT simplification activities in terms of systems processing capacity, frequency of operational or security failure, total cost of ownership, time to market, and business value

Identifying Functional and Data Redundancy

Any global company that has gone through a merger or acquisition, or has shifted over time from a decentralized operating structure to a centralized one, will inevitably have software redundancies in its IT systems. One company we worked with had 18 different time-reporting systems. Others have accumulated multiple redundant routines inside one computer program. Such redundancies increase the number of applications and the complexity of the data infrastructure that the company must maintain while providing little or no incremental value. Finding, merging, and removing redundant data elements and functionality will improve IT’s responsiveness to business requirements and will significantly reduce the amount of work (and, therefore, the cost) of system enhancement and digitization projects.

Since applications and systems often span multiple business functions, senior leadership is essential to achieving change, and executives at the corporate, group, or CIO level must take ownership of the process. Many redundancies are not visible to individual business unit managers, or cover more than one business unit, and senior-level authority is necessary to address them. Once the redundancies are identified, individual business units and application owners can help prioritize subsequent actions.

The key to our approach is to look for redundancies through the lens of data elements and transactions. We define a transaction as any end-to-end interaction with a user or a client system that either (1) provides the user with information from a system or record, or (2) modifies a system or record on the basis of a user’s request. A user accessing his or her credit card statement online is one example of a transaction; a user making an online credit card payment is another. All transactions rely on some underlying data model to deliver the required functionality.

Approaching redundancies from this perspective provides objectivity, which in turn facilitates strategic discussion of issues such as what elements the ideal application(s) should include, which user transactions can be removed, which third-party modules can be decommissioned, and how applications can be merged or retired.
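As a rough illustration of the data-element lens described above, the following sketch flags pairs of applications whose transactions touch largely the same data elements. The inventory, the application names, the use of Jaccard overlap, and the 0.6 threshold are all assumptions made for the example; they are not part of the Simplify IT framework or of any particular tool.

```python
from itertools import combinations


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of data elements that two applications have in common."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def redundancy_candidates(
    apps: dict[str, set[str]], threshold: float = 0.6
) -> list[tuple[str, str, float]]:
    """Return application pairs whose data-element overlap meets the threshold.

    The threshold is a hypothetical starting point, not a benchmark.
    """
    pairs = []
    for name_a, name_b in combinations(sorted(apps), 2):
        score = jaccard(apps[name_a], apps[name_b])
        if score >= threshold:
            pairs.append((name_a, name_b, round(score, 2)))
    # Highest overlap first, so the strongest candidates lead the discussion.
    return sorted(pairs, key=lambda pair: pair[2], reverse=True)


# Hypothetical inventory: application -> data elements its transactions use.
inventory = {
    "timesheet_eu": {"employee_id", "project_code", "hours", "week"},
    "timesheet_us": {"employee_id", "project_code", "hours", "week", "cost_center"},
    "billing": {"customer_id", "invoice_id", "amount"},
}

candidates = redundancy_candidates(inventory)
for name_a, name_b, score in candidates:
    print(f"{name_a} and {name_b} share {score:.0%} of their data elements")
```

A list like this is only a starting point for the strategic discussion; deciding whether two overlapping applications can actually be merged or retired still requires the business units and application owners.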

Assessing and Improving Software Changeability and Risk Factors

To achieve greater functional simplicity, business unit leaders and CIOs must measure and improve the changeability of their business-critical applications. Changeability is a single quantifiable metric that assesses the ease with which an application can be configured, modified, or enhanced to support the digitization of business. It is a critically important consideration for a company because it determines the speed at which the company can implement change. One way to think about it is this: if the company were a vehicle, high changeability would be equivalent to having a tight turning radius, whereas low changeability would correspond to needing much more space and time to turn around successfully.

Companies must determine which of their applications are integral to the digital transformation, assess each application’s overall role and importance, and measure the changeability of the applications that will form the chassis of the transformation. Companies can then make informed decisions about which applications they can update for the digital environment at reasonable cost and where it may make more sense to seek alternative solutions. In this way, companies stay focused on process and technology improvements that truly have a significant impact. Key stakeholders in application changeability often include the application owners, the head of application development, and the head of enterprise architecture.

Multiple factors typically determine the degree of software changeability:

- Application architecture complexity

- Component coupling and cohesion

- Code documentation and complexity

- SQL (Structured Query Language) complexity

- Compliance with programming best practices

- A profusion of “dead code” that users no longer invoke

Wherever possible, it is critical to automate the delivery of the metrics that assess progress, so that updates are presented regularly and promptly and are not subject to conscious or inadvertent human manipulation. Automation of measurement promotes objectivity and speed, and creates a steady cadence of assessments independent of individuals’ workloads and human interference. Summarizing the technical elements above into a single standardized “score” is an ideal method for tracking the improvement in changeability over time.
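One way to picture such a single score is a weighted average of the factors listed above. The sketch below assumes that each factor has already been measured and normalized to a 0-100 scale where higher means easier to change; the factor names and weights are hypothetical and would need to be calibrated against internal or industry benchmarks.

```python
# Hypothetical weights for the six changeability factors; they are
# illustrative only and are normalized inside the function.
FACTOR_WEIGHTS = {
    "architecture_complexity": 0.25,
    "coupling_and_cohesion": 0.20,
    "code_documentation_and_complexity": 0.20,
    "sql_complexity": 0.10,
    "best_practice_compliance": 0.15,
    "dead_code": 0.10,
}


def changeability_score(factor_scores: dict[str, float]) -> float:
    """Combine per-factor scores (0-100, higher is better) into one number."""
    missing = FACTOR_WEIGHTS.keys() - factor_scores.keys()
    if missing:
        raise ValueError(f"missing factor scores: {sorted(missing)}")
    weighted = sum(
        FACTOR_WEIGHTS[name] * factor_scores[name] for name in FACTOR_WEIGHTS
    )
    return round(weighted / sum(FACTOR_WEIGHTS.values()), 1)


# Hypothetical measurements for one application.
claims_system = {
    "architecture_complexity": 40,
    "coupling_and_cohesion": 55,
    "code_documentation_and_complexity": 60,
    "sql_complexity": 70,
    "best_practice_compliance": 65,
    "dead_code": 30,
}
print(changeability_score(claims_system))
```

Keeping the weights in one place makes the scoring rule auditable, which supports the goal of measurements that are not subject to individual adjustment.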

Taking action on changeability is a process that involves multiple participants. A good first sequence of actions is to calculate a baseline for a portfolio of business-critical applications, identify the applications that scored in the lowest quartile of the changeability assessment (based on internal or industry benchmarks), and then empower the application owners, project managers, and enterprise architects to work collaboratively to create concrete plans to enhance, replace, or simply do away with those applications. A comprehensive cross-business-unit roadmap helps manage the overall effort.
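The baseline-and-quartile step can be sketched in a few lines. This example assumes a simple internal ranking (the lowest-scoring quarter of the portfolio, with at least one application always selected) rather than an industry benchmark, and the application names and scores are invented for illustration.

```python
def lowest_quartile(scores: dict[str, float]) -> list[str]:
    """Return the applications in the bottom quarter of the baseline.

    Ranking is by score, with the application name as a tie-breaker so
    the result is deterministic.
    """
    ranked = sorted(scores, key=lambda app: (scores[app], app))
    count = max(1, len(ranked) // 4) if ranked else 0
    return ranked[:count]


# Hypothetical changeability baseline for business-critical applications.
baseline = {
    "core_banking": 38.0,
    "payments": 61.5,
    "crm": 72.0,
    "claims": 44.5,
    "hr_portal": 80.0,
    "pricing": 55.0,
    "reporting": 67.5,
    "onboarding": 49.0,
}

for app in lowest_quartile(baseline):
    print(f"Review candidate: {app} (score {baseline[app]})")
```

The output of a step like this is a shortlist for the application owners, project managers, and enterprise architects to act on, not a decision in itself.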

Beyond assessing changeability, companies will want to apply software risk indicators—specific metrics that can help in evaluating the state of applications. Improving these metrics, or removing flaws that undermine the software’s effectiveness, can clear the way for software that is better suited for accelerated transformation. Metrics such as reliability, performance efficiency, and security examine nonfunctional properties of software. They can be gathered only by analyzing the composition of the application in its entirety. One major US consumer bank used this approach in its retirement services unit, where the core system consisted of a half-dozen applications. Increasing changeability, reliability, and efficiency over an 18-month period led to a marked increase in the number of projects delivered—and a steep drop in defects.

Reliability is a measure of the code’s ability to handle adverse events and peak loads without failing or corrupting data. Applications with high reliability will continue to operate well under stressful conditions; those with low reliability will encounter atypical problems. For example, a transaction in which one or more application server nodes can fail without producing any disruption of service has a good degree of reliability.

Performance efficiency examines the code for conditions that cause the program to perform poorly. An online transaction that requires extraneous trips between the application server and a database has poor performance efficiency. As a business concentrates on a smaller number of applications, the retained applications must process more information. Any weaknesses in scalability or performance efficiency will then become evident.

Security is a major concern of every CIO. Auditing for vulnerabilities identified by the Common Weakness Enumeration (CWE), developed under the auspices of MITRE and incorporated into Consortium for IT Software Quality (CISQ) standards, will reduce a company’s exposure to security weaknesses that might derail otherwise successful business changes.

For more on software metrics, please visit CISQ.

Measuring the Impact

Defining metrics is not enough. To be useful, the metrics must be collected, evaluated, and acted upon. Perhaps most important, they must be leveraged to track the ultimate goal of transforming the impact of a company’s IT function throughout the organization.

Digital transformation projects involve large investments and can be very risky. To achieve success with such a project, a company must be precise about its objectives and must continually measure progress against them. A commitment to continual measurement helps the company maintain focus on the original scope of the project, prevents diversions, and limits delays. It also permits quick intervention and redirection when problems arise, and demonstrates the value of the investment to business owners and top management.

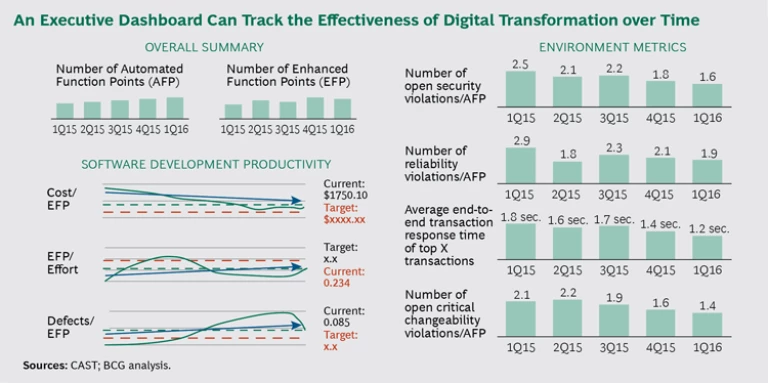

A transformation project typically has several related objectives: shortened time to market, reduced cost, and expanded revenue opportunities, for example. Often, it also has functional objectives, such as enabling mobile applications to access multiple discrete databases through Web services. Progress toward all objectives must be measured. Creating an executive dashboard for each system or application involved in the transformation gives companies greater control over the outcome of digital transformation initiatives. Some metrics, such as business outcomes, can be assessed only on a “before-and-after” basis; other, supporting metrics, such as applications’ changeability and risk indicators, can be measured and adjusted throughout the transformation. (See the exhibit.)

Companies should avoid using subjective or variable metrics, such as the ratio of timely delivery (which the estimation process can obscure), or cost per project (which depends on project scope). The most useful metrics provide a balanced view of the changes and tie them to real business-value improvement. Establishing a single standard unit of output is critical to measuring the throughput of development teams and the quality of the deliverables. (See the sidebar, “Standardized Output Measurement: The Path to Right Decisions.”) Including automated tools eliminates subjectivity, and using a dashboard allows the whole organization to see the progress in an unbiased, consistent manner.

STANDARDIZED OUTPUT MEASUREMENT: THE PATH TO RIGHT DECISIONS

Projects have different scopes and use different technologies that require varying amounts of time to deliver similar functionalities. Without standardization achieved by (for example) establishing a single standard unit of output, an operation such as comparing one project with another, or comparing the time spent on writing 10,000 lines of code in Java with the time spent on writing 10,000 lines of code in C++, provides no more business insight than comparing the average height of developers on different teams.

Function points, which measure business functionality within software, are an example of a standard software output unit. Standardized by the International Function Point Users Group (IFPUG), function points are technology-agnostic, and IT leaders widely accept them as a best-practice sizing measurement for software. Further, the Object Management Group (OMG) has defined an IFPUG-based, automated function-point measurement protocol that enables function points within software to be counted consistently, sustainably, and affordably.

Thanks to the standardization of development output, it is now easier to compare the cost differences across different parts of an organization—for example, the person-days per function point of Team A versus the person-days per function point of Team B. This standardization works because, regardless of any differences in technologies and scope of Team A and Team B, it provides an objective way to compare the throughput of developers in both sections of the organization.
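The comparison is simple arithmetic once function points are available. The sketch below uses invented figures for Team A and Team B; the class and function names are hypothetical, and the function-point counts are assumed to come from a consistent (ideally automated) counting method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TeamDelivery:
    """Effort and output for one team over the same reporting period."""
    team: str
    person_days: float
    function_points: int


def person_days_per_function_point(delivery: TeamDelivery) -> float:
    """Effort per unit of delivered business functionality (lower is better)."""
    if delivery.function_points <= 0:
        raise ValueError("function_points must be positive")
    return delivery.person_days / delivery.function_points


# Hypothetical figures; the teams may use different technologies and scopes.
team_a = TeamDelivery("Team A", person_days=300, function_points=120)
team_b = TeamDelivery("Team B", person_days=450, function_points=150)

for delivery in (team_a, team_b):
    rate = person_days_per_function_point(delivery)
    print(f"{delivery.team}: {rate:.2f} person-days per function point")
```

In this invented example Team B spends more effort per function point even though it delivers more function points in total, which is the kind of difference that raw project cost or lines of code would hide.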

In its role of supporting useful measurement of changeability, reliability, performance efficiency, security, and other nonfunctional attributes, the measurement system should incorporate the following characteristics:

- Standards-based measurement, such as CISQ or International Organization for Standardization (ISO)

- System-level software analysis that measures the entire application, not the sum of the code units

- Consistent measurement across technologies, for comparability

- Industry benchmarks of changeability, reliability, performance efficiency, and security

- Automated, consistent measurement across releases for the core apps portfolio

Anticipating all the digital systems and processes that will become available in future years is impossible, but we can be sure that the speed of change will forever increase. An important goal of any transformation initiative, therefore, is to increase the organization’s capacity to absorb change. Progress in system changeability and development throughput is an important indicator of success in the transformation journey.

Taking the Next Steps

Successful transformation entails balancing the need for speed with the organization’s ability to absorb change. The focus must be first on creating an environment that promotes decisions in line with the CIO’s objectives, and then on automating as much of that environment as possible so that teams can focus on orchestrating business change rather than on performing technical reporting. Essential steps include the following:

- Building a metrics baseline by which to track success, and then achieving agreement on the objectives

- Automating the reporting process to drive consistency and reduce emotion (regardless of methodology, consistency is crucial)

- Establishing business champions to facilitate quick, accurate communications and to resolve business issues

Once implementation starts, the primary task is to measure, adjust, and then measure and adjust again. Remember that progress toward the goal is more often made in incremental steps than in leaps. “We measure software quality at a structural level to make the right trade-offs between delivery speed, business risk, and technical debt,” says the CIO of a major US telecommunications company.

Dealing with data, functional, and technical complexity in the context of a digital transformation is difficult. Following the process outlined here will improve a company’s chances of success and will help ensure lasting business value. CIOs who follow a fact-based approach can avoid many of the hazards that too often derail IT simplification and transformation initiatives.