Operations leaders routinely make critical decisions across the entire value chain. What combination of raw materials will minimize total cost? How can we plan production to maximize throughput? How can we schedule maintenance tasks to minimize disruptions?

Although such decisions typically involve complex tradeoffs, managers have often made them using rules of thumb or basic data analysis. Today, though, leaders can apply advanced analytics techniques—supported by cheaper computing power and improved data capture mechanisms—to make better-informed decisions that optimize value.

However, many operations leaders must climb a steep learning curve to understand the best ways to apply advanced analytics. For those without quantitative backgrounds, sorting through the hype and distinguishing among popular terms in the analytics field (such as big data, operations research, decision support, and Industry 4.0) can be a daunting task. Because these terms are often used interchangeably, it is challenging for leaders to determine how to employ each of the underlying techniques to best advantage. Indeed, many businesses are losing potential value because they cannot spot the opportunities to make the most of advanced analytics.

Building comprehensive expertise in the available analytics techniques is beyond the call of duty for most operations leaders. However, it is essential to gain a better understanding of how to use advanced analytics to inform business decisions.

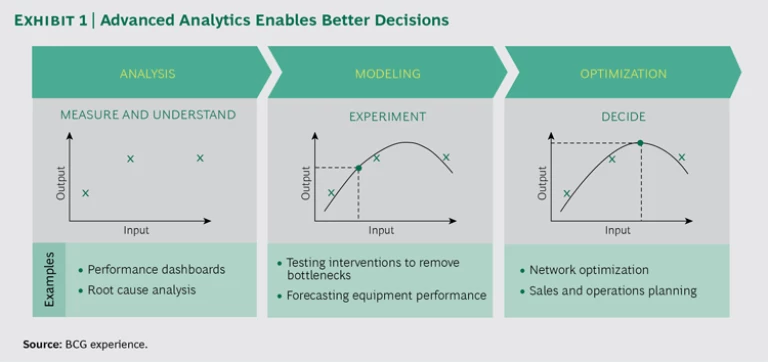

We recommend thinking about analytics in terms of three categories: analysis, modeling, and optimization. These categories follow the application of analytics from performance measurement to predictive modeling to optimal decision making. (See Exhibit 1.)

Analysis: What Happened in the Past?

The most basic use of analytics entails gathering and analyzing data about the company’s past performance. This backward-looking analysis describes and summarizes a selection of KPIs, typically over time. In doing so, the analysis provides insights regarding the factors that drive value; it can also suggest interventions to increase value. By gaining this visibility, the company also obtains a fact base for modeling future performance and making decisions that optimize value creation.

The fact base is typically presented using business intelligence software (such as Tableau, QlikView, or Tibco Spotfire). The dashboards created by such software give nonspecialists the ability to perform complex data analysis. With a few clicks, a manager or executive can generate an impressive array of insights from millions of data points. Only five years ago, a specialist with computer science skills would have needed hours to generate such extensive insights.

On the most basic level, companies can use the insights to identify where value may be “leaking” from the business. A manufacturer we worked with found that welders’ productivity was 15% lower on Fridays, for instance. Another company found that its sales staff typically provided the maximum authorized discount to customers rather than negotiating on price, a common problem across businesses. Insights like these point to the need for corrective actions, such as enhanced approaches to motivating workers or improvements to training programs.
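To make the idea of backward-looking analysis concrete, the following is a minimal Python sketch of the kind of summary a dashboard computes behind the scenes: average productivity grouped by day of the week. The records are made up for illustration (chosen so that Fridays sit 15% below the other days, mirroring the welding example), and the function name `mean_by_weekday` is hypothetical.

```python
from collections import defaultdict
from datetime import date
from statistics import mean

DAY_NAMES = ["Monday", "Tuesday", "Wednesday", "Thursday",
             "Friday", "Saturday", "Sunday"]

# Made-up illustrative records: (work date, units completed per welder-hour).
# These are NOT real data; they only mirror the 15% Friday gap in the text.
records = [
    (date(2024, 1, 8), 10.0),   # Monday
    (date(2024, 1, 9), 10.0),   # Tuesday
    (date(2024, 1, 12), 8.5),   # Friday
    (date(2024, 1, 15), 10.0),  # Monday
    (date(2024, 1, 19), 8.5),   # Friday
]

def mean_by_weekday(rows):
    """Group a KPI by day of the week and return the average per day."""
    groups = defaultdict(list)
    for day, value in rows:
        groups[DAY_NAMES[day.weekday()]].append(value)
    return {name: mean(values) for name, values in groups.items()}

summary = mean_by_weekday(records)
for name, value in summary.items():
    print(f"{name}: {value:.1f} units per welder-hour")
```

A summary like this only describes what happened. Deciding why Fridays lag, and what to do about it, still requires management judgment.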

Modeling: What Does the Future Hold?

A model is an abstract representation of a business. A company can use a model to predict how it might perform in the future under different scenarios. Modeling makes it possible for companies to experiment with their operations in a risk-free manner. Companies can test different strategies, and make mistakes, in a virtual representation of reality.

A company must be able to use models effectively to test how changes to variables in the business environment will affect company performance. And, because business leaders are often skeptical about the accuracy of the results, analytics teams must be prepared to demonstrate that models are realistic. For a model to be realistic, it must be fit for purpose—that is, it must be a sufficiently accurate representation of the business system. The availability of the appropriate data is also a prerequisite.

Many different modeling tools exist, and the correct tool for a specific application depends on the characteristics of the system being modeled. For example, bulk commodity supply chains are typically modeled using “discrete event simulation,” a technique designed to emulate systems that have complex dynamics.

Applications for supply chains and equipment performance illustrate the potential for using models to inform decision making.

Simulation Models for Supply Chains. Supply chains often have complex, dynamic characteristics, such as variability arising from breakdowns or changes in demand or supply patterns. They typically require a buffer or inventory to manage this variability. A model of a supply chain must emulate these dynamics.

For example, we have used a supply chain model to help mining companies decide where to invest capital. The model allows companies to test what happens if they change variables, such as the number of trains or the frequency of conveyor belt breakdowns. A leading mining company used the model and discovered that its operations could be served by a single-track rail line, rather than the double-track line proposed by project engineers. This insight enabled the company to avoid a planned $500 million capital expenditure.

Supply chain models are also useful for testing different operating strategies or philosophies. For example, a port authority applied the insights from modeling to change the rules by which ships were brought through a tidally constrained channel. Applying the new rules enabled the port to increase its capacity by 5%.
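As a rough illustration of how such a model lets a company test changes to variables, here is a deliberately simplified Python sketch. It is a time-stepped simulation rather than a full discrete event simulation, and everything in it is an assumption made for illustration: a conveyor that breaks down at random feeds a stockpile (the buffer), and a ship loader draws from that stockpile. The rates, the function name `simulate_stockpile`, and the parameter values are hypothetical.

```python
import random

def simulate_stockpile(hours, capacity, breakdown_prob, seed=0):
    """Return total tons shipped over the simulated period.

    Each hour the conveyor either runs (adding to the stockpile, up to
    its capacity) or is broken down; the ship loader then takes as much
    as it can from whatever is in the stockpile.
    """
    rng = random.Random(seed)  # fixed seed so scenarios are comparable
    feed_rate = 100            # tons/hour when the conveyor runs (assumed)
    load_rate = 90             # tons/hour the ship loader can take (assumed)
    stock = 0.0
    shipped = 0.0
    for _ in range(hours):
        conveyor_running = rng.random() >= breakdown_prob
        if conveyor_running:
            stock = min(capacity, stock + feed_rate)
        loaded = min(stock, load_rate)
        stock -= loaded
        shipped += loaded
    return shipped

# Experiment risk-free: same breakdown pattern, two different buffer sizes.
small_buffer = simulate_stockpile(hours=1000, capacity=100, breakdown_prob=0.2)
large_buffer = simulate_stockpile(hours=1000, capacity=500, breakdown_prob=0.2)
print(f"Shipped with small buffer: {small_buffer:.0f} tons")
print(f"Shipped with large buffer: {large_buffer:.0f} tons")
```

Because both runs use the same seed, they face the same pattern of breakdowns, so any difference in tons shipped comes from the buffer size alone. A real supply chain model would track many more elements (trains, berths, tides) and would be validated against historical performance.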

Machine Learning for Equipment Performance. Machine-learning techniques are used to model very complex systems, such as jet engines and copper smelters. These techniques use historical data to learn the complex, nonlinear relationships between inputs and outputs. We used a machine-learning algorithm to help a metals company model the performance of a copper smelter, including the highly complex relationships among temperature, oxygen, flux, and feed rate. The predictive insights generated by the machine-learning algorithm proved superior to those obtained from models developed by the company on the basis of physics and chemistry. The company applied the insights to improve yield by 0.5% to 1.0%, amounting to tens of millions of dollars in additional value.

Optimization: What Decisions Maximize Value?

The payoff from applying analytics arises from using the results of modeling to make decisions that optimize value creation. By experimenting with a model to test the results of different decisions, a company can often determine the actions required to achieve the optimal outcome. However, some business problems involve such a complex array of variables that the potential solutions literally number in the trillions. Optimization techniques help companies determine the solutions to these highly complex business problems.

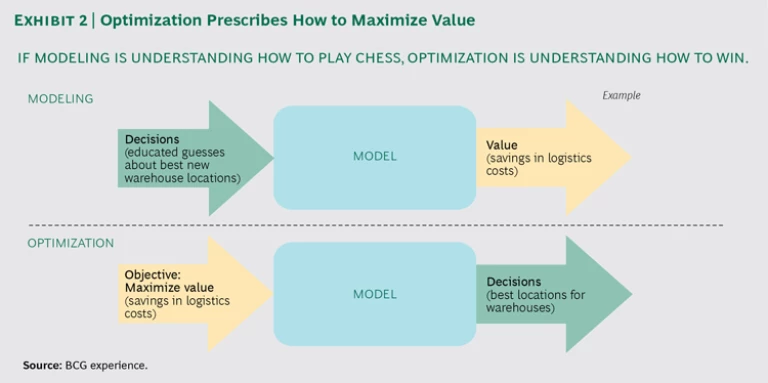

An optimization technique is a mathematical algorithm that calculates which decisions will maximize value in a given set of circumstances, taking into account the objectives and the applicable business rules or constraints. These techniques are prescriptive: they tell companies what to do. In modeling, the input is a set of decisions and the output is the value that would result from implementing these decisions. Optimization reverses this relationship: the input is the value-maximizing objective and the output is the set of decisions that would achieve the objective. (See Exhibit 2.)
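The reversal described above can be sketched in a few lines of Python. This is a toy example under stated assumptions, not a production method: the two products, their profits, and the labor and material limits are all hypothetical, and the search simply tries every plan, which works only for tiny problems. Real applications typically rely on dedicated solvers, for example for linear or mixed-integer programming.

```python
from itertools import product

# Hypothetical toy business: two products sharing limited labor and material.
PROFIT = {"a": 30, "b": 50}    # profit per unit (assumed)
LABOR = {"a": 1, "b": 2}       # labor hours per unit (assumed)
MATERIAL = {"a": 3, "b": 2}    # material units per unit of product (assumed)
LABOR_AVAILABLE = 40
MATERIAL_AVAILABLE = 60

def model(plan):
    """Modeling: a set of decisions goes in, the resulting value comes out."""
    return sum(PROFIT[p] * qty for p, qty in plan.items())

def is_feasible(plan):
    """Business rules: the plan must fit within available labor and material."""
    labor = sum(LABOR[p] * qty for p, qty in plan.items())
    material = sum(MATERIAL[p] * qty for p, qty in plan.items())
    return labor <= LABOR_AVAILABLE and material <= MATERIAL_AVAILABLE

def optimize(max_units=40):
    """Optimization: objective and constraints go in, decisions come out."""
    candidates = ({"a": a, "b": b}
                  for a, b in product(range(max_units + 1), repeat=2))
    feasible = (plan for plan in candidates if is_feasible(plan))
    return max(feasible, key=model)

best_plan = optimize()
print(best_plan, model(best_plan))  # {'a': 10, 'b': 15} 1050
```

Note that `model` answers "what is this plan worth?", while `optimize` answers "which plan is worth the most?" by calling the model on every feasible plan.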

The sophistication of optimization techniques has increased exponentially during the past decade, making it possible to solve a much wider variety of problems. The following examples illustrate the scope and potential for value creation for companies across industries:

- A Foundry. Foundry operations are remarkably complex, making it nearly impossible for an operator to determine the optimal schedule of tasks. One foundry applied an optimization algorithm to overcome this complexity. Inputs included the foundry’s goal for the number of components manufactured per week as well as constraints relating to the availability of labor and material. The output of the optimization was the order in which components should be manufactured. By implementing this decision, the foundry increased capacity by 20% while reducing delivery times.

- A National Broadband Network. A national broadband network is engaged in a multiyear project to roll out internet service across the country. The network comprises a number of technologies, whose cost and speed vary significantly, as does the number of engineers, construction workers, and managers required to build and maintain them. To determine the optimal mix of technologies and the schedule for rolling them out, the company applied an optimization algorithm. The objective was to maximize net present value. The constraints included the number of engineers available and limitations on debt. The output was a fully optimized rollout plan that specified which technologies to use in which locations and how to sequence the rollout. The optimized plan has enabled the network to reduce its funding requirement by $2 billion.

- A Poultry Company. A poultry company had been using rules of thumb to make complex decisions about how to produce and process birds in order to most profitably meet its customers’ needs. Poultry production is a complex business with challenging constraints. For example, suppose the sales team asks the operations team to produce an additional 100 tons of breast meat. Boosting production of breast meat by this amount will also generate an additional 150 tons of leg meat and 40 tons of wings. Significant waste will result if the sales team does not consider whether it can sell the additional tonnage of leg meat and wings. To determine how to address this type of complexity while meeting customer demand, the company used an optimization algorithm. The output specified the quantity of each type of meat to produce in each factory, which size of birds to process, and how to most efficiently transport the products to customers. It also specified which customers were not profitable to serve. The optimized approach is expected to generate additional EBIT of more than $20 million. The approach has allowed the business to serve a large new customer it had previously believed it lacked the capacity to serve. The additional business is worth millions of dollars of margin, and demand can be met with no additional capital investment.

- A Steel Producer. A steel producer sought to redesign its supply chain to meet customer demand at minimal cost. Taking into account the capacity constraints of production lines and warehouses, an algorithm specified the optimal supply chain for 2025—one that would enable a flow of goods across the producer’s network at the lowest cost. The producer has already reduced logistics costs by 10% through better decisions about which products to make where and how to distribute them.
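The co-product constraint in the poultry example above can be made concrete with a short Python sketch. The yield figures (150 tons of leg meat and 40 tons of wings per 100 additional tons of breast meat) come from the example; the assumption that yields scale linearly, the function names, and the demand figures in the usage lines are hypothetical.

```python
# Co-product yields taken from the poultry example:
# 100 extra tons of breast meat also yields 150 tons of leg meat
# and 40 tons of wings. Linear scaling is an assumption.
COPRODUCT_TONS_PER_100_BREAST = {"leg": 150, "wings": 40}

def coproduct_tons(extra_breast_tons):
    """Tons of each co-product generated by extra breast meat production."""
    return {cut: extra_breast_tons * tons / 100
            for cut, tons in COPRODUCT_TONS_PER_100_BREAST.items()}

def unsold_tons(extra_breast_tons, extra_demand_tons):
    """Tons of each co-product left over after meeting extra demand."""
    produced = coproduct_tons(extra_breast_tons)
    return {cut: max(0.0, produced[cut] - extra_demand_tons.get(cut, 0))
            for cut in produced}

# Hypothetical scenario: sales can place 90 extra tons of leg meat
# and 40 extra tons of wings.
print(coproduct_tons(100))                          # {'leg': 150.0, 'wings': 40.0}
print(unsold_tons(100, {"leg": 90, "wings": 40}))   # {'leg': 60.0, 'wings': 0.0}
```

In this hypothetical scenario, 60 tons of leg meat would have no buyer. An optimization algorithm weighs couplings like this across every product, factory, and customer at once, which is what rules of thumb struggle to do.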

Getting Started

As a first step to enhancing the value derived from analytics, a company should review its value chain to identify all the business decisions it is currently making. Look for decisions that are:

- Difficult to make owing to their complexity

- High margin, because the difference between good and best (that is, optimal) has a material impact on value

- Currently being made using gut instinct or unsophisticated analytics tools (such as spreadsheets)

If all three circumstances exist, analytics can almost certainly be valuable to support decision making. Having identified the decisions to prioritize, most companies will need new expertise—either in-house or provided by a third party—to match their business problems to the most appropriate analytics technique. When building an in-house analytics function, it is important to create clear linkages and feedback mechanisms between the field and analytics teams to ensure that the new function is effective and continues to add value over time.

Companies should be mindful that developing support tools to compute the optimal decisions represents only a small part of the work necessary to capture the benefits of analytics. To convert insights into actions, a company must establish processes that enable company-wide, optimal decision making. It must also ensure that decision rights and accountabilities promote the use of these processes and the analytics systems. Finally, it must establish KPIs that incentivize employees to use these advanced techniques and implement the recommendations from analytics teams.

Leading companies are already capturing significant savings and a competitive edge from applying advanced analytics in operations. Today’s applications are just the starting point. In many industries, advanced analytics has the potential to transform how companies manage their operations. Companies that fail to understand and pursue the opportunities risk falling permanently behind the leaders in their increasingly competitive markets. Now is the time to embrace advanced analytics as a fundamental enabler of operational excellence.