Artificial intelligence (AI) has beneficial applications in many areas of government, including traffic management—with data collected in real time from traffic lights, CCTV cameras, and other sources enabling traffic flow optimization—and customer service centers manned by robots that use AI to answer questions. Algorithms and machine-learning techniques, in which computers analyze large amounts of data to detect statistical patterns and develop models that can be used to make accurate predictions, are rapidly becoming key tools for governments.

However, despite the obvious opportunities for efficiency and effectiveness, the role of AI, automation, and robotics in government policy and service delivery remains contentious. For example, can you prevent algorithms based on historical data from perpetuating or reinforcing decades of conscious or unconscious bias? When is it acceptable to use “black box” deep-learning models, whose decision logic cannot readily be explained or understood, even by the data scientists who design the underlying algorithms?

To gain insights into citizens’ attitudes about and perceptions of the use of AI in government, BCG surveyed more than 14,000 internet users around the world as part of its biannual Digital Government Benchmarking study. We asked this broad cross section of citizens to tell us:

- How comfortable they are with certain decisions being made by a computer rather than a human being

- What concerns they have about the use of AI by governments

- How concerned they are about the impact of AI on the economy and jobs

What We Found

Our key findings center on the types of AI use cases respondents said they would support, the ways in which attitudes toward government and demographics affect support, and the ethical and privacy aspects of using AI in government.

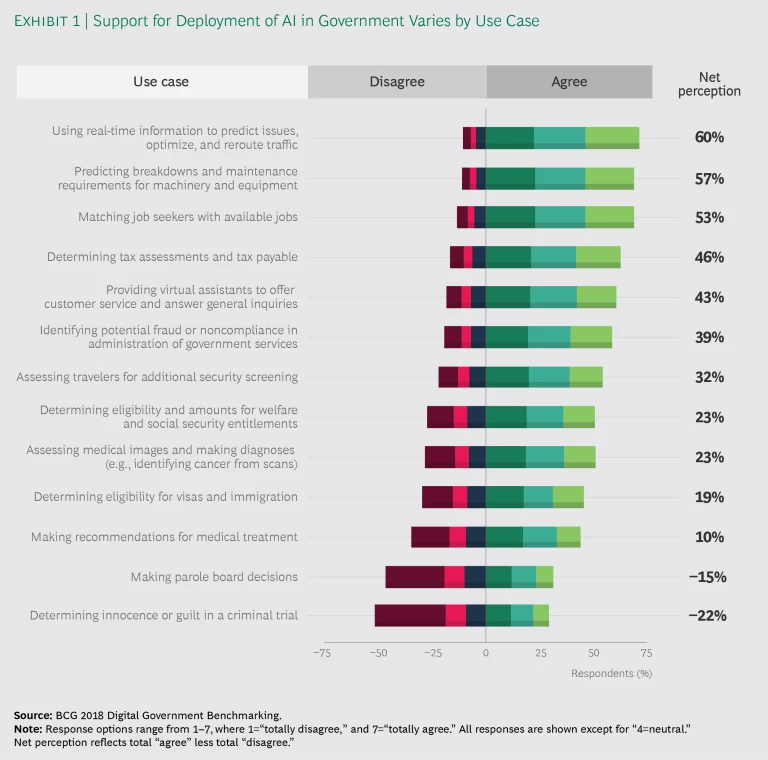

- Citizens were most supportive of using AI for tasks such as transport and traffic optimization, predictive maintenance of public infrastructure, and customer service activities. The majority did not support AI for sensitive decisions associated with the justice system, such as parole board and sentencing recommendations.

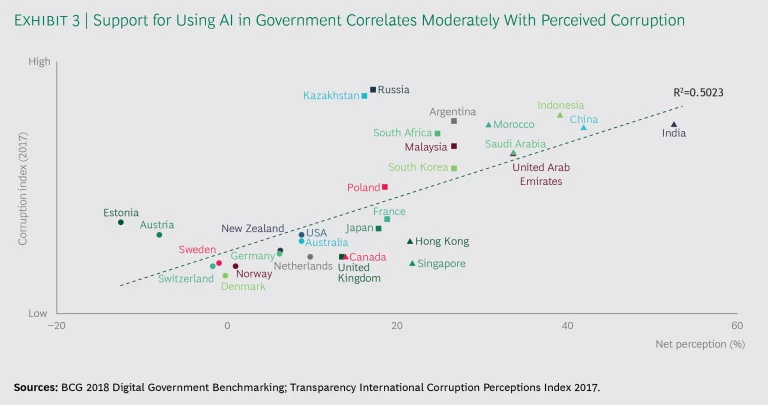

- People in less developed economies and in places with higher perceived levels of corruption tended to be more supportive of the use of AI. For example, the citizens surveyed in India, China, and Indonesia indicated the strongest support for government applications of AI, while the citizens surveyed in Switzerland, Estonia, and Austria offered the weakest support.

- Demographic patterns tend to mirror general attitudes toward technology. Consistent with this, millennials and urban dwellers demonstrated the greatest support for government use of AI, while older people and those in more rural and remote locations showed less support.

- Citizens were most concerned about potential ethical issues and a lack of transparency in decision making, and they expressed significant anxiety that AI will increase automation and reduce employment.

A Cautious Optimism Prevails

Citizens generally feel positive about government use of AI, but the level of support varies widely by use case, and many remain hesitant. Citizens expressed a positive net perception of all 13 potential use cases covered in the survey, except decision making in the justice system. (See Exhibit 1.) For example, 51% of respondents disagreed with using AI to determine innocence or guilt in a criminal trial, and 46% disagreed with its use for making parole decisions. While AI can in theory reduce subjectivity in such decisions, there are still legitimate concerns about the potential for algorithmic error or bias. Furthermore, algorithms cannot truly understand the extenuating circumstances and contextual information that many people believe should be weighed as part of these decisions.

The level of support is high, however, for using AI in many core government decision-making processes, such as tax and welfare administration, fraud and noncompliance monitoring, and, to a lesser extent, immigration and visa processing. Strong support emerged for less sensitive decisions such as traffic and transport optimization. (See the sidebar “AI Can Improve Traffic Management.”) Also well supported was the use of AI for the predictive maintenance of public infrastructure and equipment such as roads, trains, and buses. Support was strong for using AI in customer service channels, too, such as for virtual assistants, avatars, and virtual and augmented reality.

AI Can Improve Traffic Management

Traffic congestion is a major challenge in global cities and is likely to intensify in the coming years due to population growth, urbanization, and demand for greater mobility.

Governments across the world are already using a range of technologies and approaches to harness the power of AI in tackling congestion on the roads, shortening journey times, and reducing carbon dioxide emissions.

The benefits are impressive. In Pennsylvania, Pittsburgh’s AI-powered traffic lights optimize traffic flow and have reduced travel times by 15% to 20% and carbon dioxide emissions by 20%. In China, vehicle traffic monitoring notifies authorities of incidents in real time and helps them to identify high-risk areas for congestion and collisions. This has led to traffic speed increases of 15% and identification of traffic violations with 92% accuracy.

Respondents also supported the use of AI in medical diagnosis and image recognition (51% agreed), an area that presents significant opportunities for improving the speed and accuracy of medical diagnoses. (See the sidebar “Eye Disease Diagnoses Show the Power of AI.”) However, citizens were slightly less confident about using AI to make medical treatment recommendations, and their attitudes toward AI in medical use cases varied widely by country.

Eye Disease Diagnoses Show the Power of AI

AI presents significant opportunities to improve the speed and accuracy of medical diagnoses. For example, two of the most common eye diseases are age-related macular degeneration and diabetic retinopathy. The optical coherence tomography (OCT) scans required to diagnose these diseases are highly complex, and professionals require years of specialized training to analyze and interpret the results. However, after training on fewer than 15,000 scans, AI algorithms developed by Google’s DeepMind were able to make referral suggestions for more than 50 critical eye diseases with 94% accuracy. In this area, AI is already matching, and in some cases exceeding, human performance, enabling faster and more accurate diagnoses and better treatment of patients with eye conditions.

On average, around one in five respondents answered “neither agree nor disagree” when asked about the use of AI for specific use cases. Governments should do more to engage, educate, and communicate with the public about the potential use cases, benefits, and tradeoffs of using AI. In many cases, citizens may not even be aware that their governments are already using AI to augment or automate some of these activities.

Support for Use of AI in Government Varies by Country

While the results of the survey show some clear trends, a closer look reveals both similarities and differences across locations and age groups.

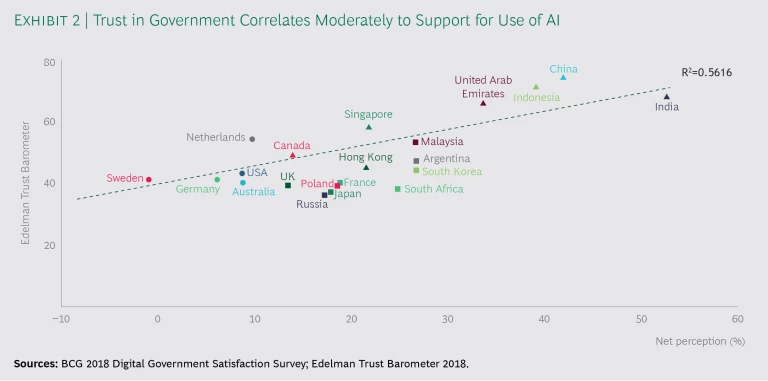

People in emerging markets tend to be more positive about government use of AI than citizens in mature economies. For example, citizens surveyed in countries such as Estonia, Denmark, and Sweden are among the least receptive to the use of AI, while the three most supportive countries are China, the United Arab Emirates (UAE), and Indonesia.

Support for government use of AI correlates moderately with trust in government. Trust in institutions is essential if governments are to gain the support needed to roll out AI capabilities. We found that citizens were most supportive of AI in India, China, Indonesia, Saudi Arabia, and the UAE. This list closely matches the countries with the highest levels of trust in government in the 2018 Edelman Trust Barometer, an annual global survey. In descending order, the top four countries on that measure are China, the UAE, Indonesia, and India. (See Exhibit 2.)

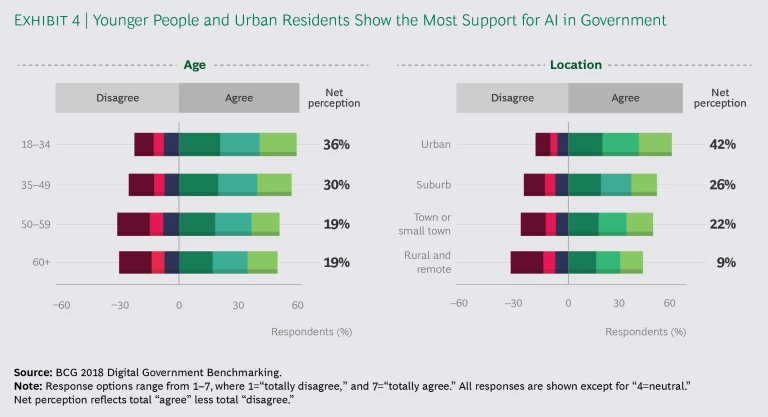

Our analysis also found that less developed economies and countries with higher reported or perceived levels of corruption tend to be more supportive of the use of AI. Examples include Saudi Arabia (ranked 57th), China (77th), India (81st), and Indonesia (96th). (See Exhibit 3.) This could be interpreted as a preference by citizens for AI-based over human decision making where there is less confidence in the machinery of government.

Millennials and urban dwellers are more supportive of the use of AI. Younger people (ages 18–34) were the most supportive of the use of AI by government, with 57% in favor. Younger respondents were also less likely than older people to disagree with the use of AI (21% versus 29%). People living in densely populated urban areas were the most supportive of the use of AI, with 61% agreeing with its use. This compares with people living in suburbs (52%), residents of towns or small towns (50%), and residents of regional, rural, or remote areas (43%).

Younger citizens and city dwellers also expressed the least worry about AI in response to the question: “What concerns you most about the use of AI by governments?” (See Exhibit 4.) While attributing causality to the different views across cohorts is difficult, our survey results tend to mirror population attitudes toward technology in other surveys, which usually reflect relative experience, digital maturity, and adoption rates. This suggests that support for government use of AI may increase naturally over time. It also highlights the need for governments to tailor their communications to the concerns of different audiences to build support for adoption.

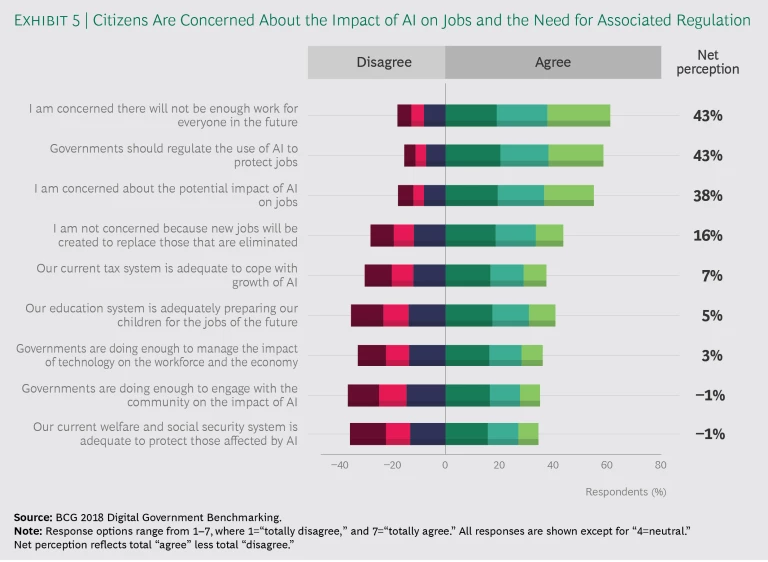

Addressing Citizens’ Concerns About AI

The ethical implications of AI use are among citizens’ top concerns. However, the overall level of concern is lower than expected. When asked about potential concerns around the use of AI by governments, 32% of citizens said that significant ethical issues had not yet been resolved, and 25% were concerned about the potential for bias and discrimination. The other major concerns were the perceived lack of transparency in decision making (31%), the capability of the public sector to use AI (27%), and the accuracy of the results and analysis (25%). This relatively low level of concern could be because adoption is still in its early stages, because awareness of the potential risks is low, or both.

However, citizens are very concerned about the impact of AI on jobs. (See Exhibit 5.) When asked about the implications of AI for the economy and society, citizens expressed significant concerns about the availability of work in the future (61% agree), the need to regulate AI to protect jobs (58% agree), and the potential impact of AI on jobs (54% agree).

Respondents are relatively evenly divided between agreeing and disagreeing on whether their countries’ tax, education, and welfare systems are adequately set up for a world in which AI is pervasive. For example, if AI does in fact lead to a dramatic decrease in employment, how will welfare and education systems respond? What effect will that have on taxation and the way governments fund their activities? Opinion is similarly divided on whether governments are doing enough to manage the impact of technology on the workforce and economy, and to engage with the community on the impact of AI.

What This Means for Government

The results of our survey have important implications for governments as they consider how to use AI and how to develop policies that govern it.

Use case selection and addressing ethical issues are paramount in assuaging citizen concerns. Citizens are clearly worried about the removal of human discretion from certain decisions, particularly in more sensitive domains such as health care and justice. Perceptions of bias and discrimination are major factors that affect use case selection. AI has the potential to reduce the human biases, both cognitive and social, that influence human decision making. One cognitive bias, anchoring, leads people to rely disproportionately on the first piece of information they encounter rather than weighing all information dispassionately. Social biases are based on prior beliefs and worldviews and sometimes manifest as discrimination. Algorithms have the potential to minimize both noise and bias in decision making. For example, an algorithm weighs all inputs exactly as instructed, in the same way for every case.

However, eliminating bias is very difficult. AI learns from data, much of which has been generated by human activity. Because that activity reflects human bias, it is possible to create systems that magnify and perpetuate prejudices that are already present. Creating models free from that bias remains a significant technical challenge. For example, a study in the US found racial bias in COMPAS (Correctional Offender Management Profiling for Alternative Sanctions), a system used to estimate the likelihood of recidivism among criminal offenders. In some cases, such as black box models, it may be impossible to understand how a recommendation or decision was derived. That makes it impossible to meet traditional government requirements for explainability, transparency, and auditability. This is especially true of more complex tools such as neural networks and deep learning.

Governments will need to select carefully how and where they launch pilots and eventually scale them. When identifying use cases that will deliver the greatest benefit from experimentation, governments will need to balance the difficulty of implementation with the benefits, including the potential impact for citizens, the reusability and applicability of a use case to other needs, and the opportunity to reduce costs and free up resources for other uses.

Governments should also consider how to involve citizens in these pilots. Switzerland, for example, is exploring the use of AI to resettle asylum seekers in different parts of the country. Starting in late 2018, the Swiss State Secretariat for Migration and the nonprofit Immigration Policy Lab will test a new, data-driven method for assigning asylum seekers to cantons across the country. Asylum seekers in the pilot will be assessed using an algorithm designed to maximize their chances of finding a job. The algorithm will allow officials to resettle individuals in the canton that best fits their profile rather than allocating them randomly. The program will then follow these asylum seekers over the next several years, comparing their employment rates with those of others who entered the country at the same time.
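The pilot’s actual method is not described here. As a rough illustration of the idea, the following minimal sketch assumes a model has already estimated, for each asylum seeker, the probability of finding a job in each canton. It then works through all person-canton pairs from the highest estimate down, placing each person in the best-scoring canton that still has room. The function name, inputs, probabilities, and capacities are all hypothetical. A greedy pass is a simplification: it is not guaranteed to maximize total predicted employment, and an exact optimum would require an assignment solver.

```python
from typing import Dict


def assign_by_predicted_employment(
    predictions: Dict[str, Dict[str, float]],
    capacity: Dict[str, int],
) -> Dict[str, str]:
    """Greedy, capacity-constrained assignment (hypothetical sketch).

    predictions[person][canton] is an assumed model estimate of the probability
    that `person` finds a job if resettled in `canton`; capacity[canton] is the
    number of places available there.
    """
    remaining = dict(capacity)  # copy so the caller's dict is not modified
    # Every (probability, person, canton) pair, highest probability first.
    pairs = sorted(
        (
            (prob, person, canton)
            for person, scores in predictions.items()
            for canton, prob in scores.items()
        ),
        reverse=True,
    )
    assignment: Dict[str, str] = {}
    for prob, person, canton in pairs:
        # Place a person only if still unplaced and the canton has room left.
        if person not in assignment and remaining.get(canton, 0) > 0:
            assignment[person] = canton
            remaining[canton] -= 1
    # Anyone for whom no listed canton had capacity is omitted from the result.
    return assignment


# Illustrative numbers only; these are not data from the Swiss pilot.
predictions = {
    "person_a": {"Bern": 0.6, "Zurich": 0.7},
    "person_b": {"Bern": 0.5, "Zurich": 0.9},
}
capacity = {"Bern": 1, "Zurich": 1}
print(assign_by_predicted_employment(predictions, capacity))
# {'person_b': 'Zurich', 'person_a': 'Bern'}
```

In this toy case, person_a’s best individual option is Zurich. Giving the single Zurich place to person_b instead yields a higher combined predicted probability (0.9 + 0.6 = 1.5, versus 0.7 + 0.5 = 1.2). This shows how an algorithm that matches people to places can differ from both random allocation and purely individual choices.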

Pilots should be publicized, tracked, and reported on, not only to demonstrate the value of AI but also to build public trust and create transparency. Communication and education will play a large part in building this trust as governments roll out increasingly advanced applications of AI to their policy and delivery environments.

Trust and integrity in government institutions must be built—or rebuilt. Transparency is very important to citizens and should be high on the agenda for governments. This means being clear about the ethical implications of AI, as well as about how it will—and will not—be used.

Governments need to underscore the role of humans in government decision making. In some cases, AI alone can be used to make decisions, but in many others AI should augment human judgment and support decision making. Checks need to be put in place, as well as mechanisms through which citizens can raise concerns. And governments should measure and be transparent about the quality of AI recommendations.

Appropriate oversight of AI will be critical if citizens are to have confidence in its use by government. Legal and regulatory frameworks may need to be enhanced, and a code of conduct or rules for the ethical use of AI should be created. Transparency helps ensure that the algorithms that underpin AI are reliable and protected from manipulation, but providing it becomes more difficult as the algorithms become more complex.

Regulation needs to be carefully thought out, balancing limits on government use of AI and of individuals’ personal data against the need to let government innovate with the technology. Putting rules and accountability frameworks in place will reassure citizens that AI is being used responsibly and ethically. Given the importance of AI to future economic, geopolitical, and security positioning, it is critical that governments have the support of their citizens for its use.

Addressing genuine concerns about the future of work is critical to building citizen acceptance of AI. The confluence of recent developments in big data, cloud-based computing power, and neural networks (which are modeled loosely on the human brain and nervous system to recognize patterns) has accelerated progress in AI. And with the news media and others focusing on the rise of robots and their potential effect on humans, it is not surprising that potential job losses resulting from the use of AI emerge as one of citizens’ key concerns. The fear of “technological unemployment” as machines become dominant in the economy is real. Whether this threat will materialize is another matter entirely. However, these perceived threats could create a significant barrier to the development of AI unless governments address fears of job insecurity and general uncertainty. They can do so through public dialogue and through policies that provide a safety net for those most affected.

Citizens should be supported and empowered to navigate new career pathways through lifelong learning strategies and more tailored career guidance. Governments should prepare for the substantial workforce transitions ahead through policy measures such as the expansion of social safety nets, provision of more targeted reskilling and upskilling programs, and more effective job matching and job placement services.

Governments need to build AI capabilities inside the public sector. As they adopt AI, governments need to educate themselves and prepare for wider AI rollout by building internal capabilities and setting clear data strategies, such as those being pursued by Singapore. (See the sidebar “Case Study: Singapore Smart Nation and Digital Government Group.”) Because the technologies are evolving rapidly, ministers and public servants need a basic understanding of AI. (See “Ten Things Every Manager Should Know About Artificial Intelligence,” BCG article, September 2017.) Building this expertise through recruitment and upskilling may take time, but governments can accelerate it by partnering with companies, startups, universities, and others. Identifying the right mix of current and future skills will be critical to enabling government organizations to scale up their AI-related efforts.

Case Study: Singapore Smart Nation and Digital Government Group

Singapore uses AI and advanced analytics in areas such as mobility, health, and public safety. Formed in May 2017, the Smart Nation and Digital Government Group leads efforts across government to integrate cutting-edge technological capabilities into the provision of government services.

The group promotes application of AI technologies across government, including coordinating between agencies, industry, and the public; developing digital enablers and platforms; and driving the digital transformation of the public service.

As government agencies employ more sophisticated technologies, data privacy will become a priority. In Singapore, the Public Sector (Governance) Bill of January 2018 formalized agency data-sharing frameworks, including conducting regular audits, removing personal identifiers where appropriate, limiting access to sensitive personal data, and introducing criminal punishments for data-related offenses.
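The sidebar does not say how Singapore’s agencies remove personal identifiers. One common technique is pseudonymization. It drops direct identifiers and replaces the record’s ID with a keyed hash, so that datasets can still be linked without revealing who the person is. The minimal sketch below assumes hypothetical field names and a secret key held by the data custodian. It is an illustration, not the framework’s prescribed method. Pseudonymized data can still sometimes be re-identified from the remaining fields (for example, age combined with location), which is one reason frameworks pair this step with access limits and audits.

```python
import hashlib
import hmac

# Hypothetical field names treated as direct identifiers.
DIRECT_IDENTIFIERS = {"name", "national_id", "phone", "address"}


def pseudonymize(record: dict, key: bytes) -> dict:
    """Return a copy of `record` without direct identifiers.

    The national ID is replaced by an HMAC-SHA256 token. The same ID and key
    always produce the same token, so records can be linked across datasets,
    but the token cannot be recomputed from an ID without the secret key.
    """
    token = hmac.new(
        key, str(record["national_id"]).encode("utf-8"), hashlib.sha256
    ).hexdigest()
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["person_token"] = token
    return cleaned


# Illustrative record; in practice the key would be kept secret by the custodian.
record = {"national_id": "S0000000X", "name": "Jane Tan", "age": 72, "district": "Bedok"}
print(pseudonymize(record, key=b"example-secret-key"))
```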

Government uses of AI in Singapore are diverse and include:

- Assistive Technology, Analytics, and Robotics for Aging and Health Care. RoboCoach (a robot) helps to provide physical and cognitive therapy to seniors who have suffered strokes or have disorders such as Alzheimer’s or Parkinson’s.

- Smart Homes. Smart devices available in some homes include a system that monitors elderly residents and provides peace of mind to caregivers, and a utility management system that helps households track their utility usage.

- Preventing Corruption in Procurement. AI algorithms analyze HR and finance data, procurement requests, tender approvals, and workflows to pick up patterns to identify and prevent potential corruption in government.

- Matching Job Seekers with Positions. Machine learning and text analysis identify skills required for jobs and prioritize search results according to the relevance of the job seeker’s skills.

- Traffic Management. An expressway monitoring and advisory system uses technology to detect accidents, vehicle breakdowns, and other incidents, and provides real-time travel time information from the expressway’s entry point to selected exits.

- Lamppost-as-a-Platform. Sensors on lampposts monitor air quality and water levels, count electric scooters in public places, and collect footfall data to support urban and transport planning.

Similarly, governments will need to bolster their data management capabilities, as the adage “garbage in, garbage out” rings especially true for AI. Ensuring the availability of accurate and reliable data is essential for AI tools to deliver the correct decisions. Sourcing appropriate data sets not just from within an organization but also externally should be high on the list of priorities for governments so they can “train” AI algorithms appropriately.

Governments need to focus their attention on educating citizens, creating transparency, and putting in place programs and policies to support the rollout of AI in government. They also need to begin adopting AI in a thoughtful way, soliciting feedback from citizens in the process to help build citizen support for AI.

Artificial intelligence holds great potential for governments as they seek to enhance the efficiency and impact of the services they deliver. However, as with any change to the way policy is made or services are delivered, citizens may have concerns. This is particularly true when the change is driven by complex, rapidly evolving technologies that may be hard to understand and whose implications and unintended consequences are as yet unknown. For this reason, governments need to continue to move forward but also tread carefully when looking to harness the power of AI. Transparency about where and how AI will be used in government will be essential to establishing the technology’s legitimacy in citizens’ eyes and to mitigating their concerns about any negative effects it might have on their lives. Given the speed of technological change, this work must be done sooner rather than later. The good news is that there are many areas where citizens understand the efficiency benefits of the technology. However, if governments want to secure public support, they need to implement AI applications rapidly and unlock the technology’s potential, while simultaneously building trust and creating mechanisms to increase transparency. For governments, as for many organizations, the digital era could be transformative, but only if they are able to bring their citizens with them on the journey.