An artificial intelligence (AI) software development team at a major manufacturing company is assigned to produce a new program for internal operations. It uses data analytics to help reconfigure the firm’s supply chains on the fly. Top leaders have high hopes for this project. It follows the “human plus AI” paradigm, in which algorithms are used to augment rather than replace managerial decision making. If the project can be released quickly, it will demonstrate this company’s competitive prowess and help with recovery from the COVID-19 crisis.

But after a strong start, momentum slows down. No one quite knows why. The original two-month deadline extends to four months, and then to eight. When the program finally passes through its long string of approvals and spec changes, no one can remember what the early enthusiasm was about. The new tools don’t fit easily with existing IT systems, and they are never widely adopted.

Stories like this are all too common. Companies have given data analytics an enormous amount of attention and investment during the past several years, but specific AI projects tend to fall short of their potential. Very few scale up to the entire organization. In fact, according to a recent study by MIT Sloan Management Review and BCG, just two out of five companies that have made investments in AI report obtaining any business gains.

This is a particularly crucial issue right now because every dollar counts, and AI investments are vital for adapting to new ways of working. Business leaders must therefore improve their returns from AI projects. You might think that the answer lies with better technology and even more talent. But the more likely solution is organizational. BCG’s experience with more than 500 analytics projects in the public and private sectors demonstrates that the key factors for delivering AI products successfully—with speed to market and stable software—are mainly related to team dynamics.

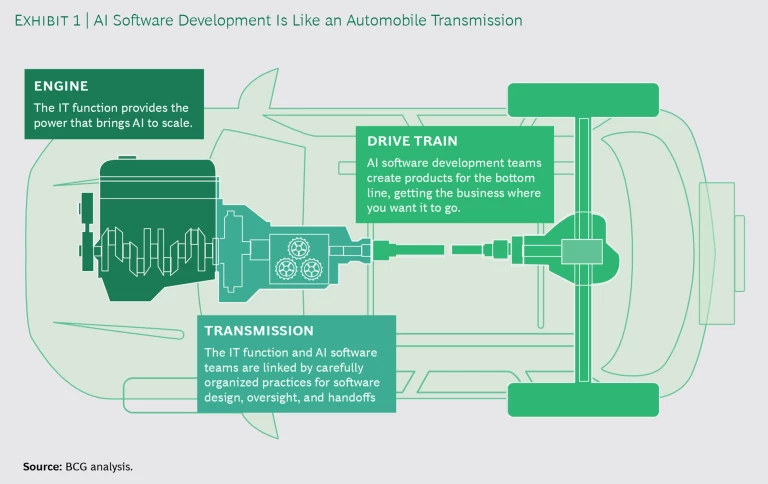

AI Software Development Is Like an Automobile Transmission

To resolve these issues, one can think of AI software development like the transmission of an automobile. The vehicle’s engine is the source of power and control, while the transmission connects the engine to the drivetrain and wheels, coordinating the momentum of the vehicle. Transmission problems impede this coordination, and the reasons for the breakdown usually aren’t immediately clear. (See Exhibit 1.)

The AI development process is prone to similar breakdowns. Coordination is vital, particularly between two technological groups. On one side are the IT functional leaders—we call them cloud architects—who oversee the company-wide digital cloud infrastructure on which AI innovations are developed and launched. On the other side are the project-based analytics team members: the data scientists and data engineers who work closely with business leaders to translate their priorities into code.

The cloud architects tend to prioritize stability, so that the product fits well with the rest of the enterprise system and does not require too much technical maintenance. The data scientists and engineers are more oriented to velocity. They often work in agile teams, with tight deadlines that spur rapid innovation. They expect to release their apps and bots in beta form, refine them on the basis of early responses, and cut their losses if the early releases fail to prove their value.

The “transmission” that connects these two groups consists of practices and processes that have emerged with the rise of AI projects during the past few years. When these practices work well, the company consistently leverages the value of its IT investments. The intended outcomes that may have occurred relatively rarely in the past—such as broad access to organized data, apps deployed across the cloud infrastructure, and automated analytics products that scale to the entire organization—now become routine.

But all too often, these practices fall short. It’s like having a faulty transmission: the engine spins, but the wheels get no power. Only by bringing the IT group and data analytics together, in a well-coordinated way, can you get your AI projects on the road.

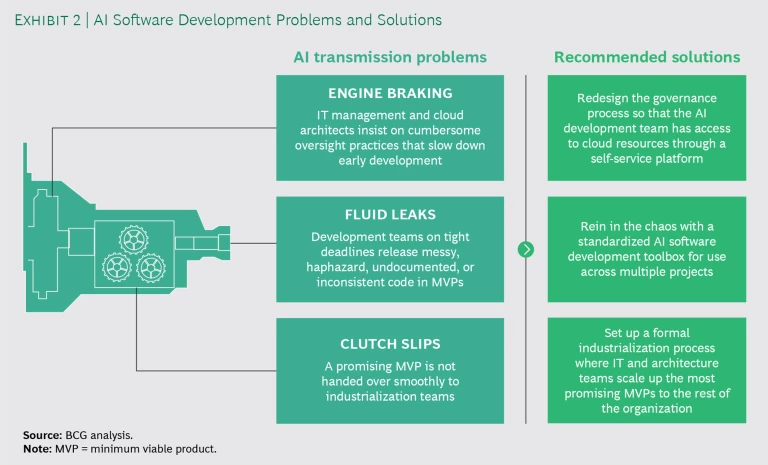

When a car’s transmission starts to fail, it may go unnoticed for a while—until there is a costly breakdown. Similarly, when the AI development processes start to fail, it may become apparent only after a few weeks or months, when the release slips. The solution is to look for three tell-tale indicators of early challenges faced by AI projects that are typically overlooked or ignored. They can all be compared with common transmission problems. (See Exhibit 2.)

The first challenge, delays from cumbersome oversight and approval practices, is like engine braking: a useful technique becomes problematic when overused. The second challenge, unstable software design because of lax standards, could be compared with fluid leaks: a warning sign of inadequate tool management. And the third challenge, poorly coordinated handoffs that don’t quite connect, resembles the problems that occur when the clutch slips.

Fortunately, preventive measures can help companies avoid these three major challenges. They all involve shifts in organizational design and habitual management practice that will make the technology itself far more effective. With these measures in place, there should be no tradeoff between the integrity of the cloud and the business value of any AI-related project. These recommendations also complement other steps that our in-depth studies have shown to be key to success, such as investing in talent.

Cumbersome Oversight Practices: Engine Braking

In the early stages of AI product development, the cloud architects of the IT function often find themselves slowing down the process. Since they have to field requests from many parts of the company, they act as gatekeepers to cloud infrastructure. They must ensure that the projects, including the code, fit the requirements of the company’s platform and don’t waste resources or clash with other initiatives. When done appropriately, this is like downshifting to slow down a vehicle: using the engine’s controls instead of the regular foot brake.

But too much downshifting can damage the engine. In AI software development, cumbersome and heavy-handed approval processes can similarly become problematic and add weeks of delay. For example, there may be an unnecessary level of documentation required for algorithms that are still changing under development.

The solution is not to remove the cloud architects’ governance role. Without it, the new AI programs would be isolated and accident prone. Instead, the answer is to redesign the bridging process between the two groups so that both stability and speed can be priorities.

Our experience demonstrates that the most effective solution is to set up a self-service platform for basic cloud resources. Teams could begin deploying prototypes during the first weeks of development and test cloud-related configurations from the start. A benefit of this option could be to free up time for cloud architects, who are typically in high demand. But to gain the enterprise’s support, the self-service platform would need to be set up in a transparent fashion, giving data scientists and engineers sufficient structure and enough guidance so that they would be trusted to protect the full organization’s IT infrastructure, no matter how innovative their projects may be.
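To make the idea of a guided self-service platform concrete, here is a minimal sketch in Python. It assumes a simple guardrail policy: requests for basic resources within a budget cap are approved automatically, and anything else is escalated to a cloud architect. The resource names, the budget cap, and the `ResourceRequest` type are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical guardrails; a real platform would load these from shared policy.
ALLOWED_RESOURCE_TYPES = {"notebook", "object_storage", "small_vm"}
MAX_MONTHLY_BUDGET_USD = 500.0


@dataclass(frozen=True)
class ResourceRequest:
    team: str
    resource_type: str
    monthly_budget_usd: float


def review_request(request: ResourceRequest) -> List[str]:
    """Return the list of guardrail violations (empty if the request is fine)."""
    problems = []
    if request.resource_type not in ALLOWED_RESOURCE_TYPES:
        problems.append(f"resource type '{request.resource_type}' is not self-service")
    if request.monthly_budget_usd > MAX_MONTHLY_BUDGET_USD:
        problems.append("budget exceeds the self-service cap")
    return problems


def provision(request: ResourceRequest) -> str:
    """Auto-approve requests inside the guardrails; escalate everything else."""
    problems = review_request(request)
    if problems:
        return "escalate to cloud architect: " + "; ".join(problems)
    return f"auto-approved {request.resource_type} for {request.team}"
```

In this sketch the cloud architects stay in control of the policy itself, while routine requests no longer wait in their queue.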

Unstable Software Design: Fluid Leaks

As they sprint toward a new software product, data scientists and engineers often start by producing a minimum viable product (MVP), just good enough for alpha release, with tight deadlines and an urgent demand for business value. MVPs are often known for messy, haphazard, undocumented, or inconsistent code. Technical sloppiness opens the door to security risks, which can go on to infect broader infrastructure. A fragmented AI ecosystem also introduces operational drag that will further reduce speed and efficiency. Like fluid leaks in a vehicle transmission, these problems can seem small and inconsequential at first. But when multiplied across dozens of AI bots and apps, they can cause a great deal of instability and internal damage.

The answer is to rein in the chaos by adopting a standardized toolbox of technical components implemented as code. These would include infrastructure interfaces and lower-layer modules in addition to other fairly standard components, such as a data pipeline, one or more application programming interfaces, and a front-end interface. These components could perhaps be implemented in the cloud architecture, as a managed service by a cloud provider. However, that would require a cloud architect to join the design and implementation teams, which may not be possible.

A still better solution may be to set them up as shareable code—for instance, in a Python library. Under this approach, development teams adopt a standardized framework of lightweight code libraries for common AI components. This keeps the code quality high and the architectural overhead low. It also fits in with the typical toolkit of data scientists, who are accustomed to working with a standard set of open-source Python libraries. This common set of libraries must be continually updated, to keep current with the ever-expanding innovation of the enterprise, and that means paying more attention to collaboration, documentation, training, and team management. The stakes are higher now.
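As an illustration of what a lightweight shared component might look like, the following sketch defines a tiny data pipeline abstraction that several teams could import rather than rewrite. It is not the API of any particular library; the `Pipeline` class, the step functions, and the record fields are all hypothetical.

```python
from typing import Any, Callable, Dict, Iterable, List

Record = Dict[str, Any]
Step = Callable[[Record], Record]


class Pipeline:
    """Applies a fixed sequence of steps to every record, in order."""

    def __init__(self, steps: Iterable[Step]) -> None:
        self.steps: List[Step] = list(steps)

    def run(self, records: Iterable[Record]) -> List[Record]:
        results = []
        for record in records:
            for step in self.steps:
                record = step(record)
            results.append(record)
        return results


def clamp_quantity(record: Record) -> Record:
    """Replace negative quantities with zero without mutating the input."""
    return {**record, "quantity": max(record["quantity"], 0)}


def add_total(record: Record) -> Record:
    """Add a 'total' field computed from quantity and unit price."""
    return {**record, "total": record["quantity"] * record["unit_price"]}


if __name__ == "__main__":
    pipeline = Pipeline([clamp_quantity, add_total])
    print(pipeline.run([{"quantity": 4, "unit_price": 2.5}]))
```

Because every project composes the same small building blocks, reviewers see familiar code, and improvements to a shared step reach every team that uses it.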

With the use of open-source tools, such as BCG GAMMA’s Laminar (for data pipelines) and DAIS (for front-end dashboards), and templates for codebase structures, data scientists can easily learn the best practices in scalable AI product development. They can also be more creative and innovative in their remaining work, since many of the basic operations will already be covered.

Poorly Coordinated Handoffs: Clutch Slips

After an MVP is first released, product development typically changes gears, moving to an industrialization phase. This transition is vital for aligning AI products with broad IT initiatives, such as data stewardship and cloud-native architecture.

But handoff processes are often poorly planned and coordinated, leading to prolonged and painful engagements. The industrialization phase is particularly sensitive. Personnel often change as entire development teams rotate in and out—all while the product is live and in the hands of early users.

In the worst case, a handoff can involve explaining every line of code and design decision in videos—a codification process that might add months to the schedule. A handoff process implemented in this ad hoc manner is the organizational equivalent of a slipped clutch; the whole project loses momentum.

One viable alternative is a formally organized industrialization process, with the same “factory line” approach for all AI projects, perhaps designed by project leaders from across the business units. This ensures that all the teams use the same basic development tools and follow the same guidelines for project milestones and deliverables. Tools and components are scaled up from code implementations to infrastructure implementations, boosting performance and saving money on cloud resources. Data scientists and engineers, moving from project to project, don’t waste their time reinventing the wheel, and cloud architects are more likely to trust their offerings because the tooling set and many of the practices are standardized.

Under this approach, a standardized handoff routinely occurs at the end of the MVP phase, when the product is in front of users and the project owners have concluded that it provides enough value to continue development. After that point, every role is defined in replicable steps, including the signoffs by business and infrastructure leadership. Just as with a well-working automobile transmission, no one need worry about the project becoming disengaged.
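A standardized handoff of this kind can be expressed as a simple, repeatable checklist. The sketch below assumes two required signoffs, business and infrastructure leadership, as described above. The list of deliverables and the `Handoff` type are hypothetical and would be defined by each organization.

```python
from dataclasses import dataclass, field
from typing import List, Set

# The signoffs follow the text; the deliverables are illustrative assumptions.
REQUIRED_SIGNOFFS = ("business", "infrastructure")
REQUIRED_DELIVERABLES = ("documentation", "test_suite", "deployment_config")


@dataclass
class Handoff:
    project: str
    deliverables: Set[str] = field(default_factory=set)
    signoffs: Set[str] = field(default_factory=set)

    def missing(self) -> List[str]:
        """List every deliverable and signoff still outstanding."""
        gaps = [f"deliverable:{d}" for d in REQUIRED_DELIVERABLES
                if d not in self.deliverables]
        gaps += [f"signoff:{s}" for s in REQUIRED_SIGNOFFS
                 if s not in self.signoffs]
        return gaps

    def ready(self) -> bool:
        """The handoff can proceed only when nothing is outstanding."""
        return not self.missing()
```

Because the same checklist applies to every project, a team rotating in can see at a glance what remains before industrialization begins.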

Establishing a New Way of Working

The processes described here can help establish trust among all the people involved. Cloud architects, data engineers, and project owners will now be guided, in their everyday processes, to support one another’s priorities as well as their own. With these routines in place, continually updated and refined, there is no tradeoff between stability and velocity.

But putting these routines in place will require time and attention. Because AI projects are new in so many companies, the product development processes for them have often evolved on an ad hoc basis. Now they must be explicitly replaced. Once the new routines become second nature, however, the company will have an edge over its competitors.

AI product development is hard because most companies aren’t set up properly to handle its multidisciplinary nature. The practices described here can help overcome conflicts of interest by defining an operating model for the development process as a whole. This comprehensive operating model allows companies to see more impact on the bottom line from their longstanding investments in IT, data governance, and analytics. Creative, rapid, and highly effective AI can become the rule, rather than the exception.