In the digital age, a company is only as healthy as its IT systems. Traditionally, IT monitoring was a tool to ensure that IT services were operating effectively. Now, as companies become more digital, the monitoring function safeguards the entire business—accelerating time to market, lowering costs, reducing risk, and more.

But creating an effective IT-monitoring system is difficult, especially for the many companies operating in a hybrid cloud environment. They will have to monitor multiple public and private clouds plus legacy on-premises infrastructure, with thousands of highly diverse subsystems.

Companies that seek to unlock the very substantial benefits of effective, modern IT monitoring face three major challenges:

- Creating a “single pane of glass” that gives a true overview of the complicated, interconnected IT environments that most companies now operate

- Making the best use of the wealth of data produced to drive effective outcomes and deliver ROI

- Creating a new culture in which IT monitoring solves whole-business problems, with managers from outside of IT using the output of a monitoring system to drive digital success

These challenges shouldn’t be underestimated. However, as we outline below, there are routes to success, and because the benefits of modern monitoring are so substantial, companies should embark on them with urgency.

THE NEED FOR A SINGLE PANE OF GLASS

A Complex New Vista to Monitor

Most large organizations today are in a hybrid cloud environment. In recent BCG research with 112 CIOs, 66% said they use two or more public clouds; 21% said they use at least two private clouds. In the near to mid term, the hybrid cloud will be the prevailing deployment model.

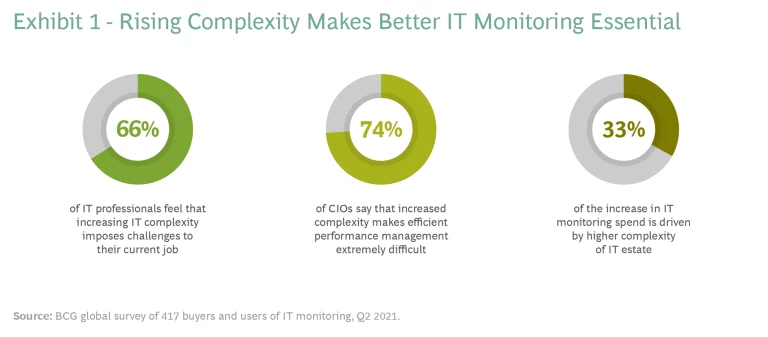

For this article, we did in-depth research on IT monitoring with 417 IT professionals. Most (74%) said increased complexity makes efficient performance management difficult. The CTO of a major European company told us, “Our IT estate is becoming more and more complex. Thus, it’s hard to predict where issues are going to come from, which increases our need for IT monitoring.” (See Exhibit 1 for the impact of increased complexity.)

In a sense, the complexity that companies find themselves confronting is counterintuitive. After all, moving legacy software to the cloud was supposed to make systems more robust and easier to monitor. Cloud-native software that is built using microservices should be more robust still—almost self-healing.

But while it is true that individual components are now more reliable and resilient than ever, the complexity of the modern hybrid cloud environment has created new and hard-to-predict modes of failure and underperformance. It has also led most firms to use a profusion of monitoring tools, each producing slightly different metrics for different parts of the infrastructure.

Adding to the challenge is the need to monitor Software-as-a-Service offerings. For example, if your company needs Office 365 or Salesforce to function, your monitoring systems need to watch those too, reacting fast if they detect performance issues.

The costs of downtime have also risen as companies have become increasingly reliant on their digital functions. Beyond the per-minute loss of revenue, reputational damage and customer attrition mean that the occasional outages that were a nuisance five years ago are now a real business threat.

The importance of monitoring is shown by rising spending. IDC estimates that the global market for commercial IT monitoring grew at a compound annual rate of 11.2% between 2018 and 2020 while the total IT market grew by less than 3%. A major IT-monitoring vendor recently posted annual revenues up more than 30% in a year.

CIOs have told us that the pandemic has pushed IT monitoring higher on their agenda. The cost of downtime has increased in parallel with the need for greater agility in business and delivery. An executive at a major retailer said, “Through COVID-19, we realized the need to have good monitoring systems. It has accelerated timelines and investments.”

Solving the Complexity Challenge

The complexity of the hybrid cloud environment leads to the first challenge we identified, the need to create a single-pane-of-glass view that spans the entire infrastructure.

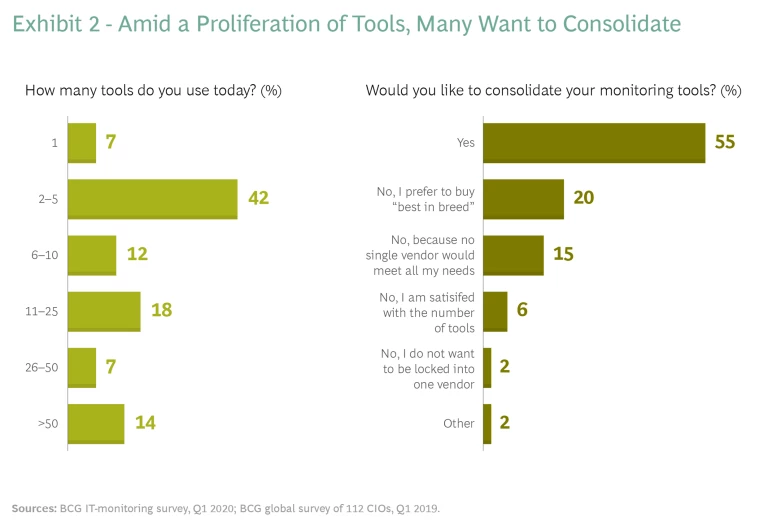

Many organizations are a long way from this ideal at present, using a bewildering profusion of monitoring tools, as detailed in Exhibit 2. In our survey on monitoring, we found that 14% of organizations are wrestling with more than 50 tools. These tended to be organizations with complex hybrid cloud environments.

Traditional IT-monitoring vendors have long acknowledged that users want to consolidate tools. Now, cloud vendors themselves understand this requirement and offer tools that manage and monitor systems from other vendors as well as their own.

The simple way to get this overview is to route the output from multiple monitoring systems into a single dashboard. But the more powerful, strategic option is to create a single data repository that holds technical and business performance data. Using this single repository, plus machine learning, organizations can quantify the correlations between commercial outcomes and IT performance—a brand-new capability. A retailer, for instance, could quantify whether faster page loads keep customers on the site longer and whether that leads to more purchases of additional products. With this knowledge, the retailer could assess the return on IT investment.
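To make the retailer example concrete, here is a minimal sketch of the simplest form such an analysis could take: a Pearson correlation between page-load time and session length. The series names and figures are hypothetical, and a real analysis would need far more data and controls before reading anything causal into the result.

```python
from math import sqrt
from statistics import mean


def pearson(xs, ys):
    """Return the Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    mean_x, mean_y = mean(xs), mean(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return covariance / sqrt(spread_x * spread_y)


# Hypothetical daily averages, assumed to be joined from the single repository.
page_load_seconds = [1.0, 2.0, 3.0, 4.0, 5.0]
session_minutes = [9.5, 8.0, 6.5, 4.0, 2.5]

r = pearson(page_load_seconds, session_minutes)
print(f"correlation between load time and session length: {r:.2f}")
```

A strongly negative value would suggest that slower pages go hand in hand with shorter visits, which is the kind of signal a retailer could then test against purchase data.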

At this point, IT-monitoring systems have earned the name “observability platforms,” indicating that organizations can now, for the first time, get a clear picture of how their IT system supports and interacts with the whole business, providing the insights needed for continuous improvement in the digital enterprise.

THE DATA DELUGE

Upgraded Monitoring

Because companies expect more from their monitoring, vendors are improving their products. A significant shift is that tools can do much more than alert a company to a failure. They consume data from all layers, including network, infrastructure, and applications, and they use that data to warn about subsystems running close to overload so that extra resources can be allocated before failure occurs. They can also suggest optimization and identify underused resources that can be decommissioned to save costs.
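As a rough illustration of the two checks described above, the sketch below labels each subsystem as close to overload, underused, or healthy from a handful of utilization samples. The subsystem names, the sample values, and both thresholds are assumptions chosen for the example, not vendor defaults.

```python
def classify_utilization(samples, overload_at=0.85, idle_below=0.10):
    """Label each subsystem from its utilization samples (fractions of capacity).

    overload_at and idle_below are illustrative thresholds, not recommendations.
    """
    findings = {}
    for name, values in samples.items():
        if not values:
            continue  # nothing measured, nothing to say
        peak = max(values)
        average = sum(values) / len(values)
        if peak >= overload_at:
            findings[name] = "near_overload"  # candidate for extra resources
        elif average < idle_below:
            findings[name] = "underused"  # candidate for decommissioning
        else:
            findings[name] = "ok"
    return findings


# Hypothetical CPU utilization samples per subsystem.
cpu_samples = {
    "checkout-api": [0.62, 0.71, 0.88, 0.91],
    "report-batch": [0.03, 0.05, 0.04, 0.02],
    "search": [0.35, 0.42, 0.40, 0.38],
}

findings = classify_utilization(cpu_samples)
for name, label in findings.items():
    print(name, label)
```

Commercial tools apply far richer logic across network, infrastructure, and application layers; the point here is only that the same data can drive both a warning and a cost-saving recommendation.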

Alongside the proliferation of products come critical technical improvements.

The move to second-generation performance monitoring started about 15 years ago, with the advent of application performance management (APM), a response to the rise in complexity created by service-oriented architectures. APM embeds additional tracking code into applications to report their status to the monitoring platform, giving further insight into the performance and the resources consumed. However, if overused, this extra code creates a drag on performance. We think APM is a must-have technology, but skill is needed to generate a return on the investment.

Another significant development is digital experience monitoring, sometimes known as end-to-end monitoring. This aims to evaluate the customer experience, not just the health of the central infrastructure. Using this kind of technology, a company offering e-commerce services across Europe can get insights from real customer traffic and also simulate customer interactions from Germany, France, and other major markets to understand performance from a client viewpoint rather than just monitoring performance at its central hub.
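One way to picture the client viewpoint is to summarize response times by the market they were measured from. The sketch below assumes that probes or real-user measurements have already been collected per country; the country codes, timings, 90th-percentile choice, and 500 ms target are all hypothetical.

```python
from math import ceil


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list (pct is an integer from 1 to 100)."""
    if not values:
        raise ValueError("need at least one measurement")
    ordered = sorted(values)
    rank = ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def slow_markets(timings_ms, target_ms, pct=90):
    """Return the markets whose pct-th percentile response time exceeds the target."""
    return sorted(
        market
        for market, values in timings_ms.items()
        if percentile(values, pct) > target_ms
    )


# Hypothetical page response times in milliseconds, grouped by client location.
timings_ms = {
    "DE": [210, 220, 215, 230, 225, 218, 222, 228, 212, 940],
    "FR": [180, 185, 190, 820, 830, 175, 182, 188, 810, 840],
}

slow = slow_markets(timings_ms, target_ms=500)
print("markets above target:", slow)
```

In this made-up data, a central dashboard averaging both markets could look acceptable even though customers in one country regularly wait much longer.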

Making Use of the Data

All the enhanced capability, however, leads to the second challenge we identified: making use of the vast amounts of data.

This challenge is intensified by modern monitoring systems that measure business activity as well as technical outcomes and performance.

For instance, a system monitoring an e-commerce site could understand typical ordering patterns based on times, dates, public holidays, and other data. Even if all the technical checks show that systems are working fine, the system should alert if order volumes are very different from those expected.
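A minimal sketch of that kind of business-activity check follows. It compares the latest order count with the history for the same time slot and flags large deviations using a z-score. The order counts and the threshold of three standard deviations are assumptions for illustration; a production system would also account for holidays, promotions, and trend.

```python
from statistics import mean, stdev


def is_anomalous(history, observed, z_threshold=3.0):
    """Return True if observed deviates strongly from the historical counts.

    history holds order counts for comparable slots (for example, the same
    hour on previous weekdays); z_threshold is an illustrative cut-off.
    """
    if len(history) < 2:
        raise ValueError("need at least two historical observations")
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        return observed != baseline
    return abs(observed - baseline) / spread > z_threshold


# Hypothetical orders placed between 10:00 and 11:00 on recent weekdays.
usual_orders = [120, 130, 125, 118, 127, 122]

print(is_anomalous(usual_orders, 126))  # within the usual range
print(is_anomalous(usual_orders, 40))   # far below expectations
```

The second check would raise an alert even if every technical probe were green, which is exactly the situation the paragraph above describes.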

For customer-facing digital businesses such as retailers and banks, we believe collecting this kind of data—and acting on it—is essential.

However, when we spoke to CIOs about digital experience monitoring, 68% said they face significant challenges that include identifying which improvements boost customer engagement and assessing how incremental changes add to the overall customer experience.

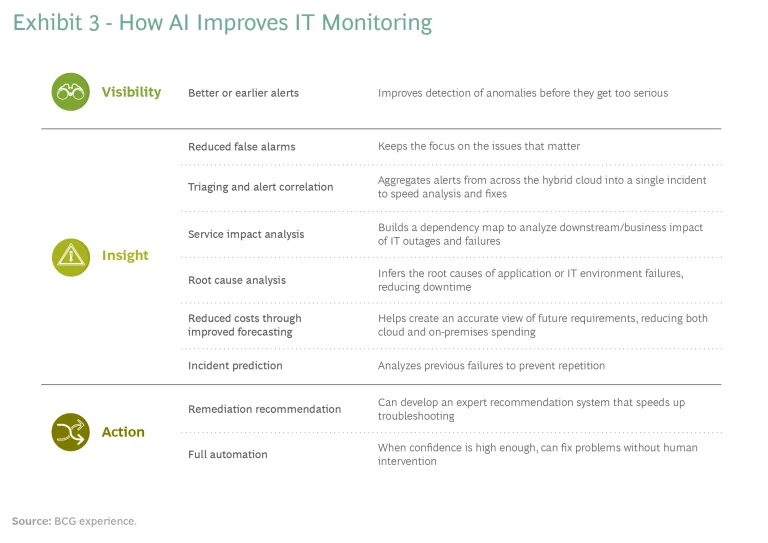

AI and machine learning are significant new additions to monitoring, and they help to bridge the gap between data and action, as outlined in Exhibit 3.

They offer:

- Predictive maintenance and monitoring; identifying, on the basis of usage trends and capacity limits, a potential failure before it occurs

- Prioritized alerts, designating those that need to be escalated for action and those that can be logged for later

- Postfailure problem solving to reduce downtime; this is a significant benefit in the distributed microservices environment, where even identifying which component has failed can be a challenge
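The first item in the list, predicting a failure from usage trends and capacity limits, can be sketched with a simple straight-line forecast. This is a stand-in for the machine-learning models that vendors use, not a description of any particular product; the disk figures are hypothetical and the samples are assumed to be evenly spaced, one per hour.

```python
def hours_until_full(usage, capacity):
    """Estimate hours until a fitted linear trend reaches capacity.

    usage is a list of evenly spaced hourly readings. Returns None when the
    trend is flat or falling, and 0.0 when the trend has already reached capacity.
    """
    n = len(usage)
    if n < 2:
        raise ValueError("need at least two readings to fit a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(usage) / n
    # Ordinary least-squares slope and intercept over sample indexes 0..n-1.
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(usage))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    hours_from_start = (capacity - intercept) / slope
    return max(0.0, hours_from_start - (n - 1))


# Hypothetical disk usage in gigabytes, sampled hourly, on a 100 GB volume.
disk_gb = [50, 52, 54, 56, 58]

remaining = hours_until_full(disk_gb, capacity=100)
print(f"estimated hours until the volume is full: {remaining:.1f}")
```

An estimate like this could also feed the second item in the list: an alert with only a few hours of headroom would be escalated, while one with weeks of headroom would simply be logged.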

These new technologies, combined with cloud-native functionality, offer such enhanced features that they constitute a new, third-generation monitoring system.

CULTURE CHANGE

A Fast-Changing Vendor Landscape

Each new generation of monitoring software has spawned a new cohort of vendors, and the shift to third-generation monitoring is no exception. Legacy, first-generation players, in particular, are losing market share as businesses realize the immense value of more modern solutions. The rise of the third generation is also triggering a wave of consolidation; large vendors are buying specialist providers to gain extra functionality quickly. One vendor has made more than ten acquisitions in just a few years.

At this point, many organizations wonder whether they should migrate to an open-source environment for monitoring. Certainly, open-source solutions such as Prometheus, Elastic, and Graylog are improving. In our research into IT monitoring, 50% of companies said they were either increasing their use of open-source tools for monitoring or planning to adopt them.

However, skilled engineers are required to implement, integrate, and operate open-source solutions. So, open source may be part of the mix, but most companies will entrust the bulk of their monitoring to commercial vendors, which are not only easier to use but may be available as a SaaS model to reduce upfront costs.

Creating a New Culture

We have left discussion of perhaps the greatest challenge until last. The rapid increase in monitoring functionality, plus the ever-increasing complexity of most companies’ IT environment and the importance of IT to the business, means that CIOs need to rethink their approach to monitoring. As they do this, they must bear three imperatives in mind.

Break down the walls in technology. Traditionally, monitoring was a tool for infrastructure teams. Although the rise of DevOps and the move to APM has brought awareness of monitoring needs into the development process and engineering teams, awareness needs to be expanded still further. Today, monitoring is an issue for the whole IT function, with all groups feeding into the process and making maximum use of the data that emerges.

It’s also vital to talk to IT security, whose systems have substantial functional crossover. If you have teams deploying machine learning in a production environment, it is important to engage these too. Tracking “data drift” that causes machine-learning models to degrade is not yet a feature of IT monitoring, but this capability too should one day be visible through the single pane of glass because data drift damages the customer experience just as much as a software bug or a system outage.
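For teams that want a sense of what a data-drift signal might look like, one commonly used measure is the population stability index (PSI), which compares how a model input is distributed in production against a training baseline. The sketch below is our illustration rather than a feature of any monitoring product; the bin count, the small floor used for empty bins, and the sample data are all assumptions.

```python
from math import log


def population_stability_index(baseline, current, bins=10, floor=1e-6):
    """Compare two samples of one numeric feature; larger values mean more drift.

    Bins are equal-width over the baseline range, and values outside that
    range are counted in the first or last bin.
    """
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")
    low, high = min(baseline), max(baseline)
    width = (high - low) / bins

    def shares(values):
        counts = [0] * bins
        for value in values:
            index = int((value - low) / width) if width > 0 else 0
            counts[min(max(index, 0), bins - 1)] += 1
        # Floor empty bins so the logarithm below stays defined.
        return [max(count / len(values), floor) for count in counts]

    expected, actual = shares(baseline), shares(current)
    return sum((a - e) * log(a / e) for e, a in zip(expected, actual))


# Hypothetical feature values: training baseline versus shifted production data.
training_values = [float(v) for v in range(100)]
production_values = [v + 30.0 for v in training_values]

drift = population_stability_index(training_values, production_values)
print(f"PSI: {drift:.2f}")
```

A score near zero indicates that production data still resembles the baseline. Practitioners often treat values above roughly 0.25 as a sign of material drift, although that cut-off is a rule of thumb rather than a standard.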

Break down the walls outside, too. The critical silo to break with IT monitoring is the one that surrounds IT and separates it from the rest of the business. This is not to imply that product managers and business leaders should be checking detailed metrics related to system performance. But they should be watching a dashboard with customer-centric and commercial metrics.

To capture these benefits, non-IT managers must use their new tools with enthusiasm. So, it is essential to bring them into the process as early as possible. Talk early and often about benefits such as quantifying the ROI of infrastructure investment for the first time, and ask how better monitoring could facilitate digital transformation. If business teams are engaged only when plans are advanced and vendor dialogue has begun, the opportunity for the deepest buy-in has been missed.

Think big. It is clear that moving to state-of-the-art monitoring offers very substantial benefits for any digital business—but also that the journey could be a long one. Don’t be daunted.

Step-by-step change may look attractive to companies whose map of monitoring systems currently looks like a plate of spaghetti. But as we have discussed, there are real benefits to taking a big-picture approach, simplifying the monitoring environment, and winnowing the excessive number of tools they currently use. How long can you forgo the higher-level benefits of a single pane of glass? How long do you want to wait until you discover, for the first time, which IT investments will make the most significant difference?

TIME FOR FRESH THINKING

Transitioning to a monitoring platform that delivers a clear and holistic picture of the IT function is complex and will require a great deal of fresh thinking, both inside and outside IT. Companies need to act now, taking a holistic approach to rethinking their monitoring strategy. An effective IT-monitoring strategy is a must-have for any organization that wants to be agile and resilient in the digital age.