This is the first of three pieces that build on our 2019 report, The Dawn of the Deep Tech Ecosystem. It is based on the new report, Deep Tech: The Great Wave of Innovation.

Get ready for the great wave—the next big surge of innovation powered by emerging technologies and the approach of deep tech entrepreneurs. Its economic, business, and social impact will be felt everywhere because deep tech ventures aim to solve many of our most complex problems.

The great wave encompasses artificial intelligence (AI), synthetic biology, nanotechnologies, and quantum computing, among other advanced technologies. But even more significant are the convergences of technologies and of approaches that will accelerate and redefine innovation for decades to come.

As technological advances move from the lab to the marketplace, and as companies form to pursue commercial applications, we see a number of similarities in how and why they are being developed—and a powerful ecosystem is taking shape to drive their development. We witnessed the power of that ecosystem in the year just ended, as Moderna and the team of BioNTech and Pfizer separately took two COVID-19 vaccines from genomic sequence to market in less than a year. Although these companies did remarkable work at unheard-of speed, they benefited from the work of many others, including governments, academia, venture capital, and big business. All of these are critical players in the coming wave.

This article looks at how the great wave in deep tech is taking shape. Subsequent articles will examine how the revolution in nature co-design is taking shape and how the funding of emerging technologies will underpin their future. Along the way, we will answer some key questions:

- What makes deep tech different?

- What makes deep tech run?

- How does deep tech work?

- What are the main challenges for deep tech ahead?

Fasten your seatbelt and get ready to take a ride in the equivalent of a flying car (no fewer than seven are now under development).

The Deep Tech Difference

Deep tech companies are a disparate group. A few, like Moderna, are already household names. Some, such as SpaceX and Blue Origin, have captured the public imagination. Others deal in what was once pure science fiction (such as those flying cars). Still others work in fields that few people can describe—synthetic biology and quantum computing are two—where long-term solutions to diseases, climate change, and other problems are in active development.

Successful deep tech ventures bring together multiple talents (including scientists, engineers, and entrepreneurs) to solve a problem. Often they develop brand-new technologies because no existing technology fully solves the problem at hand. In some instances, though, success depends on developing new applications for established technologies. For example, Boom Supersonic is working on a supersonic plane using only technologies with known certification paths and proven safety records. And Seaborg Technologies is working on building modular floating nuclear power plants powered by compact molten salt reactors, a decades-old technology.

Successful deep tech ventures tend to have four complementary attributes:

- They are problem oriented. They focus on solving large and fundamental issues, as is clear from the fact that 97% of deep tech ventures contribute to at least one of the UN’s sustainable development goals.

- They operate at the convergence of technologies. For example, 96% of deep tech ventures use at least two technologies, and 66% use more than one advanced technology. About 70% of deep tech ventures own patents in their technologies.

- They mostly develop physical products, rather than software. In fact, 83% of deep tech ventures are engaged in building a physical product. They are shifting the innovation equation from bits to bits and atoms, bringing the power of data and computation to the physical world.

- They are at the center of a deep ecosystem. Some 1,500 universities and research labs are involved in deep tech, and deep tech ventures received some 1,500 grants from governments in 2018 alone.

Despite representing a small minority of startups, deep tech ventures have an outsize impact because they attack large-scale issues and because their work is both futuristic and practical. Deep tech ventures reside in what Donald Stokes termed “Pasteur’s Quadrant,” combining a quest for fundamental understanding with applied research.

There Is No Such Thing as a Deep Technology

Asking which technologies qualify as deep tech is the wrong question, because there is no such thing as a deep technology. Instead, deep tech describes an approach enabled by problem orientation and the convergence of approaches and technologies, powered by the design-build-test-learn (DBTL) cycle. As Clayton Christensen, who developed the theory of disruptive technology, noted, few technologies are intrinsically disruptive or sustaining in and of themselves; rather, the application and the business model built around or through the technologies are disruptive. The same idea applies to deep tech.

Deep tech startups question basic barriers, obstacles, and blind spots in the current approach to problem solving. They rely on emerging technologies rooted in science and advanced engineering that offer significant advances over established technologies. In fact, 70% of deep tech ventures own patents that cover the technology they use, and these usually require significant R&D and engineering before companies can bring practical business or consumer applications from lab to market and use them to address fundamental problems.

The novelty of technologies and the ways in which they are used make the deep tech approach possible and provide the power needed to create new markets or disrupt existing industries. Reliance on emerging technologies defines two additional characteristics of the deep tech approach. First, it takes time to move from basic science to applications to actual use cases. The amount of time required varies substantially by use case, but it almost always takes longer than an innovation based on an available technology or existing engineering approach. Nevertheless, as technologies rapidly converge and barriers to innovation fall, the development time for deep tech solutions is dropping fast.

Second, deep tech demands continuous investment from conception through commercialization and often has intensive capital requirements. Companies can reduce the technology risk and the market risk by adopting the DBTL approach and by ensuring that the deep tech venture focuses on problem solving. Often public and private funds and resources are essential for full development, particularly in the early stages. Here, too, the convergence of exponentially progressing technologies and the diminishing barriers to innovation are making the deep tech approach more and more accessible.

Despite the inherent risks of failure, businesses and investors have shown increasing interest in deep tech. According to our preliminary estimates, investment in deep tech (including private investments, minority stakes, mergers and acquisitions, and IPOs) more than quadrupled over a five-year period, from $15 billion in 2016 to more than $60 billion in 2020. The average disclosed amount per private investment event for startups and scale-ups rose from $13 million in 2016 to $44 million in 2020. For early-stage startups, the most recent survey by Hello Tomorrow found that the amount per investment event increased from $36,000 to $2 million between 2016 and 2019.
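The growth figures above can be checked with a few lines of arithmetic. The sketch below is illustrative only: the helper names are hypothetical, the $60 billion figure is treated as a floor (the text says "more than"), and the annualized rate assumes four year-over-year compounding intervals between 2016 and 2020.

```python
def growth_multiple(start: float, end: float) -> float:
    """Return how many times larger the end value is than the start value."""
    return end / start


def annualized_growth(start: float, end: float, intervals: int) -> float:
    """Return the compound annual growth rate over the given number of intervals."""
    return (end / start) ** (1 / intervals) - 1


# Figures quoted in the text; 60.0 is a lower bound ("more than $60 billion").
total_2016, total_2020 = 15.0, 60.0   # total investment, USD billions
deal_2016, deal_2020 = 13.0, 44.0     # average disclosed deal size, USD millions

# Assumption: 2016 -> 2020 spans four compounding intervals.
intervals = 2020 - 2016

print(f"Total investment multiple: {growth_multiple(total_2016, total_2020):.1f}x")
print(f"Total investment annualized growth: "
      f"{annualized_growth(total_2016, total_2020, intervals):.1%}")
print(f"Average deal size multiple: {growth_multiple(deal_2016, deal_2020):.2f}x")
```

Under these assumptions, a fourfold increase over four intervals corresponds to annual growth of a little over 40%, while the average disclosed deal size grew somewhat more slowly than total investment.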

And funding sources are expanding. While information and communications technology (ICT) and biopharma companies continue to invest substantially in deep tech, more traditional large enterprises are becoming increasingly active. For example, Sumitomo Chemical has signed a multiyear partnership with Zymergen to bring new specialty materials to the electronics products market, and Eni has invested $50 million in Commonwealth Fusion Systems and joined its board of directors. Bayer has joined forces with Ginkgo Bioworks to reduce agriculture’s reliance on carbon-intensive nitrogen fertilizers. The resulting venture, Joyn Bio, uses synthetic biology to engineer nitrogen-fixing microbes that enable cereal crops to extract nitrogen from the air in a usable form. Sovereign wealth funds are participating, too. Singapore’s Temasek Holdings has invested in JUST (plant-based egg alternatives), Commonwealth Fusion Systems (commercial fusion energy), and Memphis Meats (animal-cell-based meat).

More and more mainstream companies and institutions are recognizing that solutions to big problems—and the future of innovation—lie in deep tech.

The Fourth Wave of Innovation

The first wave of modern business innovation started in the nineteenth and early twentieth centuries with breakthroughs such as the Bessemer process for manufacturing steel and the Haber-Bosch process for making ammonia.

Following World War II, the second wave of modern business innovation—the information revolution—gave birth to large-company R&D, particularly in the ICT and pharma sectors. Bell Labs, IBM, and Xerox PARC became household names and Nobel Prize workshops. Merck alone launched seven major new drugs during the 1980s.

In the third wave, the digital revolution, two guys in a garage (or a Harvard dorm room) led the innovation charge, which resulted in the rise of Silicon Valley and, later, China’s Gold Coast as global centers of computing and communications technology and economic growth. At the same time, the new field of biotech, also driven by entrepreneurs, fueled much of the innovation in pharmaceuticals.

The wave now taking shape as older barriers to innovation crumble embraces a new model and promises to radically broaden and deepen innovation in every business sector. The increasing power and falling cost of computing and the rise of technology platforms are the most important contributors. Cloud computing is steadily improving performance and expanding breadth of use. Biofoundries are becoming for synthetic biology what cloud computing already is for computation. Similar platforms are emerging in advanced materials (Kebotix and VSPARTICLE are two examples).

Meanwhile, costs continue to fall, including those related to equipment, technology, and access to infrastructure. Increasing use of standards, toolkits, and an open approach to innovation, paired with the ever-increasing availability of information and data, plays an important role as well.

Powering the Great Wave: The Deep Tech Approach

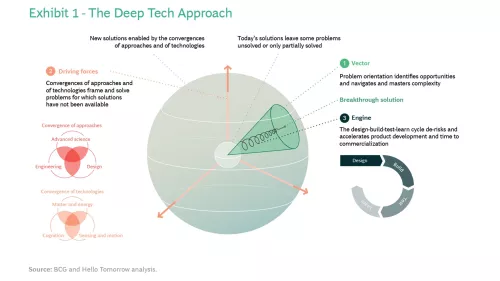

Successful deep tech ventures rely on a threefold approach (see Exhibit 1):

- They use problem orientation to identify opportunities and to navigate and master complexity.

- The convergences of approaches and of technologies power innovation, broaden the option space, and solve problems for which solutions have not previously been available.

- The design-build-test-learn cycle (DBTL) de-risks and speeds product development and time to commercialization.

Problem Orientation. By addressing complex and fundamental problems, deep tech ventures target high-impact opportunities. Rather than relying on known or established solutions, they draw their inspiration from design thinking. Ventures then find the best technologies to solve such problems.

Problem orientation also serves a technical purpose in a deep tech venture, shaping the venture’s operations, organization, and market strategy. It helps the venture remain purpose driven and outcome oriented and enables it to develop the right operating system, which can be crucial for scaling. Setting purpose through problem orientation helps ensure talent retention, global momentum, and a coherent dialogue among multidisciplinary teams.

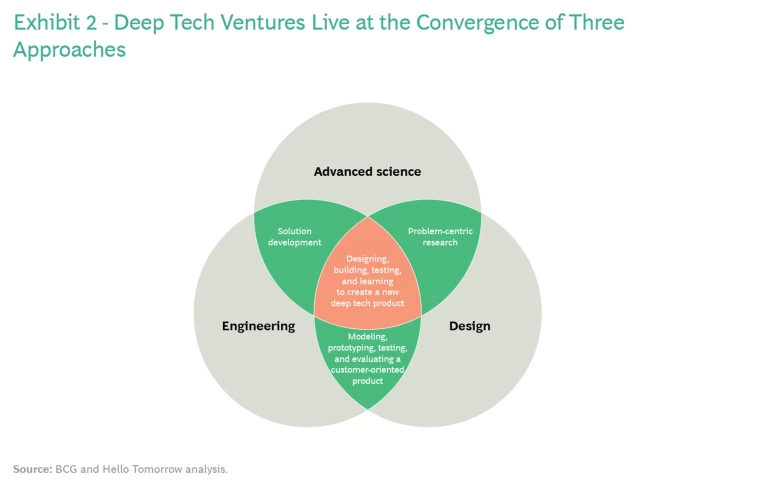

Converging Approaches. The convergence of approaches is another prerequisite for deep tech and a key enabler for companies combining a quest for fundamental understanding with applied research. (See Exhibit 2.)

It starts with design, or problem solving, through interdisciplinary context analysis, problem finding and framing, and idea generation. Advanced science provides the theory that underpins the solution. Engineering ensures technical and commercial (or at least economic) feasibility. But what sounds like a linear process actually happens in parallel, and therein lies much of the challenge of deep tech innovation. Science and engineering must be at the table from the very beginning of the problem solving. Their depth and competence powerfully influence the solutions that emerge.

A good example of converging approaches is Cellino, which brings together a clear problem orientation (making regenerative medicine possible), science (stem cell research), and engineering (turning adult cells into stem cells). Another example is Ginkgo Bioworks, which applies a strong scientific capability to its work in organism design. The company looks for problems in areas where the science has high potential and builds businesses around them. It often works in partnership with investors or corporations to overcome engineering and operational hurdles. In addition to its joint venture with Bayer in microbial fertilizers, Ginkgo has created a company to develop food ingredients (Motif), and it has partnered with Battelle and other strategic investors in bioremediation (Allonnia).

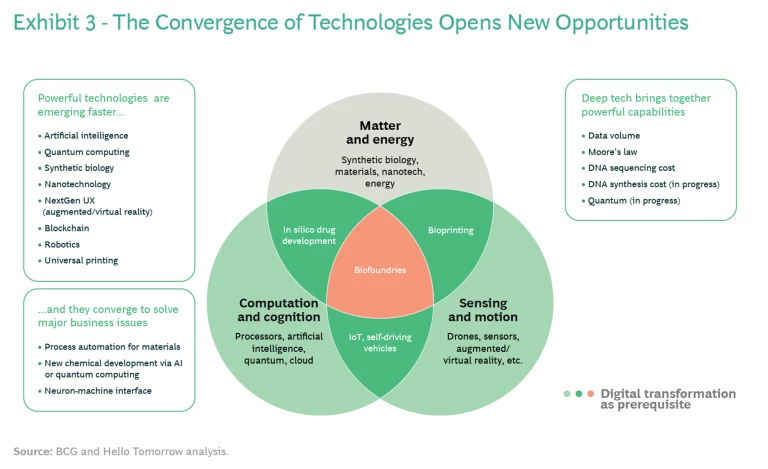

Converging Technologies. Computation and cognition are shaping the world and, in combination with sensing and motion, have led to such advances as autonomous vehicles, the Internet of Things, and robotics. Now, advances in gene sequencing, editing, and writing, as well as in nanotechnology, are clearing a new path for innovation. Researchers are starting to develop tools to design and produce inventions at nanoscopic scale (a nanometer is a billionth of a meter—the size at which fluctuations in individual particles can affect the behavior of systems), enabling companies to leverage nature for product design. This capability has deep implications on its own—but when added to technological capabilities in matter and energy, computation and cognition, and sensors and motion, it allows innovative companies to address previously unsolvable problem sets. (See Exhibit 3.) This is also a defining attribute of deep tech.

Consider the implications in just one field: biology. The ability to use AI and bioprinting to predict the folding of proteins will revolutionize drug discovery and medicine. Ginkgo Bioworks and other biofoundries, which sit at the intersection of the three dimensions, use AI to design genetic constructs and then rely on robotic process automation to build and test them, leading to incredible advances in organism programming. Similarly, Zymergen uses biofabrication techniques that involve advanced AI, automation, and biological engineering to create never-before-seen materials. And Cellino is combining stem cell biology, laser physics, and machine learning in its work on scaling the transformation of adult cells into stem cells.

In the field of physics, where matter and energy join the innovation equation, Commonwealth Fusion Systems aims to accelerate the path to commercial net-gain fusion energy by confining fusion-grade plasmas with strong magnetic fields, unlocking the potential for limitless, clean energy.

The consequences of technological convergence are very different for startups and more-established players. Deep tech startups use their problem-solving orientation to combine emerging technologies in ways that make the previously impossible possible, often at an exponential pace. Established players often struggle with this dynamic—for example, in embracing digital technologies, let alone dealing with the convergence between computing and cognition and sensing and motion. Adding another converging technology to the mix is often more confounding than clarifying in terms of setting a future direction for R&D.

Design-Build-Test-Learn. If converging approaches and technologies inform the deep tech approach, the design-build-test-learn (DBTL) engineering cycle is the engine that drives it. DBTL provides the bridge between the problem to be addressed and the science and technologies to be put in place. In fact, the problem orientation is a prerequisite for DBTL, since every iteration in the DBTL cycle involves measuring its contribution toward solving the problem at hand.

The benefits of DBTL are similar to those that the lean build-measure-learn loop provides (such as speed and agility), but DBTL introduces several major advances. First, deep tech’s impact on process speed is massive, often by orders of magnitude. Whereas the build-measure-learn loop occurs in the world of bits (that is, software), the DBTL cycle happens in the world of bits and atoms. It includes technologies such as AI, quantum-inspired algorithms, advanced sensing, robotics, and additive manufacturing. Bringing together these technologies leads to step changes in capability. For example, in early demonstrations of its tech platform, Kebotix, an advanced materials and robotics company, reduced the development time for OLED (organic light-emitting diode) materials from 7 years to 1.5 years.

Second, the multidimensional design phase of the DBTL cycle is a source of competitive advantage. Each step in the design cycle has its own peculiarities, and the supporting technologies evolve swiftly. Frequently, processes that work in the lab do not work at scale, so deep tech ventures must allocate time and resources to ensure that the minimum viable product (MVP) that they design extracts the maximum value out of the cycle and can advance at the maximum viable speed. Commonwealth Fusion Systems, for example, chose to use high-temperature superconducting magnets rather than plasma physics for its MVP because plasma physics was unsuitable for fast and reliable application of the DBTL cycle and would have held back overall advancement.

Third, DBTL in deep tech, as in software design, plays a de-risking role—but in deep tech, the de-risking goes far beyond market risk to apply to the entire venture. In each iteration of the product, starting with the initial MVP, the deep tech DBTL cycle serves as the main de-risking instrument, and each new version must carry a certification of the retired risk. Every successful iteration through the DBTL cycle thus represents a milestone in the development of the venture and is relevant not only for management but for investors and all other stakeholders involved.

Here’s how each stage of DBTL works in a deep tech context.

The core of the innovation process is the design stage, where much of the value is created. Here, faster access to information and cheaper and more powerful computing equipment accelerate a hypothesis-driven process. In the past ten years, a massive increase in available information, combined with faster and more open-source access, has fostered collaboration and open innovation. Faster, more affordable, and more specialized computing equipment simplifies the task of designing models. Engineers can scan prototypes and equip them with sensors to provide real-time performance data that loops back into the design process, enabling the object to co-design itself.

For example, Airbus relied on generative design techniques using Autodesk software, advances in material science, and 3D printers to design an airplane partition panel that weighs about half as much as previous designs, reducing fuel consumption and CO2 emissions.

Similarly, designers can use augmented and virtual reality tools to devise a product without having to physically build it, reducing the number of physical prototypes needed and increasing the precision of each iteration. This approach simultaneously lowers building costs and improves product design. As advanced technologies become more accessible, more people will be able to participate in the design phase, even if they do not have extensive scientific backgrounds.

In the future, quantum computers will possess vast calculation capabilities, processing enormous amounts of information and executing some algorithms exponentially faster than classical machines. This will open up new possibilities for computation-based endeavors. Quantum computing is likely to have a major impact in such fields as biopharma, chemicals, materials design, and fluid dynamics. Its power is already at work in the form of quantum-inspired algorithms. The French startup Aqemia claims that its quantum-inspired algorithm enables it to identify the most suitable binding molecule for drug development 10,000 times faster than is possible through conventional approaches. Similarly, London-based startup Rahko is building a quantum chemistry platform that can simulate materials to discover and develop new molecules with unparalleled speed and accuracy, and at greatly reduced cost.

During the build and test stages, companies can achieve huge economies of scale, high speed and throughput, and much improved precision, thanks to advances in platforms and robotic process automation and a continuing decline in costs. Large communities of users harness and contribute to emerging platforms in multiple deep tech fields, giving even small startups scale and access to capabilities that would be too costly, time consuming, or technologically challenging to develop in-house. Companies can use cloud computing platforms, synthetic biology materials platforms, and shared spaces to build and test designs. Robotic process automation transfers the testing process from humans to bots and automates it. Testing runs 24/7, produces fewer errors, and can incorporate multitasking elements, leading to a big increase in the number of tests conducted and, in turn, to faster achievement of a better-performing solution. For instance, enEvolv (which Zymergen acquired in 2020) creates chemicals, enzymes, and small molecules on the basis of an automated process that builds and tests billions of unique designs from many modifications of one DNA molecule.

AI and other advanced technologies speed up the learning stage, too. Companies compete on learning, and those that learn best and quickest win. With AI, companies can run huge volumes of data through machine learning algorithms that learn from the characteristics of the developed product and the test results. The algorithms can ascertain which types of product are promising and which are not, and can automatically return the results to the design stage via feedback loops. The rate of learning increases exponentially, with time scales plummeting from weeks or months to hours or even minutes.

Kebotix combines machine learning algorithms that model molecular structures with an autonomous robotics lab that synthesizes, tests, and feeds back the results to the algorithms. By applying heuristics, the algorithms adapt to the results from the lab, creating a closed loop for fast learning and simulation.
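The closed loop described above can be illustrated with a toy sketch. This is not Kebotix's method or any company's actual pipeline: the `lab_test` scoring function, the target value of 3.0, and the simple hill-climbing rule standing in for the machine learning model are all hypothetical assumptions, chosen only to show how test results feed back into the next design.

```python
import random


def lab_test(design: float) -> float:
    """Hypothetical stand-in for the build and test stages.

    Scores a design parameter; the score peaks at design == 3.0 (an assumption).
    """
    return -(design - 3.0) ** 2


def dbtl_loop(start: float, iterations: int = 50, step: float = 1.0, seed: int = 0):
    """Run a toy design-build-test-learn loop using simple hill climbing."""
    rng = random.Random(seed)
    best_design, best_score = start, lab_test(start)
    history = [(best_design, best_score)]
    for _ in range(iterations):
        # Design: propose a candidate near the best design found so far.
        candidate = best_design + rng.uniform(-step, step)
        # Build and test: score the candidate in the simulated lab.
        score = lab_test(candidate)
        # Learn: keep improvements; after a miss, shrink the search radius.
        if score > best_score:
            best_design, best_score = candidate, score
        else:
            step *= 0.9
        history.append((best_design, best_score))
    return best_design, best_score, history


best_design, best_score, history = dbtl_loop(start=0.0)
print(f"Best design after {len(history) - 1} iterations: {best_design:.3f}")
```

Each pass through the loop either retires some uncertainty (a better design is found) or narrows where to look next. A real system would replace the hill-climbing rule with a learned model and the scoring function with physical experiments.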

Four Moments of Truth: The Deep Tech Catechism

The US Defense Advanced Research Projects Agency (DARPA) has produced major technological innovations (the internet and GPS are two) by thinking big and taking chances. But how does it decide which potential advances are worth pursuing, given the inherent risks of the effort?

Former DARPA director George Heilmeier assembled a series of questions known as the "Heilmeier Catechism" to help agency officials evaluate proposed research programs. The questions include: What are the limits of current practice? What is new in your approach, and why do you think it will be successful? If you are successful, what difference will it make? What are the risks? How much will it cost? How long will it take?

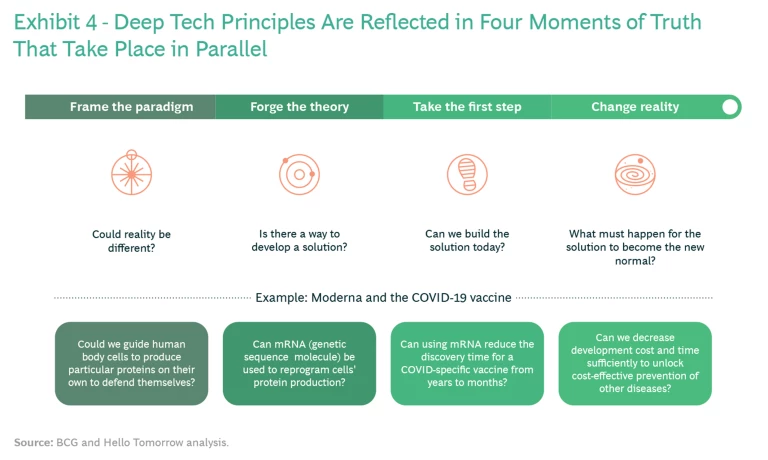

In a similar vein, deep tech ventures take shape across four moments of truth that occur in parallel, each posing a critical question (see Exhibit 4):

- Frame the Paradigm. Could reality be different?

- Forge the Theory. Is there a way to develop a solution?

- Take the First Step. Can we build the solution today?

- Change the Reality. What must happen for the solution to become the new normal?

Each of these four general questions requires restatement in the context of the specific venture under consideration to determine whether solving the problem through the deep tech approach is probable, possible, real, and profitable. For example, Moderna, the first company to develop a vaccine for the novel coronavirus and the second to bring it to market, faced these four moments of truth:

- Frame the Paradigm. Could we guide human body cells to produce particular proteins on their own to defend themselves?

- Forge the Theory. Can mRNA (genetic sequence molecule) be used to reprogram cells' protein production?

- Take the First Step. Can using mRNA reduce the discovery time for a COVID-specific vaccine from years to months?

- Change the Reality. Can we decrease development cost and time sufficiently to unlock cost-effective prevention of other diseases?

Moderna was successful because it found affirmative answers to each of these big questions. It also benefited from having a balanced mix of founders who possessed relevant scientific, engineering, and business skills. Moderna’s team included a venture capitalist, academic experts in stem cell research and regenerative biology and bioengineering, and a former biotech CEO. In addition, it tapped into a full ecosystem of investors, partners, and supporters, including the US government (DARPA and BARDA), big pharma (AstraZeneca, Merck, and Vertex), academia (Karolinska Institutet and Institut Pasteur), and foundations (Gates).

The challenge in dealing with the four moments of truth is that all of them must be addressed early on, more or less at the same time. The relevance of the question associated with each moment of truth will vary over time, but addressing all of them is essential to de-risking the endeavor by anticipating difficulties and adapting strategy and execution as needed.

Anticipating trouble is not specific to deep tech ventures, of course, but it is a key factor in successful deep tech ventures. The importance of addressing the four moments of truth from the outset is evident in the case of Seaborg Technologies, which is developing a new type of nuclear reactor—a compact molten salt reactor (CMSR)—that promises to function as a scalable, inherently safe, cheaper-than-coal, dispatchable power source by 2025. CMSRs address a major safety concern of nuclear power generation. Because they use molten salt (a fluid) as a fuel, instead of traditional solid fuel, their reactor cannot melt down or explode. Any breach of the reactor will result in leakage of the liquid fuel, which will then solidify without harmful release of radioactive gases to air or water. Seaborg delivers its CMSR in modular floating power plants, nuclear power barges, where the reactor can operate for 12 years without refueling. This permits deployment of the CMSRs with minimal focus on logistics and not-in-my-backyard concerns.

Seaborg addressed the fourth moment of truth—what it must do to change reality and enable CMSRs to become the new normal—from the get-go. By building floating power plants, Seaborg introduced a completely new approach to regulatory approval. A molten salt nuclear reactor embedded in a concrete power plant would take years to get regulatory approval. And in countries with limited expertise in the field, finding a local competent regulator (a regulatory requirement) would have entailed an equally lengthy process.

Historically, these regulatory issues have been a big hurdle to delivering nuclear energy in some areas of the world, including Southeast Asia (a region where solar and wind energy also face challenges). Seaborg’s nuclear power barge follows the regulatory framework of the American Bureau of Shipping’s New Technology Qualification process, a five-phase process that aligns with product development phases. The power barge can be built in a country with competent regulators, approved there, and then shipped to a location overseas.

Seaborg is still engaged in resolving the third moment of truth. It identified the problem and framed the paradigm, finding a way to make safe, cheap, and clean nuclear energy possible. Now, it is addressing the question of building it today. Its unique approach to regulatory approval already supplies a big part of the answer: Seaborg’s power barge can become a new normal because it provides safe, cheap, and clean nuclear energy without having regulatory constraints limit the places and the speed at which it can be deployed.

The example of Seaborg’s CMSR barge highlights another important element of deep tech ventures. The fission physics underlying molten salt reactors is well established, and Seaborg is not innovating on that path. Instead, it is innovating through the convergence of different disciplines and technologies, combining neutronics and fuel dynamics with computational advances, looking at advanced materials to overcome corrosion and radioactivity resistance, and addressing the regulatory approval process in a novel way. Seaborg’s approach focuses on putting all of the pieces together to achieve its goal and, through this problem-oriented approach, adopting a new perspective to come up with innovative responses to the challenge it faces.

Four Challenges for Deep Tech

For all its promise, deep tech must overcome multiple challenges to reach its full potential. Four challenges in particular stand out, with implications not only for deep tech ventures but also for all participants in the ecosystem:

- The need for reimagination

- The need to push science boundaries

- Difficulties in scaling up

- Difficulties in accessing funding

The Need for Reimagination. People are not always quick to see how science and technology can reshape processes or solve problems. It took businesses 20 years to rethink the factory floor after electric motors replaced steam engines.

For deep tech ventures, which are often based on applying a technological breakthrough to solve a problem, finding the right business framework can be a major challenge. Many struggle to identify a compelling value proposition through a clear reimagination of value chains and business models.

Often the principal challenge for large corporations that want to expand their innovation programs by engaging with deep tech is to imagine products and processes that come from a very different source or process. In previous decades, 77% of industry-leading companies were still leading five years later, but today, in a more dynamic market where continuous innovation and reinvention are the keys to success, this figure has almost halved to 44%.

In this regard, big companies may face the toughest challenge of any participants in the deep tech ecosystem. Apart from needing to understand the applications and use cases of emerging technologies in order to fully engage deep tech, big companies must leverage counterfactual as well as factual skills, cultivate playfulness, encourage cognitive diversity, and ensure that senior people are regularly exposed to the unknown.

The Need to Push Science Boundaries. Although science has made enormous progress in many fields, in many areas researchers are still only scratching the surface of understanding what is possible. In biology, for example, the complexity of nature is far from fully understood. And in chemistry, the complexity of nanoparticles as multicomponent 3D structures, for example, remains a big challenge for design and engineering.

Despite increasing interest in soft robotics (building robots from materials similar to those in living organisms), researchers have developed relatively few prototypes. The behavior of soft materials is difficult to comprehend and therefore hard to control and activate. Quantum computing has enormous potential, but multiple technical challenges hinder progress. Scientists have made enormous strides in AI and machine learning, but many issues are still unresolved.

Governments, universities, and startups can all work to push the boundaries of science and to translate technological capabilities into business applications.

Difficulties in Scaling Up. Deep tech companies develop fundamental innovations that often yield new physical products, but they may lack relevant scale-up experience.

Scaling up a deep tech physical product—and a related manufacturing process—can be complex and costly. In addition to establishing suitable physical facilities, ventures must overcome engineering challenges in a way that meets design-to-cost parameters. Corporations and governments can help—the former by lending expertise in engineering and manufacturing at scale, and the latter by serving as early test customers for the new product.

Difficulties in Accessing Funding. Investment in deep tech has risen in recent years, but the current investment model remains a hindrance. In particular, today’s widely followed venture capital model is insufficient in scope and unevenly weighted toward certain technologies, such as AI, machine learning, and life sciences.

Making things more challenging is the difficulty in deep tech of shifting from the laboratory stage (which tends to be grant- or subsidy-funded) to investment-based venture funding. Many private equity and venture capital funds are structurally constrained (by lifetime, size, and incentive limits) from investing in deep tech. Most do not have the talent to fully understand the science and technology risks. Moreover, many venture capital funds have lost their original “venture” mindset and instead follow the lead of others or make safer bets on more-established and better-understood technologies.

For all the harm it has done, the COVID-19 pandemic has also shined a spotlight on deep tech’s ability to solve a human problem of historic proportions—and to do it speedily, efficiently, and at relatively low cost. In its essence, the coming great wave brings a new approach to innovation by seeking to solve fundamental and complex problems.

Deep tech draws together three approaches (advanced science, engineering, and design) to master problem complexity and three technology domains (matter and energy, computation and cognition, and sensing and motion) to leverage their combined problem-solving potential. Powering and accelerating this new innovation paradigm are the DBTL cycle and continuous learning.

The world faces other big problems, too, starting with climate change. Deep tech’s potential for disruption is unprecedented, and the breadth of problems it could address remains for us to uncover.