The effective use of benchmarks could be a key source of competitive advantage for investors and end users.

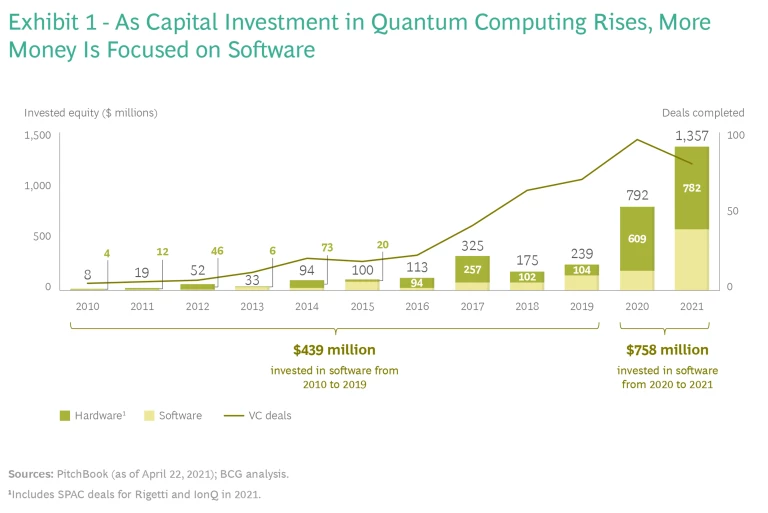

Interest and investment in quantum computing continue to rise. The technology attracted almost twice as much capital in 2020 and 2021 ($2.15 billion) as it had over the previous 10 years combined ($1.16 billion). While hardware still attracts the most money, software investments jumped nearly 80% in 2020 and 2021 compared with the previous decade. (See Exhibit 1.) These are significant indicators that practical applications are nearing. Gartner predicts that 20% of organizations will be budgeting for quantum computing projects by 2023.

There is still a long and expensive road ahead. To reach broad quantum advantage, we anticipate that an additional $10 billion must still be invested in developing hardware, and that end users will need to budget for quantum computing at twice the rate that they are today. As they assess the potential impact of quantum computing from a business and financial point of view, investors and end users have two big needs. One is business-related: How do they acquire the knowledge required to confidently invest in, or partner with, individual providers? The other is technological: What’s the best way to assess the maturity of individual providers’ technologies, and which hardware platform is best suited for each of many different potential applications?

Performance benchmarking (assessing the absolute and relative capabilities of different platforms or systems) has proved useful in assessing other deep technologies. We believe performance benchmarking can accelerate progress in quantum computing. The key is designing benchmarks that are useful (they tell users what they need to know), scalable (they can expand and adapt to evolving technologies), and comprehensive (they cover all the relevant attributes). This is tricky business for such a radical and complex technology. That said, a number of organizations are already making headway, and we will likely see more benchmarking resources surface as the technology moves closer to market.

The Power and Complexity of Benchmarking

By providing an objective measure of performance, benchmarks can stimulate investment, promote healthy competition, and deepen collaboration. For example, it took more than 10 years and about $300 million to completely sequence the human genome. As recently as 2008, it cost $1 million to perform the process for a single individual. The commercialization of genomics required innovative development of less expensive techniques that still met minimum accuracy thresholds.

To this end, the National Institute of Standards and Technology worked with a group of researchers, known as the Genome in a Bottle Consortium, to create a “reference genome” that could be used to verify the accuracy of new technologies. Other organizations, such as the Platinum Genomes Project and the Global Alliance for Genomics and Health, have since joined in the effort to create additional benchmarks, propelling development of next-generation sequencing platforms. As a result, the price of sequencing a complete human genome has dropped to about $1,000. Because benchmarks provided analytical validation of new approaches, investors and manufacturers were able to work together to enable exciting advances in personalized medicine and other applications, such as real-time COVID-19 variant tracking.

In machine learning, the application-centric MLPerf benchmark suite has become the de facto performance standard. These benchmarks measure the performance of graphics processing units on specific tasks such as image classification on a medical data set or speech recognition. By establishing a set of best practices, the suite makes it easier and faster for enterprise developers to adopt this complex, advanced technology.

Benchmarking in quantum computing is more complicated than in other fields for four key reasons. The first is the sheer variety of quantum computing systems in development today. Each uses fundamentally different underlying technologies (from laser-controlled arrays of ions to microwave-controlled spinning electrons), and each has its own strengths and weaknesses. As a result, these technologies will develop at varying rates and have different milestones for achieving full effectiveness—a second hurdle. Taking a static picture of today’s market and benchmarking performance against this state will not necessarily be indicative of future developments.

Third, much of the potential of quantum computing lies in very different types of applications—simulation versus optimization, for example—whose solutions require different quantum configurations and therefore different forms of assessment.

Finally, useful benchmarks should enable comparison between quantum computing’s potential and our current computing capabilities. This is challenging because progress in classical computing continues not just through hardware advancements (which were steady and predictable thanks to Moore’s law) but also algorithmic advances (such as Google’s AlphaFold), which make more efficient use of that hardware.

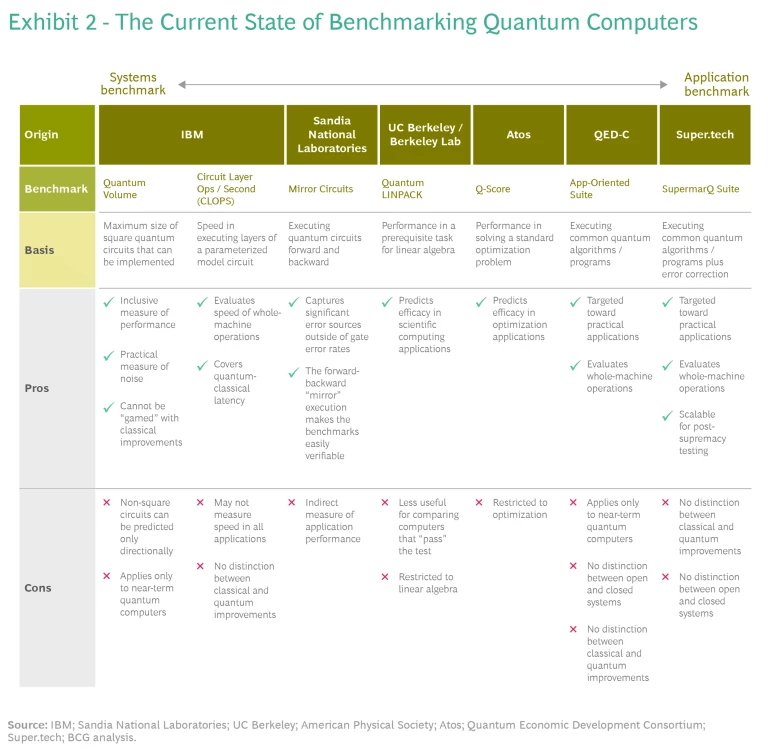

These four factors both make benchmarking quantum computers challenging and point to how critical effective means of comparison are for investor and end user decision making. One clear signal of their importance is the move by the US Defense Advanced Research Projects Agency (DARPA) to start a quantum benchmarking program “to establish key quantum-computing metrics and then make those metrics testable” in order to “better understand, quantitatively, the impact of quantum computers decades down the road.” Understanding the state of the technology today, identifying the hardware that’s best for a given use case, and comparing one hardware provider to another all require a very complex calculus. No surprise, then, that a number of companies, academic institutions, and industry consortia have emerged with solutions to simplify these comparisons from a systems and an applications perspective. (See Exhibit 2.)

Two Types of Benchmarks

In its early days, classical computing faced some of the same benchmarking challenges, which were solved by developing two types of benchmarks. Early systems benchmarks helped users, vendors, and investors sort through a proliferation of manufacturers and computer systems by comparing base hardware performance with such metrics as instructions per second (IPS). Since these benchmarks tell only part of the story—a computer’s peak speed in IPS can differ significantly from its speed handling realistic workloads, for example—it quickly became necessary to develop application benchmarks to help compare computer performance for specific tasks. Today, we use a combination of systems and application benchmarks to identify the best solution for classical computing needs.

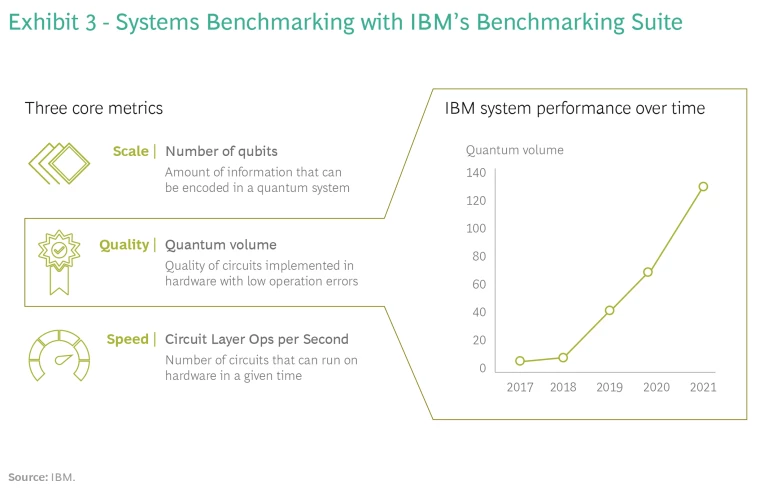

A similar dynamic is at work in quantum computing. System benchmarks measure fundamental characteristics of a quantum computer. For example, IBM has a suite of system benchmarks that cover scale (number of qubits), quality, and speed. (See Exhibit 3.) Quality is measured by what IBM calls “quantum volume,” a metric that gauges the maximum size of square quantum circuit that a quantum processing unit (QPU) can reliably sample with a probability greater than 67%. Speed, introduced in late 2021, is measured in circuit layer operations per second (CLOPS), which corresponds to how fast a QPU can execute circuits.
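The quantum volume idea described above can be illustrated with a minimal sketch. It assumes the metric is reported as 2 to the power of the largest square circuit size that passes, treats the 67% figure as a two-thirds threshold, and ignores the statistical confidence checks a real measurement would need. The function name, the input format, and the sample probabilities are hypothetical and are not taken from IBM's tooling.

```python
from typing import Mapping

# Assumption: the article's "greater than 67%" is read as a two-thirds threshold.
PASS_THRESHOLD = 2 / 3


def quantum_volume(success_prob_by_size: Mapping[int, float],
                   threshold: float = PASS_THRESHOLD) -> int:
    """Return 2**n for the largest n-by-n circuit size whose measured
    success probability exceeds the threshold.

    Returns 1 (that is, 2**0) when no size passes; this is a convention
    of this sketch, not part of any official definition.
    """
    passing_sizes = [n for n, p in success_prob_by_size.items() if p > threshold]
    return 2 ** max(passing_sizes) if passing_sizes else 1


# Hypothetical measurements: circuit size (width = depth) -> success probability.
measured = {2: 0.80, 3: 0.74, 4: 0.69, 5: 0.61}
print(quantum_volume(measured))  # sizes 2 through 4 pass, so this prints 16
```

In this reading, a device that passes at size 4 but not at size 5 would report a quantum volume of 16, which is why the metric grows exponentially with each additional qubit of reliable circuit size.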

Such measures enable assessments of machine performance without regard to specific use cases and provide a clear record of progress. They are useful since many applications of quantum computing are sensitive to time-to-solution. For example, in the case of Shor’s algorithm, which is commonly used to benchmark computational power because of its inherent complexity, the best estimate predicts an eight-hour run time for superconducting qubits, while trapped ion qubits, which have a lower CLOPS value, are estimated to take one year. That is a massive difference between two alternative quantum computing technologies.

Systems benchmarks are the most descriptive and the most general benchmarks available. They enable technology vendors, code developers, and others to compare things like circuit depth, circuit width, and gate density. However, they are often hard for end users to employ, not only because they tend to be technical, but also because they are general. By design, they abstract the devices from the contexts in which end users will use them.

Enter application benchmarks. This approach provides a view of how well a quantum computer will perform at specific tasks. As such, application benchmarks can help end users and investors evaluate the performance of an entire system and measure performance based on real-world use cases, thus providing significant value beyond merely assessing quantum advantage. For example, while the quantum LINPACK benchmark measures the computer’s ability to perform a key prerequisite computational task for linear algebra, Q-score, a benchmark developed by Atos, measures performance on a full optimization problem by assessing the maximum number of variables a quantum processor can optimize.

We already have signs that application benchmarks are among the best tools to help companies determine which devices best meet their quantum computing needs. Application benchmarks have shown that Advanced Quantum Testbed’s quantum computer may outperform IonQ’s machine when simulating quantum systems, while the reverse may be true for combinatorial optimization problems.

Standards Will Evolve—If They Receive a Little Help

Until quantum advantage is clearly established for practical use cases, classical high-performance computers provide a standard to help users and investors track progress toward practical advantages in quantum computing. In addition, these comparisons help monitor efficiency in the classical components of a quantum computer since an efficient interface between classical and quantum devices is another prerequisite for quantum effectiveness.

That said, as the new technology continues to develop, quantum computations will eventually outpace classical yardsticks. Future benchmarks will need to be able to track progress purely by comparing quantum devices against each other. As Joe Altepeter, program manager in DARPA’s Defense Sciences Office, has said, “If building a useful quantum computer is like building the first rocket to the moon, we want to make sure we’re not quantifying progress toward that goal by measuring how high our planes can fly.”

We predict that application benchmarks will be a key focus in accelerating progress for the quantum computing market, encouraging and helping to prioritize investment. But—and this is a significant but—this will require manufacturers to permit their machines to be benchmarked for public review. Hardware providers will need to adopt an ethos of openness if the concept of benchmarking as a “progress accelerator” is to be kicked into high gear.

The Suite Spot

Investors and end users need to evaluate quantum machines from the point of view of their specific goals. Given the myriad potential applications for quantum computing, it is important to develop a suite of application benchmarks for a broad range of use cases.

A few organizations are active in the development of benchmark suites. The US Quantum Economic Development Consortium (QED-C), which was established as a result of 2018’s National Quantum Initiative Act, has developed a suite of application benchmarks targeted toward practical applications and based on executing common quantum algorithms or programs.

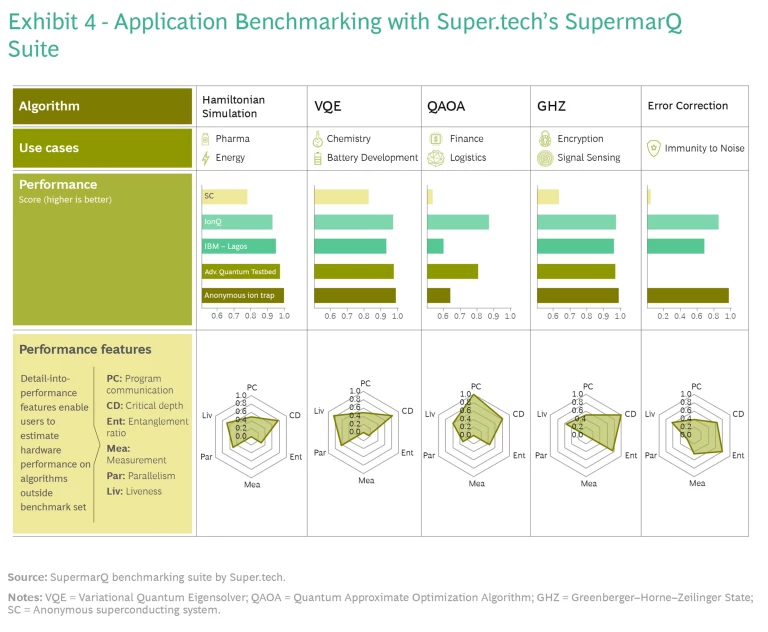

Another example is SupermarQ, a new suite of application benchmarks from the quantum software startup Super.tech that applies techniques from classical benchmarking methodologies to the quantum domain. Four design principles (scalability, meaningfulness and diversity, full-system evaluation, and adaptivity) shape the selection and evaluation of applications, which cover a range of algorithmic approaches relevant to real-world use cases in finance, pharma, energy, chemistry, and other sectors. (See Exhibit 4.) These algorithms cover most of today’s use cases. But sophisticated developers can also refer to performance features (such as critical depth or entanglement ratio) that help them project how each of the benchmarked systems (IonQ versus Advanced Quantum Testbed, for example) would perform on any algorithm, even custom algorithms outside the benchmark set. SupermarQ also features an error correction benchmark, a proxy for practical readiness that indicates how far a device has to go on the road to fault tolerance.
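To make two of the performance features named above concrete, here is a minimal sketch. The article does not define them, so the definitions used are assumptions based on how these features are commonly described: entanglement ratio as the share of all gates that are two-qubit gates, and critical depth as the share of two-qubit gates that lie on the longest path through the circuit. The circuit representation and function names are hypothetical and do not come from the SupermarQ code base.

```python
from typing import Dict, Sequence, Tuple

# Hypothetical circuit format: each gate is (name, qubits it acts on).
Gate = Tuple[str, Tuple[int, ...]]


def entanglement_ratio(circuit: Sequence[Gate]) -> float:
    """Fraction of gates that act on two qubits (assumed definition)."""
    if not circuit:
        return 0.0
    two_qubit = sum(1 for _, qubits in circuit if len(qubits) == 2)
    return two_qubit / len(circuit)


def critical_depth(circuit: Sequence[Gate]) -> float:
    """Fraction of two-qubit gates on the longest path (assumed definition).

    Assumes every gate acts on at least one qubit. When several paths are
    equally long, the one with the most two-qubit gates is used.
    """
    total_two_qubit = sum(1 for _, qubits in circuit if len(qubits) == 2)
    if total_two_qubit == 0:
        return 0.0
    # Per qubit: (length of longest path ending here, two-qubit gates on it).
    frontier: Dict[int, Tuple[int, int]] = {}
    for _, qubits in circuit:
        depth, on_path = max(frontier.get(q, (0, 0)) for q in qubits)
        state = (depth + 1, on_path + (1 if len(qubits) == 2 else 0))
        for q in qubits:
            frontier[q] = state
    _, two_qubit_on_longest = max(frontier.values())
    return two_qubit_on_longest / total_two_qubit


# Three-qubit GHZ state preparation: one Hadamard, then a chain of CNOTs.
ghz = [("h", (0,)), ("cx", (0, 1)), ("cx", (1, 2))]
print(entanglement_ratio(ghz))  # 2 of the 3 gates are two-qubit gates
print(critical_depth(ghz))      # both CNOTs sit on the longest path: 1.0
```

A circuit with a critical depth near 1 is dominated by sequential two-qubit gates, so under these assumed definitions its performance would depend heavily on two-qubit gate fidelity and coherence time rather than on parallelism.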

The Critical Decision Factors

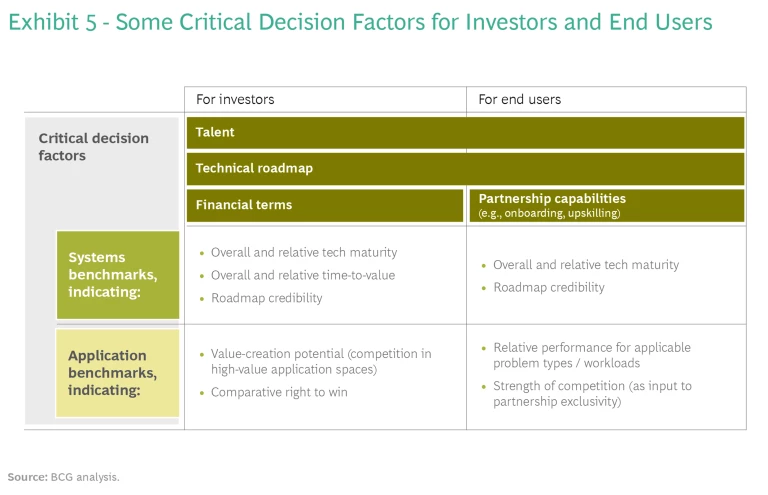

When assessing quantum computing technologies and the companies behind them, investors and end users must consider a series of critical decision factors. (See Exhibit 5.) Each should assess the rigor and ambition of the provider’s technical roadmap and the talent and reputation of the provider team. The financial terms of any deal are key for investors, of course, while scoping the full benefits of a partnership, including such issues as talent sharing and onboarding programs, is an important concern for end users.

As quantum computers mature, benchmarks on existing hardware will become a more important part of the calculus. For example, investors can use systems benchmarks to gauge tech maturity and potential time to value. They can use application benchmarks to gauge the value creation potential of a given technology (for instance, whether it is in the lead in applications with use cases in high-value markets like financial services). End users will want to leverage application benchmarks to decide which hardware yields the best performance on algorithms relevant to their workloads (such as the Variational Quantum Eigensolver for chemistry). Individual funds and companies may have their own criteria as well.

Once they set their priorities, investors and end users should develop a comprehensive but measured approach that balances system and application benchmarks, leveraging the work of organizations such as IBM, Super.tech, QED-C, and others to determine which computers best align with their workloads. Similarly, investors can identify which benchmarks are best suited for solutions in which they want to put their money and track those metrics to measure progress over time.

Breakthroughs in quantum computing have accelerated exponentially in recent years. But these rapid advances are accompanied by both skepticism and misleading hype. The effective use of benchmarks will be a source of competitive advantage for investors and end users. Trustworthy benchmarks can also accelerate progress across the entire ecosystem by stimulating investment in the right technologies, promoting healthy competition, and deepening collaboration, much as they did in genomics and machine learning. Effective benchmarking is critical to both celebrating true advances and mitigating the impact of misleading information that could undermine the interests of potential investors and users.

The authors are grateful to IBM and Super.tech for their assistance with this article.