Recent advances in generative AI have created an intense challenge for companies and their leaders. They are under commercial pressure to realize value from AI quickly and public pressure to effectively manage the ethical, privacy, and safety risks of rapidly changing technologies. Some companies have reacted by blocking some generative AI tools from corporate systems. Others have allowed the use of these tools to develop organically under loose governance and oversight. Neither extreme is the right choice.

The AI revolution is still in its early days. Companies have time to make responsible AI a powerful and integral capability. But management consistently underestimates the investment and effort required to operationalize responsible AI. Far too often, responsible AI lacks clear ownership or senior sponsorship. It isn’t integrated into core governance and risk management processes or embedded in the frontlines. In this gold rush moment, the principles of responsible AI risk becoming merely a talking point.

For the third consecutive year, BCG conducted a global survey of executives to understand how companies manage this tension. The most recent survey, conducted early this year after the rapid rise in popularity of ChatGPT, shows that on average, responsible AI maturity improved marginally from 2022 to 2023. Encouragingly, the share of companies that are responsible AI leaders nearly doubled, from 16% to 29%.

These improvements are insufficient when AI technology is advancing at a breakneck pace. The private and public sectors must operationalize responsible AI as fast as they build and deploy AI solutions. As to how companies should respond, four themes emerged from the survey and from two dozen interviews with senior leaders. (This article summarizes a fuller treatment of the findings in MIT Sloan Management Review.)

CEO Engagement Matters

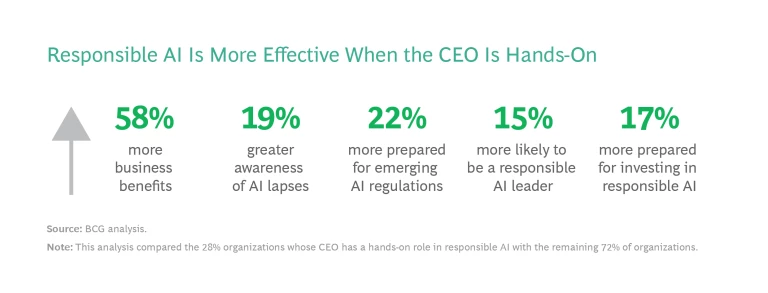

Of the companies in our survey whose CEO is actively engaged in responsible AI, nearly four out of five—79%—reported that they are prepared for AI regulation. (See the exhibit.) That share drops to 22% among companies whose CEO is not involved.

Slightly more than one-quarter of CEOs—28%—play a hands-on role in responsible AI programs through hiring, target setting, or product-level discussions. These companies report 58% more business benefits from their responsible AI activities than other companies.

Engaged CEOs can help sustain the investment and focus that responsible AI requires. They also ensure clear lines of authority and decision rights. Today, responsibility is often distributed among legal, compliance, data analytics, engineering, and other functions. As they do in cybersecurity and ESG, CEOs can amplify the effectiveness of responsible AI programs by using their megaphone and influence.

Third-Party Tools Are Necessary but Must Be Carefully Managed

More than three-quarters of companies—78%—reported that they rely on external AI tools and services; more than half—53%—have no internal AI development, so they rely exclusively on AI vendors.

AI is quickly becoming a scale business. Relatively few organizations have the talent and resources to compete, so even those with strong AI capabilities frequently require third-party tools to meet specific needs. But these tools can be risky: they are implicated in 55% of AI failures, the ethical or technical lapses that can land a company in regulatory, PR, or other kinds of trouble.

Vigilance can help prevent such mishaps. Our survey found that the more thoroughly a company evaluates third-party tools, through measures such as vendor certification and audits, the more AI failures it discovers. Unfortunately, companies are doing too little preventive oversight. Two-thirds—68%—perform three or fewer checks on third-party AI solutions, so the true failure rate is likely much higher than reported.

Companies can rely on existing third-party risk management processes to evaluate AI vendors, but they also need AI-specific approaches like audits and red teaming. These approaches need to be dynamic and flexible, because AI is changing rapidly.

Responsible AI Takes Time and Resources, So Start Now

Generative AI has democratized AI by putting it into the hands of all employees, not just the tech cognoscenti. This access exposes the challenges of shadow AI—unauthorized development and use that are unknown to the company’s management and governance teams.

Responsible AI needs to be built into the fabric of the organization. As with cyberattacks, human error contributes to many AI failures. Improving responsible AI awareness and measures requires changes in operations and culture. These changes take an average of three years to implement, so companies need to get to work.

What’s Good for Society Is Good for Business

Many countries, and even local municipalities, are contemplating AI regulations. It pays to get ahead of them. Heavily regulated industries—health care and financial services, as well as the public sector—report higher levels of responsible AI maturity than other industries. They have stronger risk management practices and fewer AI failures.

But treating AI only as a compliance problem misses the mark. Responsible AI enables faster innovation and minimizes risk. Farsighted companies will ride the coming wave of regulation by following responsible business practices. They will satisfy lawmakers, shareholders, and the public with products and services that are innovative and safe. Remember, racecar drivers go fast because they trust their brakes.