As generative AI (GenAI) and other disruptive technologies rapidly transform the business landscape, companies are recognizing the strategic imperative of workforce reskilling. Indeed, according to a 2023 BCG survey, 75% of respondents plan to make significant investments in talent retention and development. Yet historically, even significant investments in upskilling programs have often yielded disappointing results. One key reason is that companies haven’t found a reliable way to measure their programs’ impact, despite many attempts to create such mechanisms over the past 50 years.

Through our work with clients, we have developed a three-step approach to help companies better measure “return on learning investment” (ROLI) for some dimensions of organizational upskilling programs. ROLI enables companies to: 1) identify upfront the business outcomes or impact they are looking to achieve; 2) define the metrics they will use to hold the program accountable to that impact and measure progress; and 3) determine whether that impact has been achieved.

With these ROLI principles in mind, companies can design upskilling programs with demonstrable impact on revenues, costs, customer satisfaction, and innovation speed, ultimately improving margins and delivering on other business objectives.

The Challenges with Traditional Approaches

It’s not hard to see why companies traditionally have had limited success measuring the impact of their upskilling programs. First, the measurement mechanism itself is very difficult. It’s one thing to track the time an employee spends in an upskilling program. But tracking them through their workflow to determine how they’ve applied their new skill sets is another thing altogether.

Even if it were possible to determine that a person’s performance had improved after the upskilling program, it’s not easy to distinguish correlation from causation. Because controlled studies often aren’t realistic, it’s hard to know whether the upskilling program was responsible for the change or whether other factors, such as motivation, manager input, or market dynamics, played a role.

Another issue is that the impact of any single upskilling program isn’t typically immediately apparent. That’s because the upskilling cycle takes time—both acquiring the skills and then putting them to work in a way that has a business impact. And because leaders have different motivations for investing in upskilling, there isn’t a universally recognized measure of success or even an approach for tracking impact. Some leaders look for productivity improvements, others look for better retention, and still others look to boost their brand.

Despite these challenges, organizations that draw a clear connection between the skills being learned and specific KPIs can measure the impact of upskilling programs on three fronts: their own employees; the maturity of the ecosystem (how fast the organization can upskill and adapt compared with its competitors); and business performance.

While all three are undeniably important, business impact is paramount, given the substantial investment needed to build capabilities at scale. For example, an upskilling program to facilitate a digital transformation in a global company can require an investment of millions of dollars. Only programs that can credibly unlock meaningful value for employees and the enterprise will get the green light.

Return on Learning Investment

The ROLI approach can address these challenges and help the C-suite and other leaders track the effectiveness of upskilling interventions through a three-step process.

Identify the target business impact. Before embarking on any upskilling program, organizations first need to establish the business impact they will measure after the program is over. This step is key because no two programs will have the same objectives or expected impact. Each program we’ve built and deployed with a client has had a unique ambition meant to support the company’s particular business strategy and people objectives. Some clients wanted to quantify the business value of specific GenAI use cases within the context of the upskilling program, while others were more interested in general upskilling in topics such as climate and sustainability. Still others, like BCG U’s own Rapid and Immersive Skill Enhancement (RISE) Program, aim to build high-demand business and digital skills that enhance employability in sought-after fields. In our experience, most companies’ impact targets (except for compliance) fall into one of four categories:

- Specific business objectives, such as delivering on a digital transformation, enabling AI use cases, transforming the frontline field force, boosting customer satisfaction, and broadly positioning the company as a magnet for top talent

- Productivity, including anything that makes employees perform better in their roles

- Retention, such as specific target reductions in attrition, whether across the board or in specific roles

- Speed to mastery, for example, the number of days saved in an employee’s development, from onboarding to full mastery

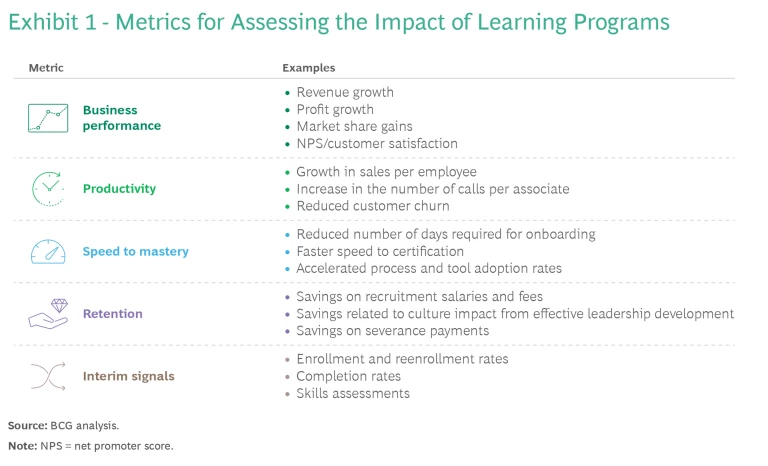

Define metrics and milestones. Next, organizations need to define the time-bound metrics they will use for holding the program accountable for the desired impact. Priority should be given to metrics that best capture the targeted end results—the specific business objectives, productivity, and so on. (See Exhibit 1.)

But it’s also critical to measure any progress that is made in the course of a program or soon after it ends. Such progress is best captured through what we call “interim signals of impact.” These are essentially leading indicators that predict outcomes, in the same way that the number of times a product ad is viewed can be used to predict higher sales. It’s important to include such signals since it will likely take some time for the impact to fully manifest.

This is where organizations can best put traditional metrics to use. For example, enrollment and reenrollment rates can provide a sense of progress, as can periodic skills assessments.
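To make “time-bound metrics” and “interim signals of impact” concrete, the sketch below records each metric with a baseline, a target, and a deadline, and reports how much of the baseline-to-target gap has been closed. It is a minimal illustration, not a BCG tool: the `Metric` class, its field names, and the `progress` and `status` helpers are hypothetical, and any sample values are made up.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Metric:
    """A time-bound metric. Field names are illustrative, not a standard schema."""
    name: str
    kind: str        # "outcome" (targeted end result) or "interim" (leading indicator)
    baseline: float  # value before the program starts
    target: float    # value the program is accountable for reaching
    deadline: date   # date by which the target should be reached


def progress(metric: Metric, current: float) -> float:
    """Share of the baseline-to-target gap closed so far (1.0 means target reached).

    Works for metrics that should rise (skills scores) and fall (attrition).
    """
    gap = metric.target - metric.baseline
    if gap == 0:
        return 1.0
    return (current - metric.baseline) / gap


def status(metric: Metric, current: float, today: date) -> str:
    """Classify a metric as achieved, still in progress, or missed after its deadline."""
    if progress(metric, current) >= 1.0:
        return "achieved"
    return "missed" if today > metric.deadline else "in progress"
```

Because progress is measured as a share of the gap, the same helper works for metrics that should fall: with an attrition baseline of 18% and a target of 15%, an observed 16% counts as two-thirds of the way there.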

Determine whether the impact has been achieved. The third step is to assess whether the upskilling program has achieved the targeted impact. This can be challenging, in part because not all programs need to be measured with the same level of rigor, so the right level has to be chosen for each one. That level depends on the importance of the upskilling intervention. For example, a company-wide AI upskilling program would likely need to be tracked very rigorously with A/B testing, which compares the outcomes of employees who went through the program with those of a comparable group who did not. By contrast, a lunch event meant to help employees get the most out of their work-from-home setup might need only a simple employee survey.

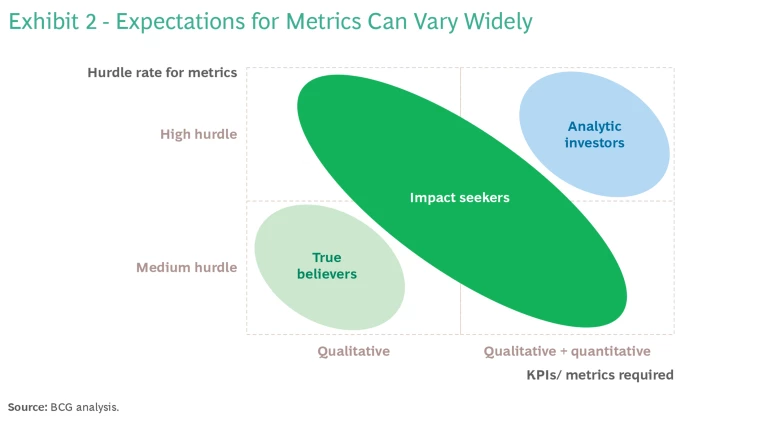

The expectations of the business leaders funding the program will likely also play a role. (See Exhibit 2.) Some leaders are what we call “true believers”: they are convinced that investment in upskilling has impact and so are satisfied with qualitative evidence. Other leaders are more skeptical. These “analytic investors” will commit only if there is unambiguous proof that the program will pay off. And then there are those in between: the “impact seekers,” who are aware of the difficulties of capturing impact and so are open to using a mix of methods.

For all these reasons, leadership teams should think about the level of rigor that’s needed as they define the metrics they’re going to use to evaluate the program.

In the case of a global energy company implementing a large-scale retail transformation, the major agents of change were frontline leaders who needed to be upskilled to run the complex initiative. After developing an upskilling program, the company used A/B testing to measure the program’s effectiveness on a representative group of frontline leaders participating in a pilot. Success was measured with a short list of core KPIs. Comparing the performance of the pilot and baseline groups, the company found that the pilot demonstrated success across all the KPIs, including profitability, which improved by 3%. The clearly high ROI made it an easy decision to scale the program across the entire organization.
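A pilot-versus-baseline comparison like this one can be summarized with two numbers: the relative lift in a KPI’s mean and a test statistic indicating whether the difference is larger than noise. The sketch below assumes one KPI value per frontline leader in each group; the function names and sample figures are illustrative, not the company’s data, and it uses Welch’s t statistic as one common choice for comparing two groups whose variances may differ.

```python
from math import sqrt
from statistics import mean, variance


def relative_lift(pilot: list[float], baseline: list[float]) -> float:
    """Relative change in the mean KPI of the pilot group versus the baseline group."""
    return mean(pilot) / mean(baseline) - 1.0


def welch_t(pilot: list[float], baseline: list[float]) -> float:
    """Welch's t statistic for the difference in group means (unequal variances allowed).

    Uses sample variances, so each group needs at least two observations.
    """
    standard_error = sqrt(variance(pilot) / len(pilot) + variance(baseline) / len(baseline))
    return (mean(pilot) - mean(baseline)) / standard_error
```

With pilot values `[103, 105, 101, 104]` and baseline values `[100, 99, 101, 100]`, the relative lift is 3.25%. Turning the t statistic into a p-value requires a t distribution, for example via SciPy’s `scipy.stats.ttest_ind(pilot, baseline, equal_var=False)`. Either number supports a causal reading only if the pilot group is representative of the wider population, as it was designed to be in the example above.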

Getting the Most out of ROLI

The specific calculations will of course vary with the company’s business goals and the types of interventions used. (For an explanation of how to do an actual ROLI calculation, see the sidebar.)

Calculating ROLI

ROLI is a ratio of a program’s impact (the numerator) to its costs (the denominator). Regarding the denominator, there are several ways to carve up the related costs. For example, companies could take a value chain view, looking at costs in terms of skills diagnosis, design, delivery, and ongoing maintenance. Costs can also be assessed by categories such as people and technology, internal and external, or direct and indirect. The simplest way to derive costs is to consider components such as the following:

- The team managing the entire upskilling value chain

- Vendors, such as trainers

- Technologies

- Direct upskilling program delivery costs

- Reduced employee productivity while employees attend the program
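The cost components listed above can be summed into the ROLI denominator. This sketch assumes every component is expressed in the same currency for the same period, and it approximates the productivity cost as the hours participants spend away from their work multiplied by a fully loaded hourly cost. That approximation, the `ProgramCosts` class, and the `productivity_cost` helper are hypothetical and for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProgramCosts:
    """Hypothetical cost breakdown for one program; all amounts in one currency."""
    team: float               # team managing the entire upskilling value chain
    vendors: float            # e.g., external trainers
    technology: float         # platforms and tools
    delivery: float           # direct program delivery costs
    lost_productivity: float  # reduced employee productivity (last item in the list)

    def total(self) -> float:
        """The ROLI denominator: the sum of all cost components."""
        return (self.team + self.vendors + self.technology
                + self.delivery + self.lost_productivity)


def productivity_cost(participants: int, hours_each: float, loaded_hourly_cost: float) -> float:
    """Assumed approximation: time away from work valued at a fully loaded hourly cost."""
    return participants * hours_each * loaded_hourly_cost
```

For example, 500 participants spending 16 hours each at a loaded cost of 60 per hour adds 480,000 to the denominator; in this made-up case that is the largest single component, which is why time away from work is worth including explicitly.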

The next step is to meaningfully quantify the numerator: the impact, including the ways it shows up in interim impact metrics. To simplify things a bit, it’s helpful to think first about ROLI in the context of a specific program rather than the entire organization. For each discrete program, ROLI is equal to the direct impact of the program (such as the boost in productivity of select employees) divided by the direct costs of the program (such as the cost of upskilling). Using this approach, companies can calculate the ROLI across multiple discrete programs.
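Following the per-program definition above, ROLI is direct impact divided by direct cost. The sketch computes it for each discrete program and, as an additional assumed portfolio view, divides total impact by total cost across programs. The `Program` class, the helper names, and any figures used with them are hypothetical; putting a monetary value on direct impact is the hard part and is taken as given here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Program:
    """A discrete upskilling program (hypothetical structure)."""
    name: str
    direct_impact: float  # e.g., monetized productivity gain of participating employees
    direct_cost: float    # e.g., total cost of upskilling for this program


def roli(program: Program) -> float:
    """ROLI for one discrete program: direct impact divided by direct cost."""
    if program.direct_cost <= 0:
        raise ValueError(f"{program.name}: direct cost must be positive")
    return program.direct_impact / program.direct_cost


def roli_by_program(programs: list[Program]) -> dict[str, float]:
    """ROLI calculated separately for each discrete program."""
    return {p.name: roli(p) for p in programs}


def pooled_roli(programs: list[Program]) -> float:
    """Assumed portfolio view: total direct impact over total direct cost."""
    total_cost = sum(p.direct_cost for p in programs)
    if total_cost <= 0:
        raise ValueError("total direct cost must be positive")
    return sum(p.direct_impact for p in programs) / total_cost
```

For two hypothetical programs, one with impact of 1,500,000 against cost of 600,000 and one with impact of 900,000 against cost of 450,000, the per-program ROLIs are 2.5 and 2.0 and the pooled figure is about 2.29. The pooled ratio weights each program by its cost rather than averaging the two ratios, and under this definition a ROLI of 1.0 means impact merely equals cost.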

To calculate ROLI for the entire organization, we recommend that companies continue to calculate ROLI at the discrete program level and add an overall benefits and costs analysis into the mix if it’s possible to gather that data. This is a challenging endeavor because of all the moving parts, so companies should proceed with caution.

This approach allows companies to calculate a more complete ROLI on individual programs, yielding more-tangible outcomes than if they simply allocated a portion of overall costs to individual programs.

No measurement approach is perfect, but we believe that ROLI does a good job of capturing business impact for most companies. To get the most out of this approach, organizations should keep in mind the following:

Understand that not everything needs to be measured. We recognize that there are many upskilling programs that leaders will invest in no matter what. For example, leadership development programs meant to create a culture of learning might be so tricky to measure that the organization could end up spending more time on measurement than on actual implementation.

Make collaboration a top priority. Business impact cannot be measured in a vacuum. Teams need to work together across the life cycle of the program, from defining the desired business impact to assessing the outcomes. Note that even when there are clear KPIs, it can be hard to draw a straight line from the intervention used to the associated business outcomes. If there is total stakeholder buy-in upfront, the outcomes won’t come as a surprise to anyone.

Be realistic about timelines. Everyone wants the upskilling program to prove its impact immediately, or at least during the intervention itself. Often, however, the improvement will not be evident until KPIs are reviewed at year-end or even later. Still, we recommend that companies set a time-bound target and stick to it: having clear KPIs and a target completion date can go a long way.

Although measuring the business impact of upskilling investments is challenging, organizations should persist in their attempts to evaluate those returns, just as they measure other business investments. Implementing ROLI wherever possible is a powerful way to start.

Do you have a story about how you effectively (or ineffectively) measured the impact of upskilling? We’d love to hear what you learned in the process, along with any examples that illustrate the impact of scaling capability building in your organization. Please feel free to reach out to one of our experts or to BCGU@bcg.com.