While there is broad consensus that artificial intelligence will transform most industries in the decades ahead, many of the companies that have invested in the technology have failed to unlock its full potential. Previous BCG research has shown that only 11% of these companies have released significant value, and the majority have failed to scale AI beyond pilots. Nor have companies reimagined the way they work with data or redefined human–AI interaction. Moreover, they have only selectively explored AI use cases and often lack a mature digital foundation, although there are significant differences across companies and industries in this regard.

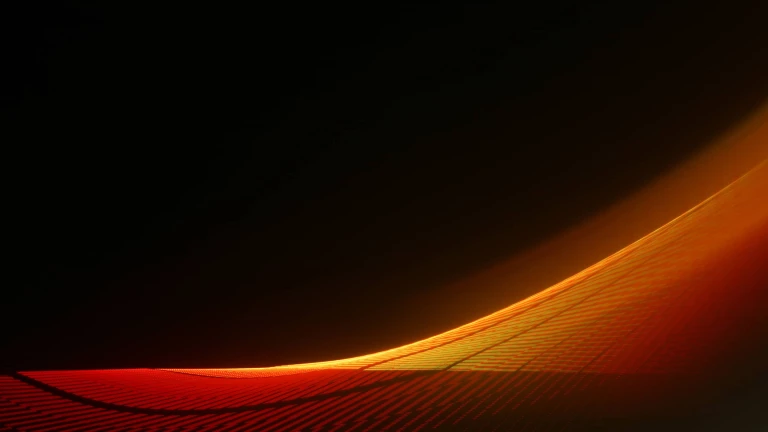

Our latest research, based on BCG’s Digital Acceleration Index, shows that companies that invest more in digital put more resources into AI; moreover, for those that are able to scale up AI, even small seed investments pay off big. (See Exhibit 1.) Modest investments in specific AI use cases can generate up to 6% more revenue, and with rising investments, the revenue impact from AI triples to 20% or more. Leading companies outperform on other KPIs as well. They yield 3 percentage points more EBIT, a lift of almost 30% compared with companies that fail to scale.

What, exactly, are these companies doing right?

A Matter of Scale

We assessed the AI maturity of more than 2,700 companies in three areas: AI use cases, AI capabilities, and the company’s digital foundation. (See “About Our Research and BCG’s AI² Acceleration Index.”)

About Our Research and BCG’s AI² Acceleration Index

We asked survey respondents to allocate their current portfolio of AI projects in six functional categories according to four maturity stages. The categories were supply chain; enterprise (HR analytics, for example); manufacturing; marketing and customer experience; products and offers (including pricing); and risk. The maturity stages were as follows:

- Ideas in Development. Solutions being defined or in development.

- Pilot MVPs. Solutions with the right features to attract early-adopter customers but not yet creating value for the organization.

- Operational. Solutions creating some financial value but whose use is still limited to individual departments.

- Scaled. Solutions deployed across the entire organization with tested frameworks and governance delivering proven financial value.
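To make the four-stage allocation concrete, the sketch below tallies a portfolio by maturity stage and reports the share of use cases in each stage. It is an illustration only: the stage identifiers, the `stage_shares` helper, and the sample portfolio are hypothetical, and this is not the scoring method behind the index.

```python
from collections import Counter

# The four maturity stages described above (identifier names are assumptions).
STAGES = ("ideas_in_development", "pilot_mvp", "operational", "scaled")


def stage_shares(portfolio):
    """Return the fraction of use cases sitting in each maturity stage.

    `portfolio` maps a use case name to one of the STAGES identifiers.
    """
    counts = Counter(portfolio.values())
    unknown = set(counts) - set(STAGES)
    if unknown:
        raise ValueError(f"Unknown maturity stage(s): {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        return {stage: 0.0 for stage in STAGES}
    return {stage: counts[stage] / total for stage in STAGES}


# Hypothetical portfolio, invented purely for illustration.
portfolio = {
    "demand_forecasting": "scaled",
    "hr_attrition_model": "ideas_in_development",
    "dynamic_pricing": "operational",
    "next_best_action": "pilot_mvp",
    "credit_risk_scoring": "scaled",
}

shares = stage_shares(portfolio)
print(f"Scaled share: {shares['scaled']:.0%}")
```

Tracking the scaled share over time is one simple way for a company to see whether its portfolio is moving beyond pilots.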

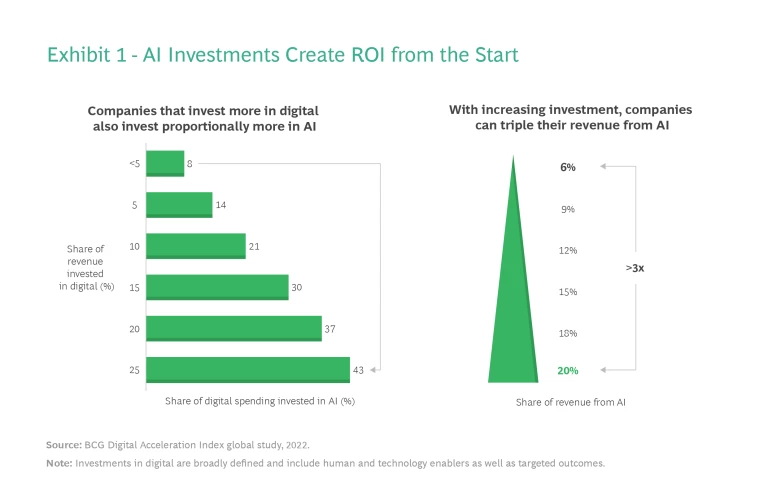

Even though we found that scaling use cases is key to generating and sustaining value from AI, most companies do not yet leverage the full potential of this approach, scoring only 35 to 45 out of 100 possible points. (See Exhibit 2.) The results are fairly consistent across industries. (With an overall score of 42, consumer goods companies are strongest and, at 36, public sector the weakest.) The results are also in line with our March 2022 assessment of companies’ digital scaling capability, which is not a surprise since, as we observed then, the ability to scale digital use cases is a prerequisite to scaling up AI.

Success is partly a matter of investment. AI leaders invest an average of 4% of revenues in AI-specific initiatives, while laggards invest only 2.7%. Some industries exhibit particularly wide gaps; in health care and energy, for example, AI leaders invest around twice as much as laggards.

Companies undertake AI use cases across the value chain, but they do so unevenly, most likely because they prioritize those with the highest ROI. Of the six functional categories that we examined, marketing and customer experience, including personalization, sees the most dynamic activity. Technology and telecom companies report implementing the highest average number of use cases (46 and 56, respectively) in this function. More typically, across all sectors, companies report 20 to 25 use cases being explored in functions such as manufacturing and operations and products and offers (pricing, for example). Supply chain and enterprise-wide functions such as HR analytics show the fewest active use cases, perhaps because they are not yet mature enough to generate substantial value or are too difficult to implement.

How Winners Stand Apart

Investment and value chain focus tell only part of the story, however. Our research revealed that the leaders in scaling and generating value from AI do three things better than other companies:

- They prioritize the highest-impact use cases and scale them quickly to maximize value.

- They make data and technology accessible across the organization, avoiding siloed and incompatible tech stacks and standalone databases that impede scaling.

- They recognize the importance of aligned leadership and employees who build and leverage AI, and they support staff who promote collaboration and end-to-end agile product delivery.

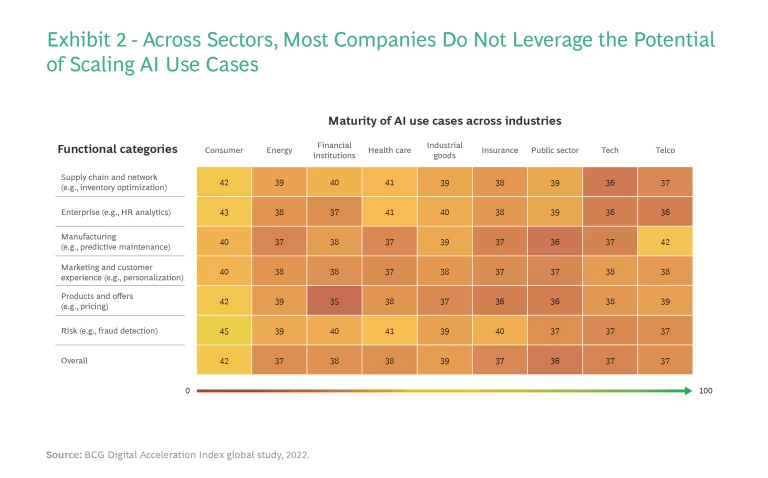

Use Case Prioritization and Scaling. Leaders distinguish themselves by scaling more than twice as many AI use cases—in large part because they scale them two to three times faster. (See Exhibit 3.) Leaders move from idea to execution at scale in a matter of months—typically just 5 to 7—while other companies take an average of 15 to 17 months. As a result of this speed advantage, leaders are able to scale up 44% of the use cases in their portfolios, more than twice the 19% of other companies.

These companies typically share three common approaches. First, they select AI use cases based on business priorities, with a rigorous focus on value. This often involves building a dedicated organizational unit to orchestrate and accelerate scaling.

Second, they establish an enablement function to ensure that, as new skills and capabilities are developed, they are available to the teams that need them across the organization. In some instances, the enablement function may assign dedicated teams to develop needed capabilities that are lacking.

Third, they employ a consistent execution model, with AI use cases running through agile build and validation cycles. Prototypes collect early end-user feedback and lead to the creation of MVPs, which add features and users as they are scaled and integrated into the operating model. Ultimately, use cases and their operating models are deployed across the organization.

Management teams should ask themselves three questions about their ability to prioritize and scale use cases:

- Do we have a systematic approach that prioritizes scaling of use cases based on value?

- Do we build use cases using a consistent agile execution model that allows for accelerated scaling?

- Do we track the use cases in the pipeline against clear objectives and key results?

Accessible Data and Technology. Leaders make data, technology, and algorithms available for use by teams across the organization. Other companies maintain siloed tech and data that impede scaling; common barriers include the use of individual data sets rather than a single accessible data pool, separately built and incompatible tech stacks, and inconsistent or redundant algorithms. Companies that make more than 75% of technology and data available have a 40% greater likelihood of realizing AI use cases at scale than those that make 25% or less widely available.

A modular data and digital platform serves as the technical foundation for data accessibility and allows for rapid release cycles of AI use cases. The platform typically uses cloud infrastructure, core systems, and clearly defined interfaces. In addition, data models are built to support all core workflows. For example, a data model for an AI-powered sales process would ensure accessibility of the relevant data during the presales phase, negotiation, deal closing, and delivery.

Three questions that management teams should ask about the accessibility of data and technology:

- Have we implemented a data and digital platform that uses clearly defined interfaces that allow teams to access and process data across the organization?

- Have we identified the data assets that provide competitive advantage, specified ownership and quality assurance, and organized them into data models that can be utilized along the full value chain?

- Have we made algorithms, and the software they are embedded in, available across the organization to avoid inconsistencies and redundant work?

Leadership and Talent. AI leaders recognize that putting the right people in the right roles is a critical foundation for success. By emphasizing human as well as technological capabilities, they seek to facilitate organizational and machine learning. The companies that dedicate 10% or more of their digital staff to AI-specific roles, and that have 30% or more of their staff utilizing AI solutions on a daily basis, generate more than twice the added EBIT (11%) of those that dedicate human resources below these thresholds. In addition, companies with aligned leadership, strong collaboration across business units and functions, and end-to-end agile product delivery increase their AI use case maturity by an average of about 25%. Aligned leadership is essential to setting priorities, such as trimming a large number of initiatives down to a few high-potential use cases that promise the most value and organizing them into an integrated roadmap.

Three questions to ask about leadership and talent:

- Have we taken all the relevant steps to attract the necessary AI talent—for example, by leveraging talent ecosystems and strengthening our employer value proposition with a focus on technical skills?

- Have we empowered the organization to build foundational technical and analytical skills and to set up a creative learning environment that fosters the use of AI solutions on a daily basis?

- Have we created an organizational environment in which teams are able to make decisions, generate new ideas for leveraging AI, and take risks, knowing that they are supported by an aligned C-suite?

AI Scaling in Practice

Two examples illustrate how leading companies put the measures described above into practice.

A European industrial goods company with global reach had made earlier attempts at digital transformation and had hundreds of digital, automation, and AI projects in the works. All were lodged in functional silos, with different operating models and lacking strategic guidance and direction. The company had made significant investments in “data platforms” managed by its IT function but had seen no financial impact, in part because it had no overarching plan or approach.

A new head of digital transformation, who reported to the CEO, had a strong operational background. He took a fundamentally different approach, comprising a half-dozen guiding principles:

- Anchor the case for change and the AI priorities at the top.

- Define the target state and the objectives and key results for top-priority cross-functional domains.

- Prove value first, then scale the capability incrementally.

- Involve people with both business and technical expertise.

- Ensure senior-management involvement to help embed change at the frontline.

- Initially locate the work in an accelerator unit outside of IT and bring it into the full organization when mature.

Senior management identified ten "lighthouse" use cases that would generate value and form the basis for a refreshing of the company’s data platform and IT operating model and organization. The use cases forced the company to identify the data assets that provided competitive advantage and establish a single, accessible database. They also helped specify the requirements for the data platform and overall architecture.

As the company eliminated the barriers to data access, it established a stronger foundation for developing and scaling the selected AI use cases. From the lighthouse pilots, a team dedicated to enablement identified missing technical capabilities and skills, such as expertise in cloud platforms, and supported the creation of new teams to fill the gaps. It also established a consistent agile execution model for developing and scaling the use cases. Over time, momentum grew as more data and tools became available across the organization, leading to a stronger foundation for developing future use cases.

A global consumer goods company operating in more than 150 markets was determined to use AI to boost revenues and profitability. It started from a position of low digital maturity, with limited data science capability and no digital use cases operating at scale. It identified 20 initial AI use cases, from which it prioritized three AI solutions to pilot:

- Marketing Budget Allocation. Where to invest in advertising and marketing to maximize return.

- Sales Force Effectiveness. Which stores to focus on in any given week and the next best action for each one.

- Product Promotion and Pricing. The optimal product price and most effective promotion calendar to maximize sales and margins in the next year.

The company framed a business case and roadmap to scope its ambition, prioritized use cases based on feasibility, size of the prize, and time to impact, and selected pilot countries. It then defined the data and IT architecture that it needed to build, designed a modeling approach, and tested MVPs in two or three pilot markets for each use case. As it proceeded, the company built out a data and tech platform that enabled scaling of use cases, global data hubs, and a cloud-based technology stack. It also developed the necessary human capabilities, including a dedicated central organization to accelerate the building and deployment of AI solutions, recruitment of about 70 data and digital experts, and launch of an ambitious “upskilling” program structured around data management, AI, and agile product ownership.
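The prioritization criteria named above (feasibility, size of the prize, and time to impact) can be combined into a simple weighted score. The sketch below is one possible way to do so, not the company's actual method: the weights, the 24-month horizon, the `UseCase` fields, and every numeric value are hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    name: str
    feasibility: float       # 0 to 1, higher means easier to build (assumed scale)
    prize: float             # 0 to 1, normalized size of the prize (assumed scale)
    months_to_impact: float  # estimated months until financial impact


# Hypothetical weights and horizon; a real program would calibrate these.
WEIGHTS = {"feasibility": 0.3, "prize": 0.5, "speed": 0.2}
MAX_MONTHS = 24.0


def score(use_case):
    """Weighted score in [0, 1]; faster time to impact scores higher."""
    speed = max(0.0, 1.0 - use_case.months_to_impact / MAX_MONTHS)
    return (
        WEIGHTS["feasibility"] * use_case.feasibility
        + WEIGHTS["prize"] * use_case.prize
        + WEIGHTS["speed"] * speed
    )


def prioritize(use_cases, top_n=3):
    """Return the top_n use cases, highest score first."""
    return sorted(use_cases, key=score, reverse=True)[:top_n]


# Illustrative inputs only; none of these numbers come from the research.
candidates = [
    UseCase("marketing_budget_allocation", 0.8, 0.9, 6),
    UseCase("sales_force_effectiveness", 0.7, 0.8, 8),
    UseCase("product_promotion_and_pricing", 0.6, 0.9, 12),
    UseCase("hypothetical_low_value_idea", 0.9, 0.2, 4),
]

for uc in prioritize(candidates):
    print(f"{uc.name}: {score(uc):.2f}")
```

A transparent score of this kind makes trade-offs explicit: an idea that is easy and fast to build can still rank low if the size of the prize is small.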

About eight months after launching its initial MVP, the company was able to deploy AI solutions in more than ten markets covering a majority of its sales and embed them in its IT systems. The program achieved results in line with financial and organizational benchmarks targeted at the outset, and all deployed AI solutions had a positive impact on financial performance.

Even small investments in AI can pay off. But making AI work requires targeting and discipline—and a focus on human skills as well as technology. A well-planned approach based on building the digital foundation and capabilities to scale up AI use cases can serve as a powerful and profitable accelerant.