Digitization is fueling innovation in industrial manufacturing, enabling firms to meet high quality standards without sacrificing process time. Analytics embedded in digitized processes are at the forefront of delivering commercial impact across the manufacturing value chain. One clear example is the transformation of traditional operations in the steel industry, where IT infrastructure, computational power, advanced analytics techniques, and new technology deliver competitive advantage, as they do in other process-based manufacturing industries.

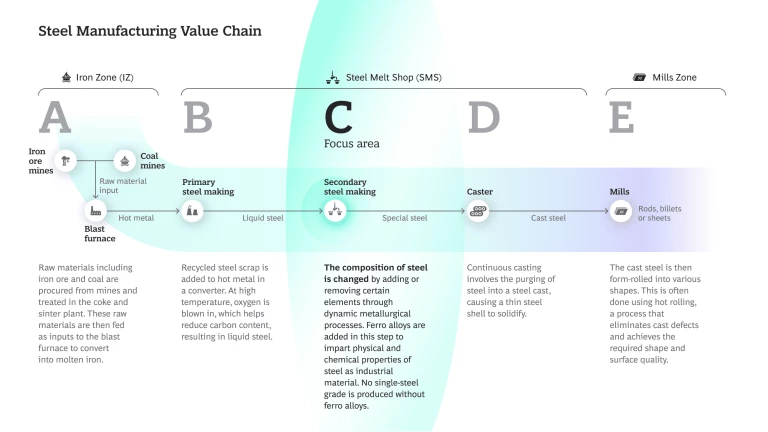

This advantage is particularly significant in secondary steel making, in which the composition of steel is changed by adding or removing certain elements through dynamic metallurgical processes. During these processes, ferro alloys (FA) are added to impart the physical and chemical properties that customers require for specific grades of steel. These additions consist of various iron alloys combined with other elements such as manganese, aluminum, chromium, and silicon.

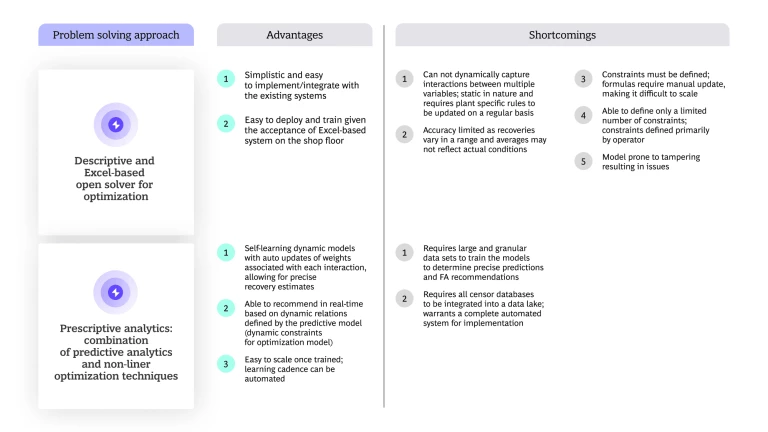

The process of adding or removing these alloys is highly complex. If steel manufacturers are to attain high standards while increasing efficiency and reducing costs, they must optimize the process by using analytics capable of guiding FA addition. Many manufacturers do so by adopting various modeling techniques. How sophisticated these techniques are depends on each manufacturer's technological maturity.

Dynamic, self-learning models reduce time and cost

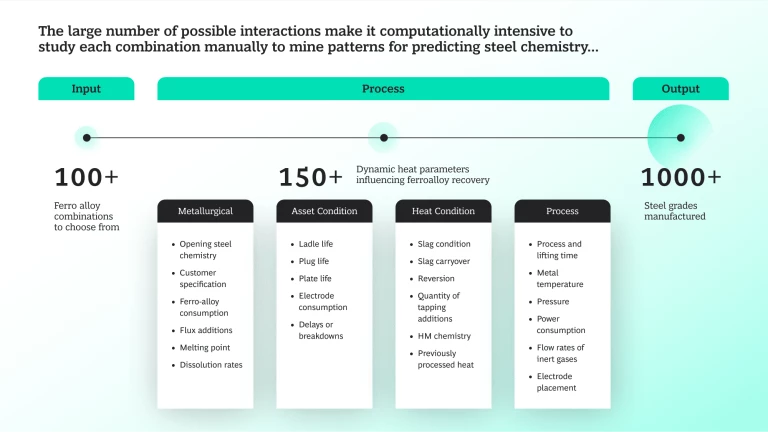

Making steel is, in some respects, like adding sweetener to a cup of coffee. You start with a cup of coffee, add a certain amount of sweetener (sugar, honey, artificial sweetener, etc.), stir the coffee, taste it, and then adjust the sweetener as needed. When making steel, you start with molten steel, add a combination of ferro alloys, allow them to mix into the liquid steel, test a sample to see if the chemistry meets the customer's requirements, and then adjust as necessary. The difference between the two is that, with more than 100 possible ferro-alloy combinations and more than 150 dynamic heat parameters, achieving the target steel mixture is far more complicated.

Less technologically mature manufacturing plants manage this process through a combination of spreadsheets and operator experience and intuition. As a result, these plants often must repeat the add/mix/test cycle numerous times to achieve the desired steel chemistry. Dynamic, self-learning models based on predictive and prescriptive analytics, on the other hand, can rapidly predict the end chemistry and prescribe the specific amount of FA needed to achieve the specific outcome. Manufacturers that take this approach have reported 2-3% improvements in process time and 2-5% reductions in ferro alloy-related costs.

The Value-Process Delivery Map

Advanced analytics techniques can quickly establish a relationship between FA-input data and steel-chemistry output data, and from it derive the FA values predicted to yield the optimum chemistry while adhering to real-world constraints and ensuring successful value delivery. To achieve this outcome, we recommend a six-step process:

1. Value-stream mapping

2. Process streamlining

3. Predictive analytics

4. Trials and fine tuning

5. Prescriptive analytics (optimization)

6. Implementation

Steps 3, 4, and 5 involve specific aspects of analytical modeling that we will explore in greater depth. Those aspects include:

- Modeling the interaction effects to predict steel chemistry

- Trials and fine tuning

- Formulation of the optimization problem to arrive at optimal FA additions

Modeling the interaction effects to predict steel chemistry

The steel-making process that a plant adopts will influence FA consumption and, subsequently, the FA-addition pattern. All plants must operate within tight constraints, with a final steel chemistry that lies between specific upper and lower limits typically based on the grade and type of steel the customer seeks.

Feature Engineering: influencers and their impact on recovery

For the accurate formulation of a model that will predict proper FA inputs, the following influencers must be understood, and the corresponding features designed:

- Metallurgical parameters: The amount of dissolved oxygen in steel dictates the loss of alloys such as silicon, aluminum, and carbon—all of which have strong oxygen affinity. The recovery of these alloys (the degree of their presence in the final steel product) varies significantly depending on the oxygen potential of the specific liquid steel formulation.

- Process parameters: Because the slag that sits on the surface of molten metal contains oxides, its condition plays a vital role in the recovery of alloys.

- Dynamic heat conditions: The purging flow rate of inert gases such as argon and nitrogen helps in the homogeneous mixing of alloys in steel, while the extent of purging plays an important role in the amount of FAs dissolved in the steel.
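To make the notion of recovery concrete, the sketch below computes it for a single element in a single heat. The formula is one common mass-balance definition (element gained by the steel divided by element added through the alloy), not necessarily the one used in the authors' models. The function name, parameters, and all numbers are illustrative assumptions.

```python
def alloy_recovery(initial_pct, final_pct, steel_mass_kg, alloy_mass_kg, element_fraction):
    """Fraction of the added element that ends up dissolved in the steel.

    Assumed mass-balance definition:
        recovery = element gained by the steel / element added via the alloy
    """
    element_added_kg = alloy_mass_kg * element_fraction
    if element_added_kg <= 0:
        raise ValueError("no element was added, recovery is undefined")
    # Chemistry is reported in weight percent, so divide by 100 to get a mass.
    element_gained_kg = (final_pct - initial_pct) / 100.0 * steel_mass_kg
    return element_gained_kg / element_added_kg


# Hypothetical heat: 150 t of steel, Mn raised from 0.10% to 0.60%
# by adding 1,000 kg of a ferro-manganese alloy assumed to hold 78% Mn.
recovery = alloy_recovery(
    initial_pct=0.10,
    final_pct=0.60,
    steel_mass_kg=150_000,
    alloy_mass_kg=1_000,
    element_fraction=0.78,
)
print(f"Mn recovery: {recovery:.1%}")
```

A recovery computed this way per heat can serve as a model target or a diagnostic feature, alongside influencers such as dissolved oxygen, slag condition, and purging.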

Create homogeneous clusters

To achieve scale and avoid developing an individual model for each grade of steel, we recommend creating homogeneous clusters: grades of steel that are grouped together based on the similarity of their chemistry. These clusters can then be modeled together. In doing so, it is imperative to strike the right balance between accuracy and scale, a determination that is typically made in conjunction with business owners. Given the high number of alloys, we recommend reducing each alloy to its elemental form as a way to generate more information and address data scarcity.
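Reducing alloys to their elemental form can be sketched as a simple lookup and sum. The alloy names and element fractions below are illustrative assumptions, not plant data or supplier specifications. In practice the fractions would come from each alloy's certified analysis.

```python
# Assumed element fractions per alloy (illustrative values only).
ALLOY_COMPOSITION = {
    "FeMn": {"Mn": 0.75, "C": 0.07},
    "FeSi": {"Si": 0.75},
    "SiMn": {"Mn": 0.65, "Si": 0.17},
}


def to_elemental(additions_kg):
    """Convert alloy additions (kg per alloy) into elemental masses (kg per element)."""
    totals = {}
    for alloy, mass_kg in additions_kg.items():
        for element, fraction in ALLOY_COMPOSITION[alloy].items():
            totals[element] = totals.get(element, 0.0) + mass_kg * fraction
    return totals


# Hypothetical heat with two alloy additions.
heat_additions = {"FeMn": 400.0, "SiMn": 200.0}
elemental = to_elemental(heat_additions)
print(elemental)
```

Because many alloys share the same few elements, heats that used different alloys contribute to the same elemental features, which is how this step helps with data scarcity.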

The Random Forest algorithm

Based on the pipeline of data and new features, we recommend comparing various algorithms and choosing the one that best balances model complexity against accuracy in predicting the output chemistry. Individual models can be built to predict the chemistry for each FA. We have found that Random Forest achieves the best results, delivering consistent performance across non-linear data sets. (See below: White-boxing Random Forest equations.)

Note: For the final model, the important features are evaluated on data not used for training. This precaution helps ensure stability once the model is deployed in production. Multiple metrics are used to evaluate model performance, first on training data, then on test data, and finally on unseen data such as trials.
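The evaluation across training, test, and trial data might look like the sketch below. The source does not say which metrics are used. Mean absolute error and a "hit rate" within a tolerance are assumptions chosen for illustration, as are the split names and the manganese percentages.

```python
def mean_absolute_error(actual, predicted):
    """Average absolute gap between measured and predicted chemistry."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def hit_rate(actual, predicted, tolerance):
    """Share of heats whose prediction falls within +/- tolerance of the lab result."""
    hits = sum(1 for a, p in zip(actual, predicted) if abs(a - p) <= tolerance)
    return hits / len(actual)


def evaluate_splits(splits, tolerance):
    """Compute both metrics for every named split."""
    report = {}
    for name, (actual, predicted) in splits.items():
        report[name] = {
            "mae": mean_absolute_error(actual, predicted),
            "hit_rate": hit_rate(actual, predicted, tolerance),
        }
    return report


# Hypothetical measured vs. predicted Mn content (weight %) per split.
splits = {
    "train": ([0.60, 0.55, 0.70, 0.65], [0.61, 0.54, 0.69, 0.66]),
    "test": ([0.58, 0.62, 0.71], [0.60, 0.61, 0.66]),
    "trial": ([0.59, 0.64], [0.63, 0.65]),
}
report = evaluate_splits(splits, tolerance=0.03)
for name, metrics in report.items():
    print(name, metrics)
```

A widening gap between the training metrics and the test or trial metrics is the kind of signal that would prompt a closer look before deployment.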

Trials and fine tuning

Before starting the optimization build, we recommend studying your model predictions for applicability to live heats. These studies should typically last for 1-2 months to ensure robustness of the predictions given current heat conditions. Rapid trials for testing each hypothesis are followed by multiple discussions with business owners to identify the root cause of any prediction errors. Based on the discussions, new features should be built into the model to improve accuracy of the chemistry prediction. We also recommend checking regularly for appropriateness and applicability of the predictions. As a general rule, the model should be checked once every six months—or more frequently if necessary.

Formulation of the optimization problem to arrive at optimal FA additions

Creating an optimal ferro-alloy recommendation requires leveraging the relations from the predictive model to arrive at an FA combination that will achieve the target chemistry at minimum cost. This scenario results in two complex problems:

- With a solution pool of more than 1,500 possible combinations, a heuristic approach would take hours or, perhaps, days.

- Most optimization problems are modeled and solved using pre-defined equations between the decision variables and the process parameters. If the relationship is unknown and an ML model is used to derive the interactions, the ML model must be converted into a form that can be fed as input to the optimization model.
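One way to write the problem down, as a sketch rather than the authors' exact formulation, is shown below. All symbols are assumptions introduced here: $x_j$ is the mass of ferro alloy $j$ to add, $c_j$ its unit cost, $z$ the fixed heat parameters, $\hat{y}_e(x, z)$ the predicted final content of element $e$ from the predictive model, $\mathrm{LSL}_e$ and $\mathrm{USL}_e$ the lower and upper limits for that element, and $x_j^{\max}$ a plant-specific cap on each addition.

```latex
\begin{aligned}
\min_{x} \quad & \sum_{j=1}^{J} c_j \, x_j \\
\text{s.t.} \quad & \mathrm{LSL}_e \le \hat{y}_e(x, z) \le \mathrm{USL}_e, && e = 1, \dots, E \\
& 0 \le x_j \le x_j^{\max}, && j = 1, \dots, J
\end{aligned}
```

The second problem in the list concerns $\hat{y}_e$: when it is a tree ensemble rather than a closed-form equation, it has to be re-expressed as constraints a solver can read.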

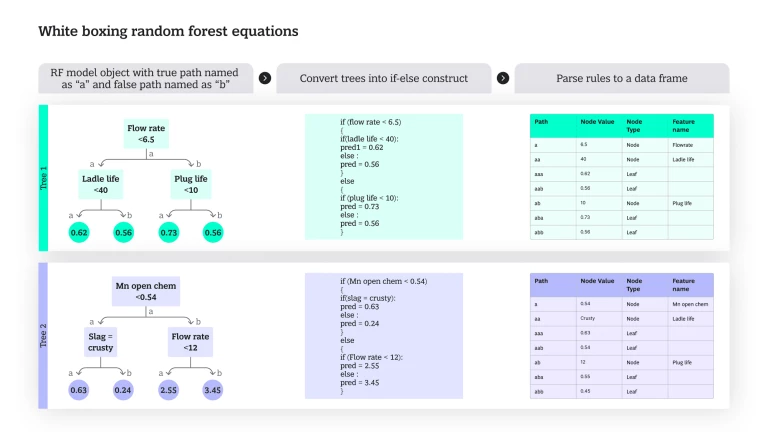

White-boxing Random Forest equations

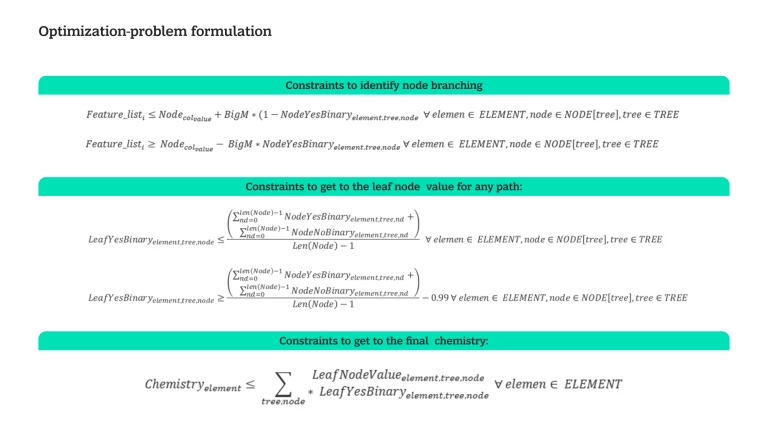

Optimization problems have four components: decision variables, the objective, the bounds, and the constraints. To define the constraints, the Random Forest must be white-boxed by converting its branches into constraints. This involves converting each decision into if/else rules and parsing them into a pandas DataFrame. The accompanying image depicts the steps to convert an RF pickle object into a pandas DataFrame. Each row of the DataFrame is then converted into constraints, which are fed as input into a commercial solver such as CPLEX to arrive at the optimal FA additions.
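The branch-to-rule step can be illustrated on a single tree. The sketch below uses a hand-written nested dictionary as a stand-in for one fitted tree. It does not read a real Random Forest pickle, and the feature names, thresholds, and leaf values are hypothetical. It follows the usual convention that the left branch means "feature <= threshold".

```python
# Hypothetical tree predicting final Mn content (weight %).
TREE = {
    "feature": "FeMn_kg", "threshold": 500.0,
    "left": {"value": 0.45},
    "right": {
        "feature": "dissolved_O_ppm", "threshold": 30.0,
        "left": {"value": 0.62},
        "right": {"value": 0.58},
    },
}


def tree_to_rules(node, conditions=()):
    """Return one row per leaf: the split conditions on its path and its prediction."""
    if "value" in node:  # leaf node
        return [{"conditions": list(conditions), "prediction": node["value"]}]
    feature, threshold = node["feature"], node["threshold"]
    left = tree_to_rules(node["left"], conditions + ((feature, "<=", threshold),))
    right = tree_to_rules(node["right"], conditions + ((feature, ">", threshold),))
    return left + right


def predict_from_rules(rules, sample):
    """Evaluate the flattened rules; exactly one row matches any sample."""
    checks = {"<=": lambda v, t: v <= t, ">": lambda v, t: v > t}
    for rule in rules:
        if all(checks[op](sample[f], t) for f, op, t in rule["conditions"]):
            return rule["prediction"]
    raise ValueError("no rule matched the sample")


rules = tree_to_rules(TREE)
for rule in rules:
    print(rule)

sample = {"FeMn_kg": 650.0, "dissolved_O_ppm": 22.0}
prediction = predict_from_rules(rules, sample)
```

The list of rows can be loaded into a table with `pandas.DataFrame(rules)`. Turning each row into solver constraints is a further step that this sketch does not cover.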

Optimization-problem formulation

The number of decision variables and constraints in the optimization model can be represented by the following formula:

- Number of constraints = (# of nodes in 1 tree) x number of trees x number of models x 4. For example, assuming trees of depth 5 (63 nodes each), 200 trees per model, and five models (one per predicted element), we will have approximately 63 x 200 x 5 x 4 = 252,000 constraints.

- Number of decision variables = (# of features) x number of predictive models x 6 (2 constraints for each of USL, LSL, and Aim, where USL and LSL are the upper and lower specification limits). Assuming five models with 15 features in each, we will have approximately 15 x 5 x 6 = 450 decision variables.
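The sizing arithmetic above can be reproduced with a few helper functions, which makes it easy to see how the problem grows with tree depth or forest size. The function names are hypothetical, and the multipliers 4 and 6 are taken from the formulas above as given. The node count assumes every tree is a full binary tree.

```python
def full_tree_nodes(depth):
    """Nodes in a full binary tree of the given depth (root at depth 0)."""
    return 2 ** (depth + 1) - 1


def constraint_count(nodes_per_tree, n_trees, n_models, per_node=4):
    """Approximate constraint count, using the multiplier of 4 from the text."""
    return nodes_per_tree * n_trees * n_models * per_node


def decision_variable_count(n_features, n_models, per_feature=6):
    """Approximate variable count: 2 for each of USL, LSL, and Aim gives 6."""
    return n_features * n_models * per_feature


nodes = full_tree_nodes(depth=5)
constraints = constraint_count(nodes, n_trees=200, n_models=5)
variables = decision_variable_count(n_features=15, n_models=5)
print(nodes, constraints, variables)  # 63 252000 450
```

Raising the depth from 5 to 6 roughly doubles the node count to 127, and with it the number of constraints, which is one reason tree depth is worth limiting when the model must feed an optimizer.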

Because this formulation is computationally intensive for an open-source solver, we recommend a commercial solver such as Gurobi or CPLEX to handle the complexity of the resulting mixed-integer problem.

Whichever of these solvers proves viable can then be integrated with the Python predictive model and triggered whenever a new heat is processed. The result is a fully automated solution, with each step validated through trials. Because every steel-making plant has a unique fingerprint, every plant-specific constraint must be accounted for to realize the full benefit of this approach.

Results: Potential unlocked and the way forward optimized

The solution we have outlined above can help kickstart the digital transformation journey of any manufacturer, whether it is engaged in the production of steel, paint, chemical fertilizer, or any other similarly complex product. The savings accrued from such a journey can be invested in further improvements to the digital infrastructure.

In any of these industries, impact can be measured by the reduction in the number of testing samples required to achieve the target chemistry. This reduction can lead to shorter process times that, in turn, can help increase the number of batches produced each day. The more precise targeting made possible by digitization can also reduce the cost of expensive inputs, such as ferro alloys in the case of steel production.

The combination of predictive analytics and optimization will enable manufacturers in many industries to find new ways to reap the benefits of digitization. Manufacturers can capitalize on this journey through agile development and deployment of analytical solutions, giving technologically mature manufacturing plants a sustainable competitive advantage.